
Cleanup unused container images built for Firebase Function following firebase deploys #3404

I just enabled billing and switched to Node 10 for my Cloud Functions... A couple of hours later I looked at billing and I'd used GBs of bandwidth... This scared the hell out of me. I immediately used up all of my free tier and more.

|

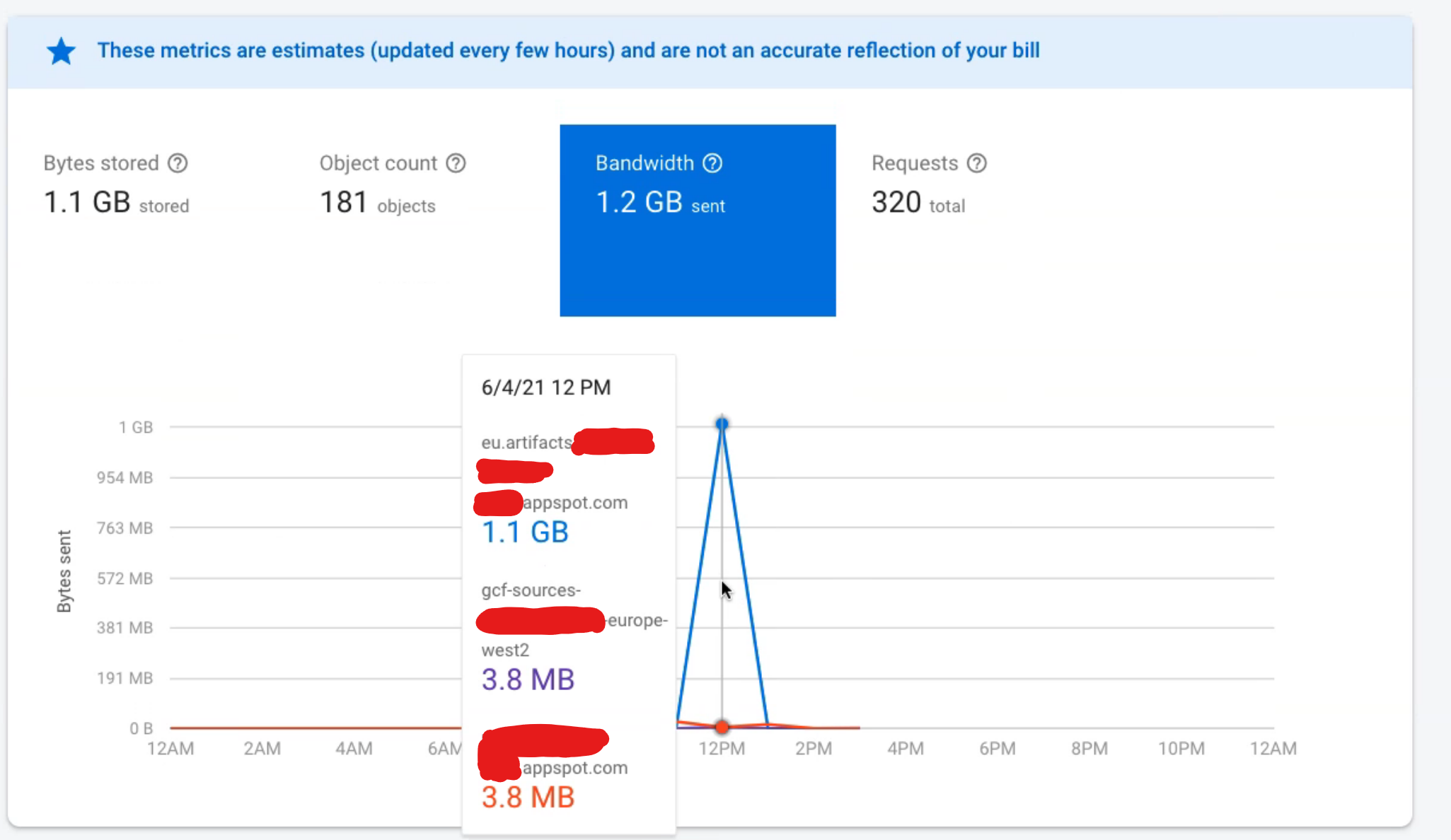

I am not sure, how can i check? All i can see in firebase is that eu.artifacts.PROJECT_NAME.appspot.com had a massive spike right after I deployed my functions for the first time with node 12. I am seeing this on the firebase storage usage dashboard page. My actual firebase bucket, PROJECT_NAME.appspot.com, had practically no usage during this time. |

Correct, Google Container Registry is what GCF uses to build your images, and those are stored in the "artifacts" bucket. As far as I can tell from the pricing page, the bandwidth you're seeing should be free (see "Data moves from a Cloud Storage bucket located in a multi-region to a different Google Cloud service located in a region, and both locations are on the same continent."). This bug isn't about saving you bandwidth cost; it's about saving the few pennies it costs to leave those images around.

It looks like I can no longer see my activity as it was during the previous month. I can, however, see that my free trial has lost some of its credit. I have read that the billing report becomes available on the 5th of each month, so I will update you with what it says and whether this was the storage download. A few of the answers talked about deleting the container images manually, but I was unsure which ones were okay to remove. Will this new update remove old images that were created by previous deploys, or will it only remove new ones created by firebase deploys after the update is installed?

Somewhere in between. The Cloud team worries that the Cloud customers may want to use the docker images for their own testing, so the update preserves images for functions we don't know about. So if you redeploy your functions it'll clean up all the history for those functions, but if you had previously deleted a function we won't clean it up retroactively. If you want to manually clean up images, please do not clean them up from Google Cloud Storage. You need to clean them up from Google Container Registry instead. You can do this at https://console.cloud.google.com/gcr. To fully wipe out all images, you'll need to go to the |

@inlined Hi again. I had to update some functions today (fewer than 20) and the crazy bandwidth usage occurred again... I got some screenshots this time. Does this still mean the usage is free? Also, in the GCR folders there are subdirectories (such as cache, worker) and what look like multiple versions of my functions. Will deleting all of this affect the version that is currently running?

Hey all, I'm not 100% sure, but I can definitely see that images created by Firebase Functions deploys are getting deleted automatically. So maybe there is progress on this issue. In the meantime I wrote a script that I run before each deploy. The script goes over my container images and:

Here's the script in case you need it:

```javascript
const { spawn } = require("child_process");

// Number of most recent (non-`latest`) images to keep per image name
const KEEP_AT_LEAST = 2;

const CONTAINER_REGISTRIES = [
  "gcr.io/<your project name>",
  "eu.gcr.io/<your project name>/gcf/europe-west3",
];

async function go(registry) {
  console.log(`> ${registry}`);
  const images = await command("gcloud", [
    "container",
    "images",
    "list",
    `--repository=${registry}`,
    "--format=json",
  ]);

  for (const { name: image } of images) {
    console.log(`  ${image}`);
    let tags = await command("gcloud", [
      "container",
      "images",
      "list-tags",
      image,
      "--format=json",
    ]);
    const totalImages = tags.length;

    // do not touch `latest`
    tags = tags.filter(({ tags: labels }) => !labels.includes("latest"));

    // sort newest first
    tags.sort(
      (a, b) =>
        new Date(b.timestamp.datetime).getTime() -
        new Date(a.timestamp.datetime).getTime()
    );

    // keep the KEEP_AT_LEAST newest images, delete the rest
    tags = tags.slice(KEEP_AT_LEAST);
    console.log(`  For removal: ${tags.length}/${totalImages}`);

    for (const tag of tags) {
      console.log(`  Deleting: ${formatImageTimestamp(tag)} | ${tag.digest}`);
      await command("gcloud", [
        "container",
        "images",
        "delete",
        `${image}@${tag.digest}`,
        "--format=json",
        "--quiet",
        "--force-delete-tags",
      ]);
    }
  }
}

// Runs a command and resolves with its stdout, parsed as JSON when possible.
// stderr is collected separately: gcloud writes status messages there, and
// mixing them into stdout would make JSON.parse fail.
function command(cmd, args) {
  return new Promise((resolve, reject) => {
    const ps = spawn(cmd, args);
    let stdout = "";
    let stderr = "";
    ps.stdout.on("data", (data) => (stdout += data));
    ps.stderr.on("data", (data) => (stderr += data));
    ps.on("error", reject); // e.g. gcloud not found on PATH
    ps.on("close", (code) => {
      if (code !== 0) {
        reject(new Error(`${cmd} exited with code ${code}: ${stderr}`));
        return;
      }
      try {
        resolve(JSON.parse(stdout));
      } catch (err) {
        resolve(stdout);
      }
    });
  });
}

// Formats gcloud's timestamp fields as YYYY-MM-DD HH:MM
function formatImageTimestamp(image) {
  const { year, month, day, hour, minute } = image.timestamp;
  const pad = (n) => String(n).padStart(2, "0");
  return `${year}-${pad(month)}-${pad(day)} ${pad(hour)}:${pad(minute)}`;
}

(async function () {
  for (const registry of CONTAINER_REGISTRIES) {
    await go(registry);
  }
})().catch((err) => {
  console.error(err);
  process.exitCode = 1;
});
```

And by the way, I'll experiment with using the script in my Cloud Build runs. I have plenty happening there and I'll need to clean up. P.S.
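The deletion decision in the script above can be pulled out into a pure function, which makes it easy to dry-run against saved `list-tags` output before deleting anything. A minimal sketch: `selectDigestsForDeletion` is a hypothetical name, and it assumes each entry carries the `digest`, `tags` and `timestamp.datetime` fields the script already reads, with `datetime` parseable by `Date`.

```javascript
// Hypothetical helper mirroring the script's selection rules:
// skip anything tagged `latest`, sort newest first, keep the first
// `keepAtLeast` entries and return the digests of everything older.
function selectDigestsForDeletion(entries, keepAtLeast) {
  return entries
    .filter(({ tags }) => !(tags || []).includes("latest"))
    .sort(
      (a, b) =>
        new Date(b.timestamp.datetime).getTime() -
        new Date(a.timestamp.datetime).getTime()
    )
    .slice(keepAtLeast)
    .map(({ digest }) => digest);
}

// Example with made-up digests and timestamps
const sample = [
  { digest: "sha256:latest", tags: ["latest"], timestamp: { datetime: "2021-05-04T10:00:00Z" } },
  { digest: "sha256:old", tags: [], timestamp: { datetime: "2021-05-01T10:00:00Z" } },
  { digest: "sha256:new", tags: [], timestamp: { datetime: "2021-05-03T10:00:00Z" } },
  { digest: "sha256:mid", tags: [], timestamp: { datetime: "2021-05-02T10:00:00Z" } },
];
console.log(selectDigestsForDeletion(sample, 2)); // [ 'sha256:old' ]
```

Because `filter` returns a new array, the in-place `sort` never reorders the caller's input.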

@akauppi I have no idea, I assume not? How can I check? On the Cloud Build page on GCP it looks like I'm not...

@febg11 Then it’s not that.

When you deploy Firebase Functions/Cloud Functions with nodejs10 and above, you are using Cloud Build under the hood. It's mostly an implementation detail, but when deploys do go wrong, it's nice that you have access to exactly what went wrong, since the image build is happening in your project.

@febg11 I think the graphs you posted are a bit misleading. Yes, there is data transfer in some of your buckets, but I believe it is all internal traffic (e.g. from Google datacenter to Google datacenter) and you won't be billed for any of that. It's unfortunate that we can't filter out "internal traffic" from the charts. I couldn't find a way to do so from metrics emitted from Cloud Storage, but I'll see if there are ways for us to only show "billable" usage.

Here are some notes I made about this (explicitly using Cloud Build). This may or may not apply to this issue, and I'm sorry if I'm only creating noise. But Google datacenter to Google datacenter traffic is certainly not always free.

I calculated at the time that each build I ran on Cloud Build cost me 0.10 eur. My solution was to push Docker builder images to US instead, which reduced costs to 0.

This seems like a lot, tbh. If possible, I would suggest deleting the current project and creating a new one in the same region. I use Firebase Functions extensively and I don't seem to be charged for that.

Let's not focus on me; my situation is under control. The point is that if images are in a regional Container Registry and Cloud Build uses them (from US), there's potential for problems. Also, the 0.10 eur/build is BS. The pricing shows that level for 0..1TB. My bad!

So because my artifacts bucket and functions are in Europe, I will get charged because Cloud Build runs in the US? Every time I deploy a small change it's going to cost me?

@akauppi You are right that not all Google datacenter <-> datacenter traffic is free. Network traffic that crosses regions is billed against the user's project. But what source are you quoting in this comment?

I had thought that for each function deploy, a Cloud Build job is created in the respective region. So my function that runs in us-central1 will trigger a Cloud Build job in us-central1, while an asia-northeast1 function will get its image from a Cloud Build job in asia-northeast1. I tested deploying functions in us-central, europe-west1, and asia-northeast1, and I noticed that a Cloud Build job was triggered in each respective region. In this scenario, I don't expect any billable network egress. Is there something I'm missing?
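The billing reasoning in this exchange can be written down as a small decision function: same-region traffic is not expected to be billed, traffic from a multi-region bucket to a region on the same continent is free according to the pricing-page quote earlier in the thread, and cross-region traffic is billed. This is a deliberately simplified sketch, not the Cloud Storage pricing rules; the location shape, the `continent` field, and the conservative "anything else may be billable" default are assumptions made for illustration.

```javascript
// Simplified, hypothetical model of the rules discussed in this thread.
// A location is { kind: "region" | "multi-region", name, continent }.
function mayBeBillableTransfer(src, dst) {
  // Same location (e.g. us-central1 -> us-central1): no egress expected.
  if (src.name === dst.name) return false;
  // Multi-region bucket -> region on the same continent: quoted as free.
  if (
    src.kind === "multi-region" &&
    dst.kind === "region" &&
    src.continent === dst.continent
  ) {
    return false;
  }
  // Everything else (e.g. cross-region) is treated as possibly billable.
  return true;
}

const usCentral1 = { kind: "region", name: "us-central1", continent: "NA" };
const europeWest1 = { kind: "region", name: "europe-west1", continent: "EU" };
const euMulti = { kind: "multi-region", name: "eu", continent: "EU" };

console.log(mayBeBillableTransfer(usCentral1, usCentral1)); // false
console.log(mayBeBillableTransfer(euMulti, europeWest1)); // false
console.log(mayBeBillableTransfer(usCentral1, europeWest1)); // true
```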

@taeold I don’t think you are missing anything. There are two Cloud Build contexts at play, and it’s as confusing as two balls in a football game (just watching a match…). I brought the second ball, which is using Cloud Build in CI/CD. I’ll remove it now. Field is all yours! ⚽️

@akauppi Thanks for clarifying. @febg11 I don't think you are going to be billed for network usage when creating and updating functions. Instead, you will be billed at the end of the month for storage of the container images you've got (viewable from Container Registry). After this feature is implemented, you will likely not see any additional charges (make sure to clean up old images; the FR will only clean up new images).

Great, looking forward to the update :) Do you think the Firebase console UI could be updated to not reflect this usage? It will probably mislead others in the future.

@febg11 That's a great point; I agree it's misleading. I'll bring it up internally.

Hi @krasimir, I just tried running your script, and for each of my functions it just prints

@febg11 17 functions is quite a lot. I think it's normal to have 3GB of storage.
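As a rough sanity check on that number: 17 functions at roughly 180 MB of image each (a hypothetical figure) come to about 3 GB. A minimal sketch of the arithmetic; the per-image sizes and the price per GB-month are inputs you would take from your own Container Registry listing and the current pricing page, and treating 1 GB as 1024 MB is an assumption.

```javascript
// Hypothetical estimator: total registry storage and its monthly cost.
// imageSizesMB: one entry per stored image; pricePerGBMonth: supplied by you.
function estimateStorage(imageSizesMB, pricePerGBMonth) {
  const totalMB = imageSizesMB.reduce((sum, mb) => sum + mb, 0);
  const totalGB = totalMB / 1024; // assumption: 1 GB = 1024 MB
  return { totalGB, monthlyCost: totalGB * pricePerGBMonth };
}

// 17 functions, one ~180 MB image each (illustrative numbers only)
const { totalGB } = estimateStorage(new Array(17).fill(180), 0);
console.log(totalGB.toFixed(2)); // 2.99
```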

Fix is in the next version of

Nice. However, I didn't see a drop in my storage after redeploying; it's still hovering around 2 GB. Am I safe to delete everything in eu-artifacts..appspot.com, or is this actually still being used?

I wonder if this is because a previously deleted function is pinning an Ubuntu image in Cloud Storage. We only clean up images for functions that are part of the current deployment. Can you go through the Google Container Registry panel (NOT the Cloud Storage panel) and delete everything? After a redeploy, your storage should hopefully go back down.
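The cleanup rule described here (only functions in the current deployment get their old images cleaned up) explains why images from previously deleted functions linger. A minimal model of that rule, assuming each image can be attributed to a function name; `partitionForCleanup` and the `functionName` field are hypothetical, not how the Firebase CLI actually identifies images.

```javascript
// Hypothetical model: images are { functionName, digest }.
// Only images of functions in the current deploy are cleanup candidates;
// images of functions not in the deploy (e.g. deleted ones) are left behind.
function partitionForCleanup(images, deployedFunctionNames) {
  const deployed = new Set(deployedFunctionNames);
  const candidates = [];
  const leftBehind = [];
  for (const image of images) {
    if (deployed.has(image.functionName)) {
      candidates.push(image);
    } else {
      leftBehind.push(image);
    }
  }
  return { candidates, leftBehind };
}

const images = [
  { functionName: "api", digest: "sha256:1" },
  { functionName: "onSignup", digest: "sha256:2" },
  { functionName: "deletedLastMonth", digest: "sha256:3" },
];
const { leftBehind } = partitionForCleanup(images, ["api", "onSignup"]);
console.log(leftBehind.map((i) => i.digest)); // [ 'sha256:3' ]
```

In this model, anything in `leftBehind` is what you would still need to delete by hand from the Container Registry panel.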

@inlined I'm experiencing the same issue as @febg11. I don't see the container images anymore, but the drop didn't happen. What else can we do?

Hi @vidrepar. Mine seems to have dropped, but it took a few days... It's around 600 MB now :) Maybe check again after a few days to see whether the Firebase Storage UI reflects the changes.

Do the firebase tools automatically clean up artifacts when the deployment fails? I had a battle last week trying to get my functions to deploy, and now I'm left with > 600MB of artifacts hanging around and a peak bandwidth consumption of 877MB, which seems excessive for four 5-line cloud functions :/

@dgkimpton You are right, we don't try to clean up images if the deploy fails :(. We are brainstorming ways to make it easy to clean up artifacts left behind by Firebase/Google Cloud Functions. Stay tuned!

Ah, that explains things. Rather than providing a way to clean up gracefully, what about a way to simply blat all the existing cloud function images and easily reinitialise? Sometimes the brute-force approach is the easiest ;)
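For the brute-force route, one low-risk approach is to generate the delete commands first and review them before running anything. A sketch that reuses only the `gcloud container images delete` flags already used in the script earlier in this thread; `buildDeleteArgs` is a hypothetical helper, and the image name and digest in the example are made up.

```javascript
// Hypothetical helper: turns { image, digest } pairs into argument arrays
// for `gcloud container images delete`, suitable for spawn("gcloud", args).
// Note: --quiet means gcloud will not prompt before deleting.
function buildDeleteArgs(entries) {
  return entries.map(({ image, digest }) => [
    "container",
    "images",
    "delete",
    `${image}@${digest}`,
    "--quiet",
    "--force-delete-tags",
  ]);
}

const plan = buildDeleteArgs([
  { image: "eu.gcr.io/my-project/gcf/europe-west3/abc", digest: "sha256:1" },
]);
// Print the commands for review instead of executing them
for (const args of plan) console.log(["gcloud", ...args].join(" "));
```

Returning argument arrays rather than a single shell string avoids quoting problems if the plan is later passed to `spawn`.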

Previously (a few months ago) I deployed functions on the free Spark plan without any warnings; now I get "Unhandled error cleaning up build images. This could result in a small monthly bill if not corrected. You can attempt to delete these images by redeploying or you can delete them manually at console.cloud.google.com/gcr/images/project-functions/us/gcf Function URL (app(us-central1)): us-central1-project-functions.cloudfunctions.net/app". I have deleted them but still get this error. Is billing really required now?

@code-by Yes, with the deprecation of the Node 8 runtime (announced a year ago), Firebase Functions does not work without a Blaze plan. Most users will not need to modify their code to migrate to a supported Node.js runtime, but do check out https://cloud.google.com/functions/docs/migrating/nodejs-runtimes for guidance. As for the error message, we are tackling the issue here #3639.
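The cleanup error quoted above points at `console.cloud.google.com/gcr/images/project-functions/us/gcf` for a us-central1 function, and the script earlier in the thread uses `eu.gcr.io/<your project name>/gcf/europe-west3` for a europe-west3 one. Extrapolating from just those two examples, here is a sketch for guessing the repository to pass to `--repository=`. The prefix-to-host mapping and the `gcf/<region>` path are assumptions, so confirm the path in the Container Registry console before deleting anything.

```javascript
// Hypothetical helper, extrapolated from two examples in this thread.
// Returns undefined when the region prefix is not in the assumed mapping.
function guessGcfRepository(projectId, region) {
  const hostsByPrefix = {
    "us-": "us.gcr.io",
    "europe-": "eu.gcr.io",
  };
  const prefix = Object.keys(hostsByPrefix).find((p) => region.startsWith(p));
  if (prefix === undefined) return undefined;
  return `${hostsByPrefix[prefix]}/${projectId}/gcf/${region}`;
}

console.log(guessGcfRepository("my-project", "europe-west3"));
// eu.gcr.io/my-project/gcf/europe-west3
```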

I have the same problem: a single function deploy with only a console.log hello world cost me 408MB of bandwidth. I'm scared to continue. I have:

For better or worse, this is working as designed. Cloud Functions uses Cloud Build, which stores your images in Google Container Registry (soon to be Artifact Registry). All of that happens in your project, and it requires a full Ubuntu image to be stored.

On each function deploy, I see that I'm left with container images in Google Container Registry (https://console.cloud.google.com/gcr) that are several hundred MB per function. They eat up a significant portion of my total Google Cloud Storage usage.

Related issues I've seen on Stack Overflow:

I don't see a use case for these images, and I'd like to have them cleaned up automatically on each deploy.
