Compress world data to reduce loading time using socket compression options for large data transfer events. #5942

Comments

|

It pains me when someone submits an issue and says "should be simple", because whenever those cursed words are included in the issue description, it's never simple. Socket.io supports automatic compression of "large" messages (gated by default at 1024 bytes or greater), which was added in 1.4.0; see https://socket.io/blog/socket-io-1-4-0/ I have experimented with customizing the compression rules with some custom per-message deflate options, but this (1) did not work in my experimentation and (2) has several reported memory leaks associated with it, so I was deterred from investigating further. See websockets/ws#1617 and websockets/ws#1369 |

|

Originally in GitLab by @manuelVo How about a custom compression stack? There are several pure JS compression libraries out there (or even wasm ones, for better performance). All that would be necessary would be to put the world JSON through the respective compression function and convert the compressed blob to base64 (or better, ascii85, for better space efficiency) to send it through socket.io's text channel. When receiving the world, the client just needs to do the same thing in reverse: unbase64, decompress, then JSON decode; that should be it. Or am I missing something? (Apparently socket.io has a binary mode that could be used to send the compressed data without using base64 or something similar, but I haven't investigated that thoroughly, so this might be wrong) |
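The round trip described above can be sketched roughly as follows. This is only an illustration: it uses Node's built-in `zlib` as a stand-in for whichever pure JS or wasm library a browser client would need, and `packWorld`, `unpackWorld`, and the sample data are hypothetical names, not anything from Foundry's code.

```javascript
const zlib = require("zlib");

// Sending side: JSON-encode, deflate, then base64 so it can travel as text.
function packWorld(world) {
  const json = JSON.stringify(world);
  const compressed = zlib.deflateSync(Buffer.from(json, "utf8"));
  return compressed.toString("base64");
}

// Receiving side (reverse order): un-base64, inflate, then JSON-decode.
function unpackWorld(payload) {
  const compressed = Buffer.from(payload, "base64");
  const json = zlib.inflateSync(compressed).toString("utf8");
  return JSON.parse(json);
}

// Hypothetical sample data with repetitive text, like long item descriptions.
const sampleWorld = {
  actors: Array.from({ length: 50 }, (_, i) => ({
    name: `Actor ${i}`,
    description: "A lengthy description text. ".repeat(20),
  })),
};

const packed = packWorld(sampleWorld);
console.log(JSON.stringify(sampleWorld).length, "->", packed.length);
```

Base64 adds roughly a third on top of the compressed size, which is why a binary channel would be preferable if one is available.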

|

It's a possibility to explore, yes. I don't think we will prioritize it for v9, but maybe if we have some extra bandwidth we can take this up and explore a custom compression/decompression approach. |

|

Originally in GitLab by @stwlam Might it be viable to send the initial world data payload over http, with all subsequent data going through a websocket connection? At least then the data could be compressed if piped through a reverse proxy. |

|

Originally in GitLab by @Weissrolf The latter suggestion would maybe even work if all database files were initially sent over via HTTP. Because their compressed total size would still be lower than the uncompressed snippet currently sent via websocket. |

|

Comment from @ben_lubar

Feature Summary

My friend is setting up a D&D game on Foundry, and so far the UI seems really responsive, except for a very long initial load time. I opened my browser's developer tools to investigate and found that the client sends However, if I take the response and pass it through gzip, I get a file that is only 13708208 bytes (under 10 seconds per player). I believe this can be done automatically using the perMessageDeflate option in socket.io, although that may negatively affect the performance of the messages that aren't multiple megabytes in length. Additionally, the packet seemed to have a lot of unnecessary data, such as objects containing only empty strings or zeroes or nulls as field values and data the client almost definitely doesn't need like Windows filesystem paths. (they're not long individually, but they add up!) Filtering the data that gets sent could save a lot of space (and therefore loading time) without the downsides of perMessageDeflate. As a third possible solution, the initial state could be presented as a difference from some static file, so that the websocket would only need to describe the differences between the current state and "world state snapshot 1", where the static file would be cacheable by a proxy server or a web browser unlike a websocket message.

User Experience

There does not need to be any user-visible effect of this feature other than the game loading faster.

Priority/Importance

This is firmly in the "would be nice to have" tier of importance. |
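To make the filtering idea concrete, here is a minimal sketch of stripping empty values before sending. `pruneEmpty` is a hypothetical helper, and it assumes the client can restore defaults for missing fields, which the thread does not confirm. Zeroes are deliberately kept, since a zero is often a meaningful value.

```javascript
// Recursively drop null values, empty strings, and objects left empty.
// Array elements are kept in place so that indexes do not shift.
function pruneEmpty(value) {
  if (Array.isArray(value)) return value.map(pruneEmpty);
  if (value !== null && typeof value === "object") {
    const out = {};
    for (const [key, child] of Object.entries(value)) {
      const pruned = pruneEmpty(child);
      const isEmptyObject =
        pruned !== null &&
        typeof pruned === "object" &&
        !Array.isArray(pruned) &&
        Object.keys(pruned).length === 0;
      if (pruned === null || pruned === "" || isEmptyObject) continue;
      out[key] = pruned;
    }
    return out;
  }
  return value;
}

// Hypothetical document fragment with the kind of padding described above.
const doc = { name: "Goblin", notes: "", img: null, hp: 0, flags: { a: "", b: null } };
console.log(JSON.stringify(pruneEmpty(doc)));
```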

|

Comment from @aaclayton: The socket.io library and zlib, which it depends on, have some notable issues with memory fragmentation when using the Some context on the issue here: https://serverfault.com/questions/1066025/how-to-fix-ws-and-socket-io-memory-leak |

|

Comment from @mkahvi Compressing just the initial world data message would provide lots of benefits by itself, and if it's limited to just that it should avoid all the memory leak problems except for servers that run for months without restart. Additionally you could provide a setting for it so people can disable it if it causes problems, since this seems to be a linux specific bug? |

|

Comment from @Weissrolf Compressing down the initial database messages could squeeze them to about 15-25% of their original size using "fastest" deflate. Maybe add a single garbage collection after login/reload to get around the memory leak? But even without GC I did notice that not all data is initially transferred and that most of it is squeezed into a single large message. So only compressing that one websocket message would already help for a group of 1 GM + 4 players logging in or reloading (F5). My real-world PF2E lv1-3 adventure has 10.1 mb worth of data files, out of which 5.5 mb is FoW data for a single largish map (all other maps reset). Upon login as GM 5.34 mb of combined JSON data is sent as a single large message to my client, plus other messages adding up to 6.22 mb total. If my actors db was 60 mb worth of data then that single large message would have been over 60 mb large. PS: For comparison, all non websocket data adds up to 19.65 MB / 9.54 MB transferred in that world, so about half of it is compressed. Most of these show "Cache-Control: no cache" in their header and there doesn't seem to be much caching happening in Firefox even for large background map images. Chrome seems to cache them a bit more aggressively, only transferring 256-259 bytes per file (checking file headers I guess?). |

|

This could possibly become "bonus" territory in V10, but my past foray into per-message-deflate was stymied by the aforementioned memory leak which served as a substantial deterrent. |

|

Originally in GitLab by @zeel02 If the memory issue can't be resolved, would it be feasible to offer as a configuration option instead? A user with plenty of memory but poor bandwidth might be willing to accept the tradeoff. |

|

Originally in GitLab by @Weissrolf Does this have to be an all or nothing decision? What speaks against only compressing that single large message that includes over 95% of the total initial websocket data? I would expect the memory leak to happen due to many/all messages being compressed, but not necessarily a single one. And the HTTP idea also holds some merit if websockets is incapable of doing this. In my last example the whole database folder is compressed down to 16% its initial size via "fastest" deflate, from 10.1 mb down to 1.76 mb. That's still much smaller than the 5.34 mb of websocket data currently being sent that don't even include all data. |
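The "only compress the one large message" idea can be sketched at the application level like this. It is an illustration only, not how socket.io or Foundry implement it: `encodeMessage`, `decodeMessage`, the envelope shape, and the 1024-byte cutoff are assumptions made for the example.

```javascript
const zlib = require("zlib");

// Hypothetical cutoff: only messages at least this large get compressed.
const THRESHOLD_BYTES = 1024;

// Wrap a message in an envelope that records whether the body was deflated.
function encodeMessage(data, threshold = THRESHOLD_BYTES) {
  const raw = Buffer.from(JSON.stringify(data), "utf8");
  if (raw.length < threshold) {
    return { compressed: false, body: raw };
  }
  // Z_BEST_SPEED is zlib's fastest compression level.
  const body = zlib.deflateSync(raw, { level: zlib.constants.Z_BEST_SPEED });
  return { compressed: true, body };
}

// Undo encodeMessage: inflate only when the envelope says it is needed.
function decodeMessage(envelope) {
  const raw = envelope.compressed ? zlib.inflateSync(envelope.body) : envelope.body;
  return JSON.parse(raw.toString("utf8"));
}

const small = encodeMessage({ ping: 1 });
const large = encodeMessage({ text: "fog of war ".repeat(1000) });
console.log(small.compressed, large.compressed, large.body.length);
```

Small messages skip the compressor entirely, so only the initial world payload pays the CPU and memory cost.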

|

No decision at all is going to be made here until and unless we accomplish our stated v10 priorities and have some spare bandwidth for bonus work. If and when we take on this issue we will explore a wider variety of possibilities to try and accomplish the end goal (compressing the payload) in whatever way is best performing or least risky with regards to potential downsides. |

|

Originally in GitLab by @Weissrolf I thought I had checked that I did not disable the cache in developer tools, but then I mixed that up with the Chrome developer tools. So I had the cache enabled in Chrome, but accidentally disabled in Firefox. Sorry for the confusion and good to know it's working (cached) all properly. |

|

For reference: the Intel Celeron J3355 of my Synology DS218+ takes just 1-2 seconds to compress the data folder of a world (while data scrubbing of its other drive is running in the background). So the CPU load/time seems to be well worth the savings in transfer size. |

|

It would be great for something to happen on this issue. I have a long running campaign (over 2 years now) that has hit about 70 Mb in world data. In Australia, we don't have super-fast broadband, with fibre out of the reach of most people. Indeed we have players still on ADSL. Even in the best conditions, it can take 5 minutes to load a game, and for people on very slow connections they tend to keep trying to refresh the page after a few minutes, which just makes things worse. Adding a compression option for the websocket data would be a massive boost for performance, and help out those of us who don't have the luxury of great internet speeds. |

|

For what it’s worth there are a number of other ways to optimize load times; we can work through these with you on the discord. |

|

I modified my server code to add the "permessage-deflate" option on the socket.io server and we ran a 6 hour session last night with this updated code. All I can say is that the results were stunning! Everyone commented on how much faster things were running, and even players on the slowest of connections were able to participate in a way that they'd been unable to in the past with the standard Foundry code. I monitored memory usage over the session and couldn't see any downsides to this change. If there is indeed a memory leak problem, then its impacts appear to be overstated as far as Foundry is concerned. I'd highly recommend this change for all users. |

|

As mentioned here #5942 (comment) the Our hope is to approach this in a different way by virtue of #4314 |

|

My take on this would be that a Foundry server is never going to be used as a long-running server with large numbers of users. If there are memory problems, they're unlikely to be of such significance that they become an issue, and isn't it better to solve a real problem now with a relatively simple fix that gives users a significant performance boost? If there is a real problem with memory, then the compression could be made optional to allow users to make their own choice. At the moment, all that's on offer is that maybe the performance will be addressed at some stage in the future, because the solution available today "might" cause some unquantifiable problem now. Is there any evidence that memory fragmentation/leaks in socket.io are going to have an impact on Foundry users? I'd have no problem restarting my server before each game, or doing so on a regular basis if needed just to get some major increases in performance for users on slow connections. |

|

@esb Would you publish your approach how to enable compression? What files have to be edited how? The main benefit will be at login when the large non image/audio based db packages are transferred. Afterwards I imagine that fog of war data will benefit. No idea if the memory leak is data cumulating based or time based, but I can live with a restart from time to time. A quick fix would have been to only transfer the initial login package and then switch compression off. Now another long-term solution is being worked on, which I appreciate. But until this is implemented I would like to try the "hack" instead. |

I've heard of some people running the same world for months with no restarts or even returning to setup, so they seem very likely to encounter it if it is a serious concern. Besides that, the per-message leak upstream issue has been closed. And the other issue mentions that it has been mitigated with other changes. Though the second issue is about turning compression sync, not about the memory leak directly, so it might not reflect the memory leak status correctly. NodeJS issue 8871 might still be relevant however (or not? they don't seem to be sure themselves if it should be called a leak). Also, I'd like to have an option for enabling compression on my end if this is not enabled wholesale. Windows and Mac were from the start unaffected by this issue as far as I understand. |

|

I've enabled socket compression and the server has been running for months without a restart. This has caused zero impact in terms of memory leaks, but there has been a real gain from the reduced load times. Previously we had players on really slow connections who would struggle to participate in a game due to timeouts and data loss, and this has all gone away for them now. I understand that at this time, this is probably not a top priority, but it's a really trivial patch to the code that could be made optional and only enabled for those that want to try it. |

could you share said patch somewhere? I'd like to try this out, two of my players share a not-very-good connection. |

I too would be interested in how to enable this. EDIT:

Firefox headers suggest that it is deflating (Sec-WebSocket-Extensions: permessage-deflate is set), but it still needs around 7-8 seconds to transfer the ~43 MB of world data, which would match my up to 50 Mbit/s upload speed. The server restarts every day anyway to take a backup, so I would much rather deal with memory leakage instead of waiting for me and my players to download 43 MB 6 times. I understand that this would be horrible for the average customer without any knowledge, but an easy, even if hidden, way to enable this would be nice. EDIT2: This runs behind NGINX but I do not think that nginx uncompresses the websocket again. |

Replacing .emit() calls with .compress(true).emit() seems to have some success with perMessageDeflate enabled (at least my player said world load was faster after, we didn't check if it actually was). Though you need to be careful what you replace since there's some non-socket.io .emit() calls that break with this change. |

|

Hi, I'm running into a very similar problem with poor load times (over a minute, sometimes more, even on a good network connection) for a fairly large world. I am trying to see if the

|

|

Everything pretty much. Except the ones that had It'd be smarter to put it only in specific ones, but I didn't look that closely, just enough to not break things. |

That makes a lot of sense that you need to add compress(true). |

|

Hey folks, we are all here for the same reason to provide feedback so that Foundry VTT can be better in the future, but our GitHub issue tracker is not a forum for discussion or tutorials about how to modify our server-side code. We don't support such modifications nor can we support you if they don't work properly. |

|

While I understand the sentiment, especially from a support standpoint, I find it quite sad that discussion about how to do this, between people who are not affiliated with Foundry, is disapproved of. I bought the AV Premium module and self-host; just importing the Adventure is already around a whopping 27 MB of world data…. This is a load time of around 5-6 seconds per player on my bandwidth. Using an online tool to deflate the world data reduces the amount to around 13% of the original size. So, in my opinion this topic of modifying it myself is relevant even though this does not help on how to improve it for everyone. |

|

My original intent here was to indicate that this was a viable strategy for improving performance. There is an architectural limitation with how Foundry transfers data to the client, and this does become critical for large worlds, especially with clients who have slow connections. I have found that the I refrained from trying to provide patch instructions as this can lead to messy problems as people can end up nuking their systems. You need a fair degree of experience to perform such a modification, so I figured that if you have that experience, then you should be able to work out what to change anyway. Going forward, I would hope that this activity encourages the Foundry team to re-examine the idea of adding this option to the code. It's a very simple option to add. It's literally one line of code to enable, and a small amount extra to add an interface option to enable/disable the option. Maybe all the Foundry developers have super fast fibre connections, so it doesn't matter to them, but some of us live in poor countries like Australia which is near the bottom of the table of Internet infrastructure after a decade of neglect by the conservative government. This sort of stuff can be really important to our experience in using Foundry, so please reconsider adding it as an option. |

|

The fact that this is an open issue and not something that is closed is confirmation that we would like to improve performance here in the future. It's definitely something we will take a look at for future Foundry VTT updates. |

|

I totally understand not supporting any modifications to server code. I wouldn’t want to support that if I were in your shoes. Like some other folks have said, I was curious if this approach would actually improve performance so that I could chime in here with something that works on the off chance it would be helpful. I wouldn’t recommend anyone inexperienced in programming try any of this, and only undertook it myself as a professional. For what it’s worth I’m running into this issue with a group based in the US, some of whom have fiber connections. I ended up at this GitHub issue because of how long the load times have gotten as our campaign goes on, and some professional curiosity. Glad to hear it’s still being considered. |

Sadly adding only perMessageDeflate did not help. The headers are now set if you look at the socket but the data does not get compressed. I did fully reboot the Foundry server. |

I can confirm that adding the |

May I ask in which file? |

|

Instead of using a timer you can also use your browsers' network debugging tools (F12). They can tell you how much data was transferred. Firefox is more useful than Chrome in this regard. Unfortunately the websocket transfer is never marked as "finished", which makes it a bit harder to analyze. |

Thanks. ;-) |

|

I feel like client-side caching is almost more essential than compression. For context one of my players has really bad internet and so it can take them minutes to load into a world that takes me a couple of seconds to load into. Here's what I mean by that: running This may be a second reason to suggest sending world data through http, allowing the server to begin responding with a 304 after the first load. Of course if caching through the websocket were implemented I would not mind as the end result would be the same but 304s have out of the box support for stuff I presume will be useful like etags[3] which should more easily allow updating if required (e.g. enabling/disabling modules/packs). Though unfortunately if I had to guess there might be some architectural work involved. Specifically I think you would have to split out the portions of world data that can frequently change from that which is basically never going to change. Packs and modules and the like seem to take up the majority of the space in my world data and I don't expect that to change often. I would just like to note that my own load takes about a dozen seconds despite having about 400 Mbps download according to speedtest.net and other sites. My server is hosted on Oracle with these specs, the instance metrics don't seem to indicate that there's anything being bottlenecked (e.g. cpu etc.) and I've transferred GBs of files onto and out of the server before fairly quickly. This would indicate to me that there's some other factor that may be slow, maybe vendoring on the server side but I don't have a super clear picture on that. I can open this as another issue if this would help tracking but I just happened to be debugging these issues recently and stumbled upon this issue and noticed it's getting added to the V11 Prototype 2. [1] I mention gzip, specifically the deflate compression algorithm as it is by far the most popular option on the web. 
Brotli shaves off ~31.8% of the size in comparison but may be less supported. |
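As a rough illustration of the 304/ETag flow mentioned above, the decision logic could look like the sketch below. It is not taken from Foundry: `computeEtag` and `respondWithCache` are hypothetical helpers, the ETag is simply a hash of the body, and only an exact single-value `If-None-Match` comparison is handled.

```javascript
const crypto = require("crypto");

// Derive an ETag from the response body (quoted, per HTTP convention).
function computeEtag(body) {
  const hash = crypto.createHash("sha256").update(body).digest("hex");
  return `"${hash}"`;
}

// Decide between 200 with the full body and 304 with an empty one.
// `requestHeaders` uses lowercase keys, as Node's http module provides them.
function respondWithCache(requestHeaders, body) {
  const etag = computeEtag(body);
  if (requestHeaders["if-none-match"] === etag) {
    return { status: 304, headers: { ETag: etag }, body: "" };
  }
  // "no-cache" lets the browser store the body but forces revalidation.
  return { status: 200, headers: { ETag: etag, "Cache-Control": "no-cache" }, body };
}

// Hypothetical static portion of the world data (packs, modules).
const staticData = JSON.stringify({ packs: ["bestiary", "spells"] });
const first = respondWithCache({}, staticData);
const second = respondWithCache({ "if-none-match": first.headers.ETag }, staticData);
console.log(first.status, second.status);
```

Once any part of the hashed data changes, the ETag changes and the next request gets a full 200 again, which is why splitting rarely changing data from frequently changing data matters.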

|

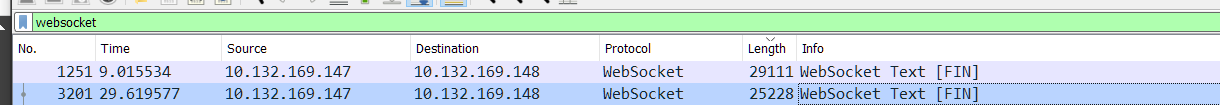

I'm gonna blame @LukeAbby for nerd-sniping me earlier this week by linking me to this issue (and messing with my sleep and time off), and @aaclayton can thank him instead! As I hadn't realized that the websocket data was uncompressed, I've decided to experiment and see if we could achieve that for The Forge, since we're already acting as a middleman/proxy between the user and the Foundry server and we have gzip compression enabled for other HTTP requests via our load balancer.

Loading speeds

I tested a small and a large world, with my normal (fast) internet (500 Mbps fiber, about 10ms latency) and using chrome network throttling to fast 3g (which is 1.44Mbps with 500ms latency).

So we can see a small decrease in performance for users with very fast connections probably due to the compression/decompression taking more time than what it would have taken to transfer that uncompressed data over the network. There's also a not-insignificant hit on the performance due to my own server having both the socket.io server and client stacks running instead of being a direct socket passthrough.

Which does confirm that there is some small slowdown with deflate on for users with a very good connection, but not as bad as it originally seemed. If the option was in Foundry itself, the difference would be very small indeed. The speed advantages on a slow connection, however, are definitely not insignificant! And I would expect the larger the world and slower the internet connection, the more of an impact it will have of course. For many users, loading time going from 5 minutes to 2 minutes could make a real difference in their ability to play.

Bandwidth usage

I've also tested bandwidth usage (though not on those 2 worlds in production, I only had a 19MB world to test with on my dev environment, and I could only check for packet sizes by capturing network packets in an unencrypted local env):

I've also done testing with replacing the client side socket.io library with one that uses the

So, my 19.6MiB world became 16.8MiB with msgpack, but they were both equal at 2.4MiB in size with the deflate option enabled. While msgpack did provide a lower world size, it's relatively insignificant compared to the deflate option and therefore, msgpack or alternative encodings are likely not worth pursuing, as long as we have deflate instead.

Memory leak concerns

Now, I haven't yet looked into the specific consequences of CPU and RAM usage on enabling the option on my servers, but I was concerned by @aaclayton's claim of memory leaks from that option and I've investigated this thoroughly. Once I have some actual data from real-world testing, I'll be able to report back on the effects of enabling the option, however, I can already guess that it is perfectly safe to use the option without any negative impact on RAM usage or server performance.

I've read through most of those issues, and here's what I found:

Conclusion

In conclusion... enabling deflate significantly reduces the amount of traffic used and significantly decreases the time to load the world. It may or may not add a small overhead that wouldn't be particularly noticeable for users with a very fast connection or on localhost. I hope this research was helpful to you. |

|

Thanks for the hard work and sharing it with the community. One could argue that the transfer of data is one of Foundry's main jobs, so this should not be postponed forever. And as far as I understood your timing tests were done for a single connection, so multiple users connecting (or reloading) at the same time may show even more impact. Fog of war improvements will also play into this as even a single map's fow can be of considerable size. |

|

@kakaroto thanks for sharing your findings. We do have this issue scoped for our current Prototype 2 milestone, so we'll investigate on our end and likely make this change. |

|

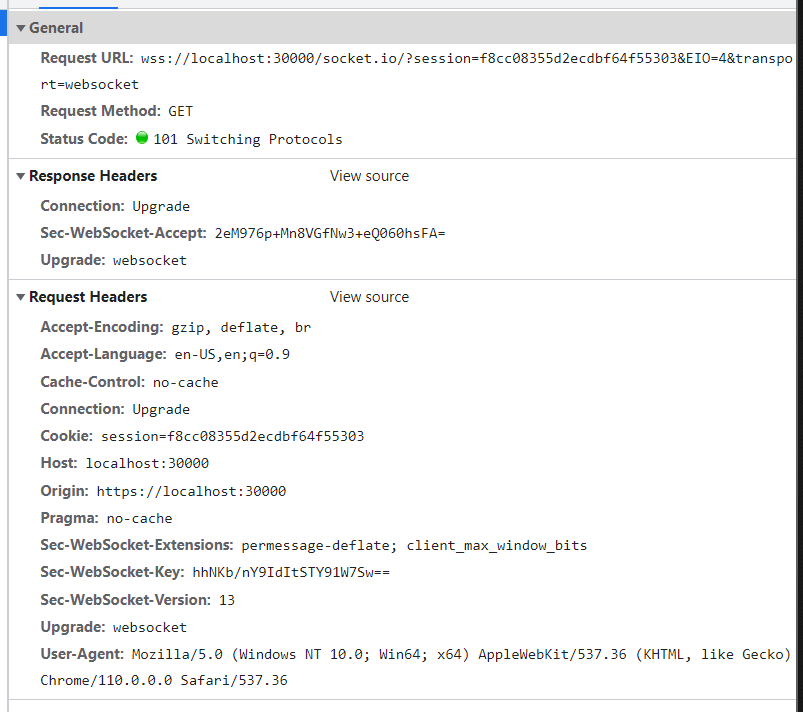

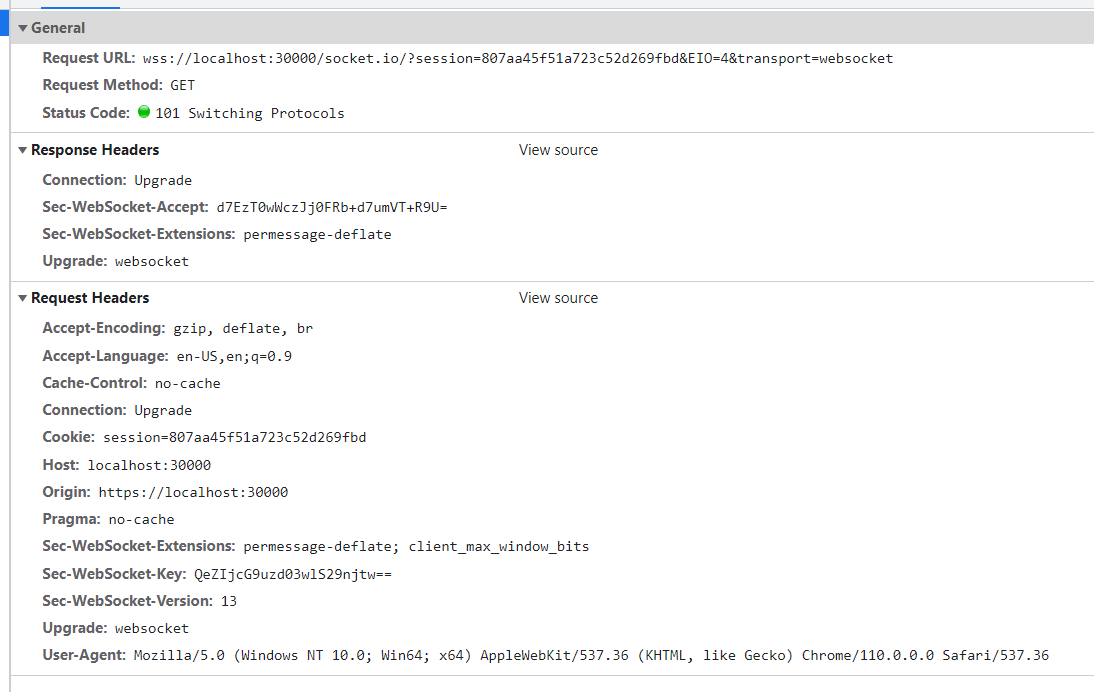

I spent some time today working on this and encountered frustration in my inability to verify the benefit of these proposed changes. I'm going to outline my findings. Perhaps @kakaroto or @esb, who implemented changes and measured a difference, might have perspectives on why I'm not observing any benefit.

Test Setup

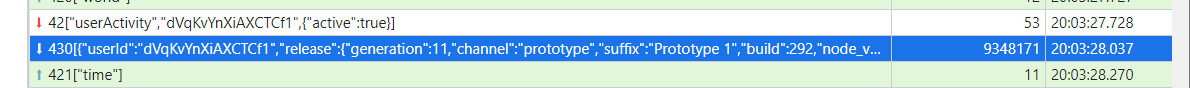

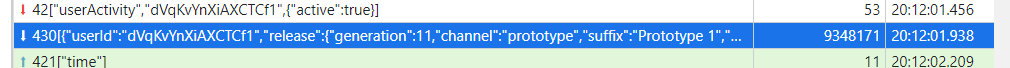

On the client side, On the server-side, the Deflate Off scenario is

Scenario 1 - Deflate Off

The first test is the status-quo scenario of

Headers

You see from the websocket headers that the client requests

Data Transfer

This scenario transferred 3.4MB of data. The world data payload was the following message: Using Firefox dev tools instead reveals that 9.51MB of data was received:

Scenario 2 - Deflate On

Now I repeat the same test with server-side

Headers

We can now see from the headers that both the client and server request

Data Transfer

Chrome reports the exact same amount of data transfer, 3.4MB although there is a small difference in speed. The world data payload was the following message and is the same length as before: Using Firefox dev tools also reports the same 9.51MB of data received:

Open Questions

|

|

Only thing I can think of from the presented info to be missing is |

I think that if you use the devtools to check the size, you won't see any difference, because those are the sizes after decompression: the message is and will always be 9348171 bytes, but the amount of data actually transferred over the network will be different. For the websocket, Chrome will only show the size of the parsed data, not of what is transferred over the network. The only way I found to actually check for those sizes is by using Wireshark/tcpdump and analyzing the actual websocket message data. The only time I alluded to it though in my long post from before was in this line:

Basically, use non-ssl to be able to capture the content unencrypted, use Wireshark or tcpdump to dump the network packets (then open it in Wireshark) as you refresh the page.

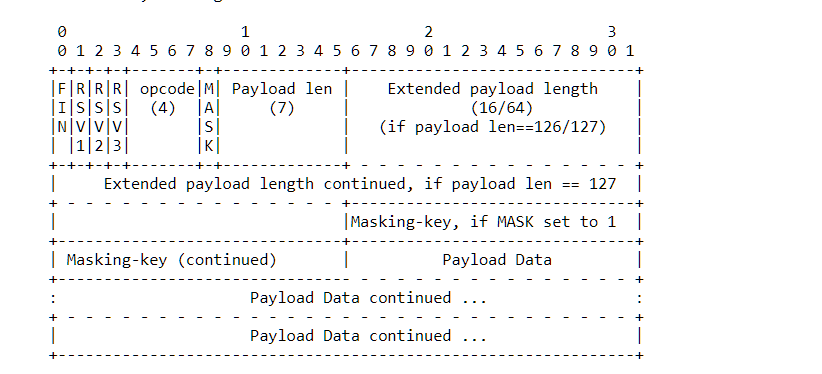

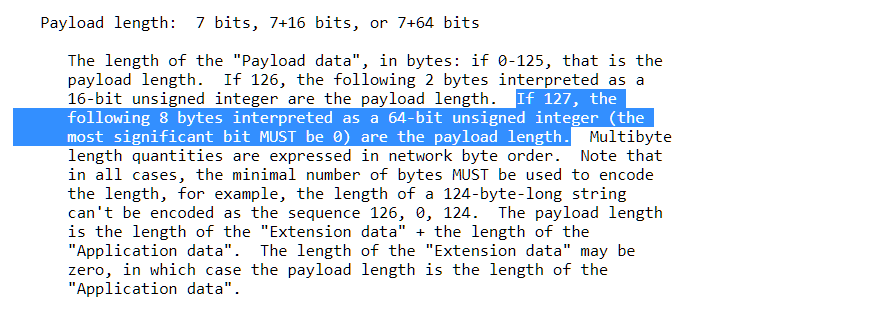

At this point, you can probably just check the size of the conversation as a whole to get an estimate of how much data was transferred: So what you can do to see the actual size of the compressed world, which is what I used in my report above, is that you can analyze the packets being sent/received and look for the size in their binary header. That's what Chrome/Firefox does as it decodes those packets and only shows the content itself, and since the deflate protocol is part of the protocol itself, it happens at an OSI layer above the application layer. You can use the websockets RFC to understand each field, more specifically the chapter 5 Data Framing: https://www.rfc-editor.org/rfc/rfc6455#section-5 Here's a good sample of the data, which shows 5 messages being sent and received. Red is sent by the client to the server and blue is what we received from the server. You can see that the client is always sending compressed data, even for small packets, but Foundry server is sending a mix of compressed and uncompressed data, likely due to the default 1024-byte threshold for the deflate option on the server. The next byte is 0x84 which is:

Compared now with the packet with deflate disabled (same world) You'll notice how the size was indeed 19.6MB even though Wireshark said "Entire conversation (16MB)" and I remember seeing a

Same configuration, just make sure the websocket response has

Likely just the way to verify the size. I also had the same problem initially before I figured out chrome won't show me the numbers I was looking for. For your purposes, just looking at the size estimate from Wireshark's "Entire conversation" line can be enough to prove that the compression did occur and impact significantly the size transferred, but if you want an accurate read on the world size transferred, The TLDR of it is to check the first two bytes of the packet, if it's I hope this helps, and I hope this was as fun for you to read/follow as it was for me to discover and write it down :) |
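For anyone repeating the packet analysis, the header check described above can be sketched as a small parser. This follows the frame layout in RFC 6455 section 5.2 and the RSV1 "compressed" bit defined by the permessage-deflate extension (RFC 7692). `parseFrameHeader` is a hypothetical helper and the sample bytes are made up for illustration, not taken from the captures in this thread.

```javascript
// Parse the start of a WebSocket frame (RFC 6455 section 5.2).
function parseFrameHeader(buf) {
  const fin = (buf[0] & 0x80) !== 0;
  // RSV1 is set on the first frame of a permessage-deflate compressed message.
  const rsv1 = (buf[0] & 0x40) !== 0;
  const opcode = buf[0] & 0x0f; // 1 = text, 2 = binary
  const masked = (buf[1] & 0x80) !== 0; // client-to-server frames are masked
  let payloadLength = buf[1] & 0x7f;
  let headerLength = 2;
  if (payloadLength === 126) {
    payloadLength = buf.readUInt16BE(2); // 16-bit extended length
    headerLength = 4;
  } else if (payloadLength === 127) {
    payloadLength = Number(buf.readBigUInt64BE(2)); // 64-bit extended length
    headerLength = 10;
  }
  if (masked) headerLength += 4; // 4-byte masking key follows the length
  return { fin, rsv1, opcode, masked, payloadLength, headerLength };
}

// Made-up server frame: FIN + RSV1 + text opcode, 64-bit length of 2,400,000.
const serverFrame = Buffer.from([0xc1, 0x7f, 0, 0, 0, 0, 0, 0x24, 0x9f, 0x00]);
console.log(parseFrameHeader(serverFrame));
```

The `payloadLength` of a frame with `rsv1` set is the compressed size on the wire, which is the number the browser dev tools do not show.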

|

Thanks for the in-depth response @kakaroto - it sounds like the TL;DR is that the data is getting compressed as intended with I'll include this change in V11P2 and see how it fares in the wild. |

|

No problem, and yep, that's the TL;DR :) But I figured a more in-depth response was needed as you likely wanted to verify the claim that it had an actual effect. As for the slightly slower load times, I actually have an update on that. I've implemented a custom parser for the websocket which doesn't unpack/repack the data to avoid doing a JSON.parse/JSON.stringify needlessly, and the result is that the large world which was loading in 4 seconds and became 5.7 seconds with the deflate option, it dropped it to 4.4 seconds, which is not as bad. I would bet that the slowdown would be completely insignificant if it's implemented directly within Foundry itself, and most of my added latency is because of the socket.io server+client stack that I have to put in the middle of the connection, which isn't a problem for Foundry's server itself. Glad to see this included in v11p2, I can't wait to test it out natively! |

|

I did a quick test using a PF2 Abomination Fault server to load websocket data with and without compression, measuring via a TCP packet sniffer on my local ethernet port. The data is compressed manyfold now, which should help whenever multiple players connect at the same time or everyone is forced to do a reload. Thanks for the implementation! |

Originally in GitLab by @manuelVo

Feature Summary

Compress the world data before transmission, to reduce the loading time of foundry.

Priority/Importance

The world data can get quite big rather quickly. For example my pathfinder 1 world with ~70 actors is already over 10 MB in size. This is (at least in part) because feats, spells and classes contain lengthy description texts. Additionally, JSON as a text format is rather wasteful in space usage. Since foundry needs to do a blocking wait for this data, the transmission time of the world data is fully added to the loading time, which can make foundry slow to load over slower connections (especially when self-hosting, where it's not unusual to have upload speeds that don't exceed 1 MB/s; distributing this world to 6 other players would take 1 minute - furthermore this cannot be mitigated via a CDN because it's transmitted through the websocket). Compression can significantly reduce the amount of data that needs to be transmitted (the world data from above takes under 2 MB when it's compressed), thus improving loading times (our previously mentioned slow connection would only take 12 seconds to transmit the data to all players instead of a minute).
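The arithmetic behind those figures is simple enough to write down. In this small sketch, `totalTransferSeconds` is a hypothetical helper that assumes all players share one uplink and ignores latency and protocol overhead.

```javascript
// Seconds needed to send one payload to every player over a shared uplink.
function totalTransferSeconds(payloadMB, players, uploadMBPerSec) {
  return (payloadMB * players) / uploadMBPerSec;
}

// Figures from the paragraph above: 6 players, 1 MB/s upload.
const uncompressed = totalTransferSeconds(10, 6, 1); // 10 MB world
const compressed = totalTransferSeconds(2, 6, 1); // about 2 MB once compressed
console.log(uncompressed, compressed); // 60 12
```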

Since compression libraries for JS are readily available (maybe socket.io even supports this natively already) this feature should be fairly simple to implement.

Edit: Compressing templates could be worthwhile as well, though that won't be as impactful as compressing the world data.
