-

-

Notifications

You must be signed in to change notification settings - Fork 3.2k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Adding Option to Customize Parameters when Launching & Changing Kernels #9374

Comments

|

Moving #9528 here per @echarles @jasongrout

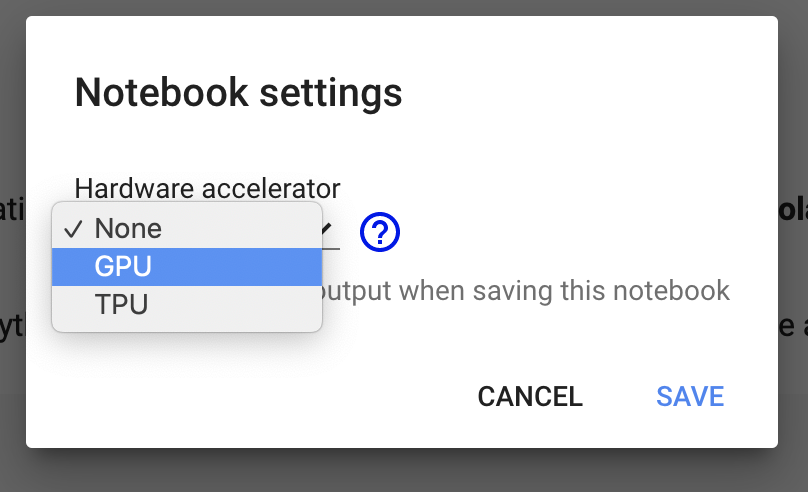

A generalized google hardware accelerator-like popup would be awesome. |

|

cc @kevin-bates for how this might impact jupyter/jupyter_client#608 |

|

Thanks for the copy @blink1073. By all means, Kernel Environment Provisioning will allow for the specification of parameters. However, I'd prefer we move away from EG's stop-gap of using Since only the environment provisioner knows what parameters it can support, I'd prefer we adopt a proposal similar to Parameterized Kernel Launch. This proposal had been based on the "Kernel Providers" proposal and should be updated/re-submitted relative to environment provisioners (if that gets adopted within In that proposal, the kernelspec will contain a schema consisting of the supported parameters (i.e., capabilities). This schema should be rich enough for consumers to produce sufficient dialogs for specifying parameters. The REST API would then need to be plumbed to take the specified parameters - although this should just be a matter of adding the What seems to be missing in this issue is the ability to determine the capabilities of the target kernel. For example, how does the front-end know that this particular kernel can support additional CPUs? And, in the absence of any kind of provisioner mechanism, what interprets the meaning of |

|

@kevin-bates, I was pointed to you as I am currently working with @Carreau on a JupyterLab Extension to manage iPython Kernels. I presented it at last week's Jupyter Developer meeting, and got some feedback regarding the flow and use cases. In any case, I will be sending an email so that we can have a video chat to update where this work is at - and then we can update this issue accordingly! |

|

I recently started doing some experimentation of my own with dynamically configurable kernels. It's nice to see that there are other folks who are starting to see the possibilities.

@kevin-bates +100 for the suggestion of using something JSON schema based. Strong typing, good support for constraints and/or validation, and a widely known syntax/semantics that we don't have to design and implement ourselves all seem like huge wins to me. It's sad to see that this proposal has been languishing since 2019. A good implementation would have probably saved me weeks worth of work I spent last year on figuring out how to hack a poor implementation into enterprise-gateway. |

|

Thanks Max - it's good to see parameterization gaining some traction. For parameterization to be useful, I believe we need a mechanism like kernel provisioners - which are responsible for publishing at least a portion of the parameters - with the others being kernel-specific. So you'd essentially have two sets of schema returned from the |

|

@telamonian I am getting ready to release a beta of the KernelSpec Manager Extension later today. I have a small bug that I need to fix while setting environment variables - the UI uses json schema to generate the components, and I need to fix the corresponding schema for a python dict (the typings are a little off). I'll leave a link to the repo here, and I plan to update it this weekend, after releasing a beta sometime between today/tomorrow. |

@kevin-bates Those are my thoughts exactly. What I really want to see is some concept of composability (along the lines of react's higher-order-components) and/or reusability (hierarchical merge of kernelspecs, actual inheritance, etc) brought into the kernel specification space. It sounds like your current plans for a provisioner that (in one way or another) "wraps" the kernel execution specified by a kernelspec sounds like a great example of composable parts. I would like to know more about the details about the implementation of providers, and about exactly how they can interact with kernelspecs. I can kind of see two different possible models:

I definitely favor the hook-based provisioner, but with all of your very complex In any case, I'll take a look at your ongoing work on jupyter/jupyter_client#612. It's great to see that the implementation of your Kerenel Provisioning proposal is coming along |

|

@anirrudh I'll definitely also be taking a look at ksmm. Being able to select the machine in the UI alongside the kernel type is something I've wanted to see realized for a while |

|

https://github.com/Quansight/ksmm is still WIP, but I would like anticipating some important aspects to move forward. The current POC is developed with React.js and pulls

|

|

This will be a very useful feature. |

|

Is there a latest update on this issue? We also have a similar usecase and this would be an extremely useful feature to us |

Problem

We were using Jupyter Enterprise Gateway to spawn remote kernels. It would be great if JupyterLab could expose an extra text field in Launcher & Choose Kernel form to let users specify additional arguments for the kernel (e.g. the CPU, RAM & GPU resources they need). And the options in this field will be passed together in the request body of API call to

/api/kernels.As far as I know, the Jupyter Enterprise Gateway has already been configured to take in any

KERNEL_*parameters in the request body. (See https://gitter.im/jupyter/enterprise_gateway?at=5f9c2a1ed5a5a635f28e9645)Proposed Solution

KERNEL_EXTRA_CONFIGto request body when calling/api/kernels,and turning them intoKERNEL_NUM_GPU,KERNEL_CPUandKERNEL_RAMin request bodyAdditional context

Similar feature in Google Colab to specify resources:

Thank you

The text was updated successfully, but these errors were encountered: