New Demo of Networked-Aframe with MediaPipe Self-Segmentation #296

Comments

Nice! Do you use VP9 or VP8 (in WebRTC) for the transparent video?

This is a cool scene, @jimver04! Tell me if I'm wrong: your demo currently uses a custom A-Frame build that stops the camera video track and adds a new video track coming from …

@jimver04 The multi-streams example is now merged. If you can adapt your demo to use the new API and give feedback on it, that would be awesome.

https://webrtchacks.com/how-to-make-virtual-backgrounds-transparent-in-webrtc/ is an implementation similar to yours.

@vincentfretin Yes, we have copied it from the same MediaPipe library 👍
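
For readers new to the technique: as I understand it, both implementations turn MediaPipe's per-pixel segmentation mask into transparency. The sketch below covers only that local step and rests on assumptions: the frame is RGBA bytes laid out like `ImageData.data`, the mask holds one person-confidence value in [0, 1] per pixel, and a hard threshold (0.5 by default) is used. `applyMaskAsAlpha` is a hypothetical helper, not MediaPipe's API and not the demo's code. As far as I know, WebRTC video is sent without an alpha channel, so delivering the transparency to remote peers needs a further workaround, which relates to the VP8/VP9 question at the top of the thread.

```typescript
// Hypothetical helper: use a per-pixel person mask as the alpha channel
// of an RGBA frame so that background pixels become fully transparent.
//   rgba: 4 bytes per pixel (R, G, B, A), same layout as ImageData.data
//   mask: one confidence value in [0, 1] per pixel (1 = person)
function applyMaskAsAlpha(
  rgba: Uint8ClampedArray,
  mask: Float32Array,
  threshold = 0.5,
): Uint8ClampedArray {
  if (rgba.length !== mask.length * 4) {
    throw new Error("rgba must contain exactly 4 bytes per mask value");
  }
  // Work on a copy so the source frame can still be drawn elsewhere.
  const out = new Uint8ClampedArray(rgba);
  for (let i = 0; i < mask.length; i++) {
    // Hard cutoff: person pixels keep their original alpha,
    // background pixels get alpha 0 (transparent).
    if (mask[i] < threshold) {
      out[i * 4 + 3] = 0;
    }
  }
  return out;
}
```

In a page, the result could be wrapped with `new ImageData(out, width, height)` and drawn with `putImageData`. A hard threshold gives jagged edges; using the confidence itself as alpha (`Math.round(mask[i] * 255)`) is a common alternative for softer outlines.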

Sorry for not replying earlier; I was busy with another project in UE4. The steps are as follows:

Note that I have stopped the original video stream to release some bandwidth.
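
One way to send only the segmented video instead of the raw camera feed is to swap the track on the existing WebRTC sender rather than adding a second one. This is a sketch under assumptions, not the demo's actual code: it uses the standard `RTCRtpSender.replaceTrack()` through two minimal structural interfaces (`TrackLike` and `SenderLike`, hypothetical names) so the logic can be exercised without a browser. Real `MediaStreamTrack` and `RTCRtpSender` objects should satisfy these shapes.

```typescript
// Hypothetical minimal shapes for MediaStreamTrack and RTCRtpSender.
interface TrackLike {
  readonly kind: string;
}

interface SenderLike {
  readonly track: TrackLike | null;
  replaceTrack(track: TrackLike | null): Promise<void>;
}

// Swap the outgoing camera video for the processed (segmented) track on the
// first sender that carries video. replaceTrack normally needs no SDP
// renegotiation, and the raw camera frames are no longer sent.
// The previous track is returned rather than stopped because (assumption)
// it may still be feeding the segmentation model locally.
async function sendProcessedVideo(
  senders: SenderLike[],
  processed: TrackLike,
): Promise<TrackLike | null> {
  if (processed.kind !== "video") {
    throw new Error("processed track must be a video track");
  }
  const videoSender = senders.find((s) => s.track?.kind === "video");
  if (!videoSender) {
    throw new Error("no video sender found");
  }
  const previous = videoSender.track;
  await videoSender.replaceTrack(processed);
  return previous;
}
```

In a page this might be called as `sendProcessedVideo(pc.getSenders(), canvas.captureStream(30).getVideoTracks()[0])`, where `pc` and `canvas` are placeholders for your own peer connection and output canvas. How to reach the peer connection depends on the Networked-Aframe adapter in use, which this sketch does not cover.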

Now I see that you have in basic-multi-streams.html

I will try to exploit that to make

PS: I always get the same stream inside the other one: I cannot send two different streams by pressing "Share Screen". Is this a bug? Then, once I have made sure that (1) works, I will do

Hi, no worries. Thanks for getting back to it.

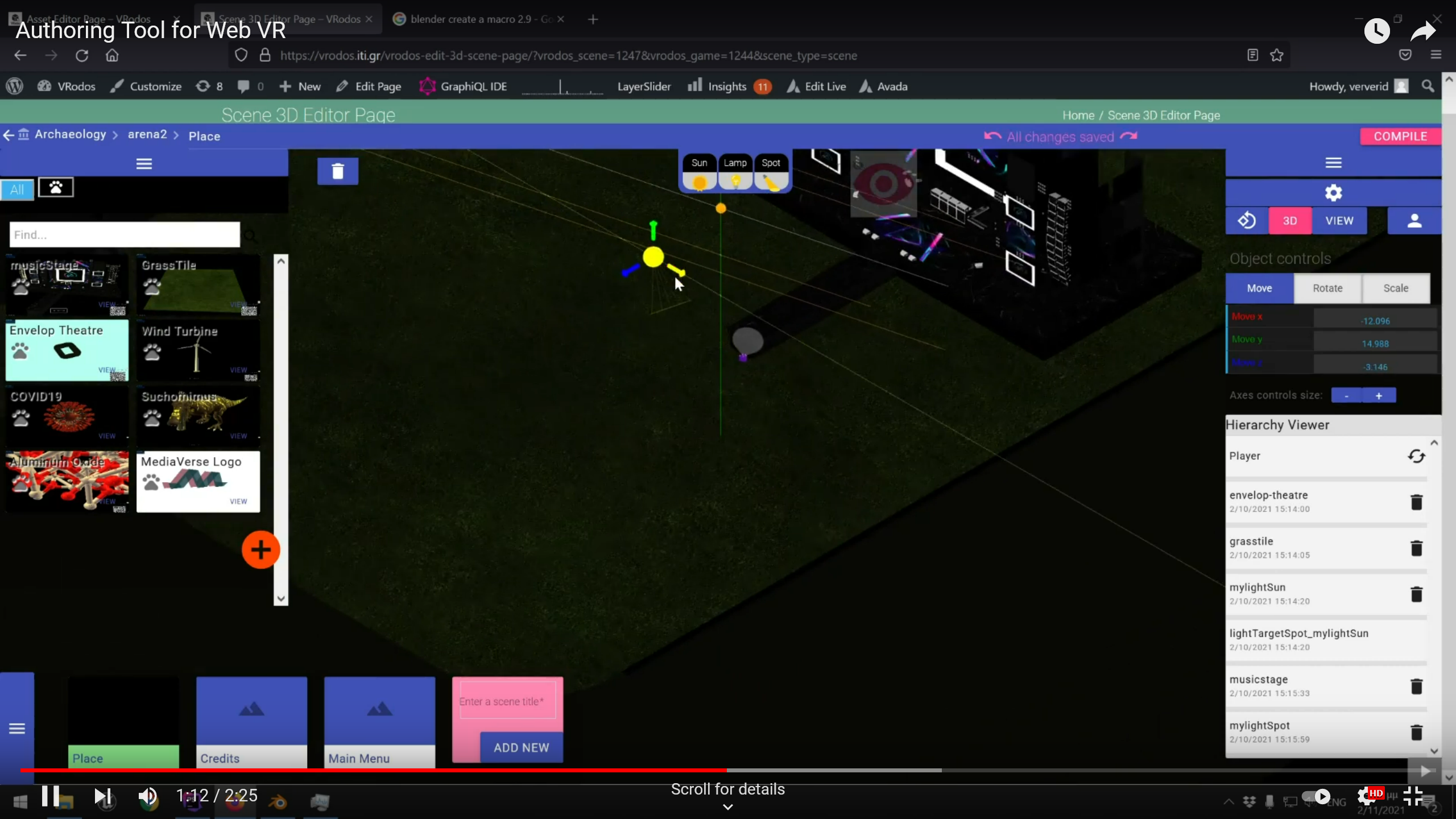

There is no place for demos, so I am adding it here!

We have made a stage for live concerts using Networked-Aframe and MediaPipe.

Video

https://www.youtube.com/watch?v=2d8P70C7N_4

The demo link for live testing is in the video's comments. It is currently in beta. Since MediaPipe is sometimes glitchy, you may need to refresh the page in Firefox or Chrome. Use a PC, laptop, or tablet; on mobile (Chrome only) there may be issues.

The text was updated successfully, but these errors were encountered: