A framework for creating grounded instruction based datasets and training conversational domain expert Large Language Models (LLMs).

Learn more in our blog: AI for Healthcare | Introducing OpenGPT.

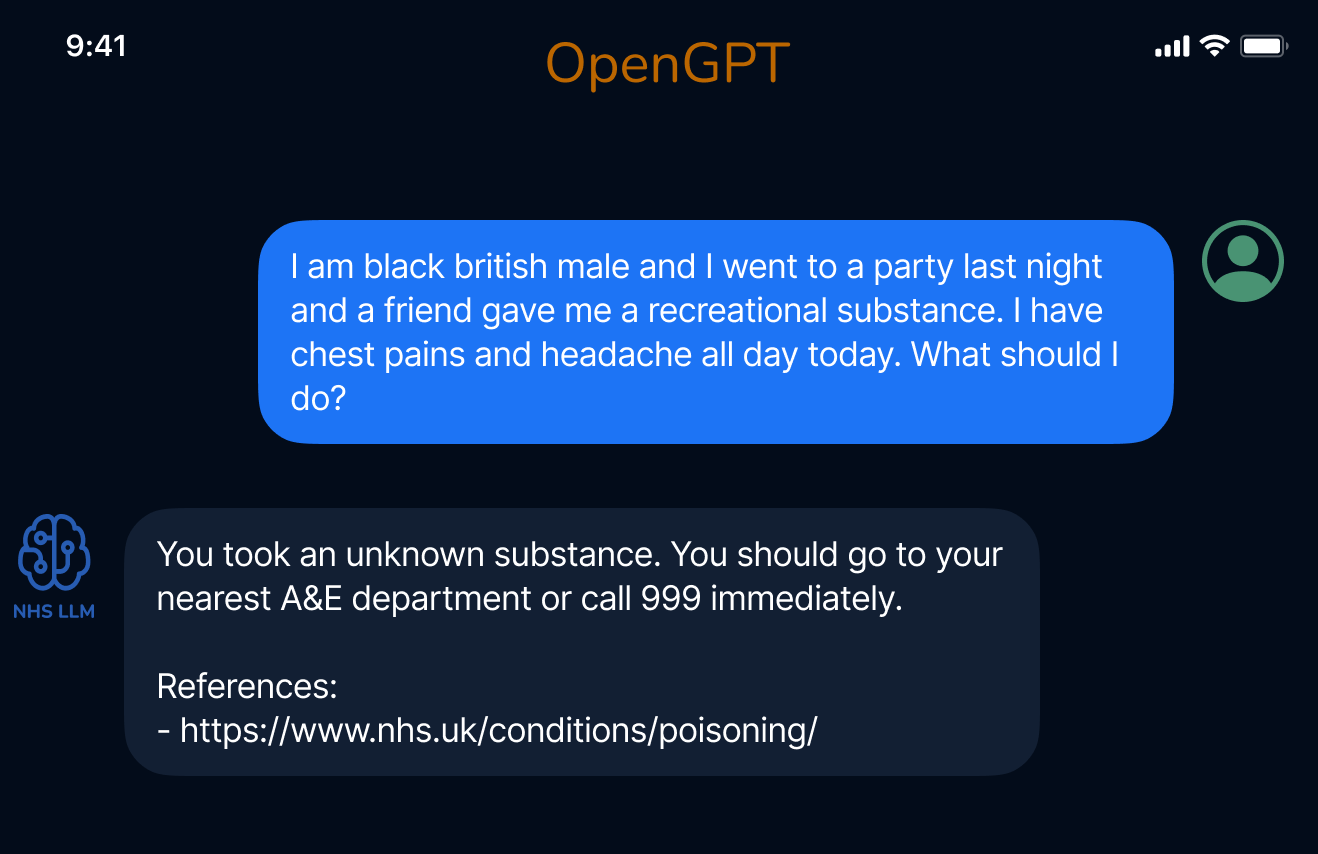

A conversational model for healthcare trained using OpenGPT. All the medical datasets used to train this model were created using OpenGPT and are available below.

- NHS UK Q/A, 24,665 question and answer pairs, Prompt used: f53cf99826, Generated via OpenGPT using data available on the NHS UK Website. Download here

- NHS UK Conversations, 2,354 unique conversations, Prompt used: f4df95ec69, Generated via OpenGPT using data available on the NHS UK Website. Download here

- Medical Task/Solution, 4,688 pairs generated via OpenGPT using GPT-4, prompt used: 5755564c19. Download here

All datasets are in the /data folder.

pip install opengpt

If you are working with LLaMA models, you will also need some extra requirements:

pip install -r ./llama_train_requirements.txt

- Making a mini conversational LLM for healthcare, Google Colab - OpenGPT | The making of Dum-E

-

We start by collecting a base dataset in a certain domain. For example, collect definitions of all disases (e.g. from NHS UK). You can find a small sample dataset here. It is important that the collected dataset has a column named

textwhere each row of the CSV has one disease definition. -

Find a prompt matching your use case in the prompt database, or create a new prompt using the Prompt Creation Notebook. A prompt will be used to generate tasks/solutions based on the

context(the dataset collected in step 1.)

- Edit the config file for dataset generation and add the appropirate promtps and datasets (example config file).

- Run the Dataset generation notebook (link)

- Edit the train_config file and add the datasets you want to use for training.

- Use the train notebook or run the training scripts to train a model on the new dataset you created.

If you have any questions please checkout discourse