Improve batch append throughput at high loads #234

Merged

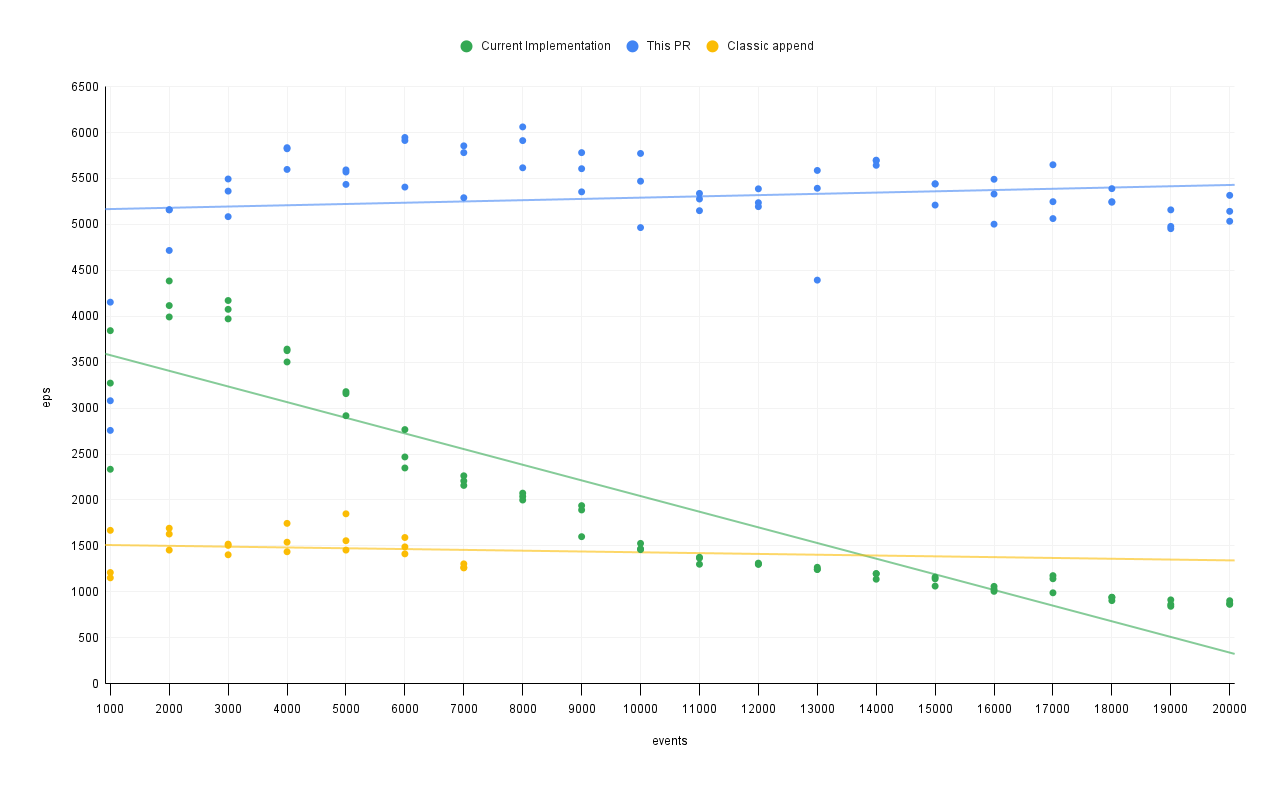

The current implementation of batch append loses throughput once more than 4000 batches are queued, because each batch registers its own event listener on the stream. This PR mitigates that by using a single event listener and a Map of promises, looking up the promise to resolve or reject from the Map.

Performance comparison:
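A minimal sketch of the single-listener approach described above, not the code from this PR. `FakeStream`, `BatchResponse`, `BatchAppender`, and the `correlationId` field are hypothetical names chosen for illustration; the real stream and response types will differ.

```typescript
// Hypothetical response shape: each response names the batch it answers.
interface BatchResponse {
  correlationId: string;
  error?: string;
}

type Listener = (res: BatchResponse) => void;

// Minimal stand-in for the stream (assumption: the real one is an event emitter).
class FakeStream {
  private listeners: Listener[] = [];
  on(listener: Listener): void {
    this.listeners.push(listener);
  }
  emit(res: BatchResponse): void {
    for (const listener of this.listeners) listener(res);
  }
  listenerCount(): number {
    return this.listeners.length;
  }
}

interface Pending {
  resolve: (res: BatchResponse) => void;
  reject: (err: Error) => void;
}

class BatchAppender {
  private pending = new Map<string, Pending>();
  private nextId = 0;

  constructor(stream: FakeStream) {
    // One listener for the whole stream, however many batches are queued.
    stream.on((res) => this.settle(res));
  }

  // Registers a batch and returns its id plus a promise for its outcome.
  append(): { id: string; result: Promise<BatchResponse> } {
    const id = String(this.nextId++);
    const result = new Promise<BatchResponse>((resolve, reject) => {
      this.pending.set(id, { resolve, reject });
    });
    return { id, result };
  }

  // Looks up the promise for this response in the Map and settles it.
  private settle(res: BatchResponse): void {
    const entry = this.pending.get(res.correlationId);
    if (!entry) return; // unknown or already settled batch
    this.pending.delete(res.correlationId);
    if (res.error) entry.reject(new Error(res.error));
    else entry.resolve(res);
  }

  pendingCount(): number {
    return this.pending.size;
  }
}
```

With this shape the listener count on the stream stays at one, and settling a batch is a single Map lookup rather than a fan-out to every queued batch's listener.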

(Please note: classic append begins to fail at around 8000 events due to #167, so those counts are omitted.)

Testing notes / explanation:

- … (`events`) is created.
- … (`eps`) is calculated by `(1000 * events) / duration`

Code
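As an illustration of the formula above (this is not the contents of `throughput.ts` or `burst.ts`), a small sketch of the events-per-second calculation. The function names `eventsPerSecond` and `measure` are hypothetical, and the duration is assumed to be in milliseconds, which is what the factor of 1000 suggests.

```typescript
// events: number of events appended.
// durationMs: elapsed time in milliseconds (assumed unit).
function eventsPerSecond(events: number, durationMs: number): number {
  if (durationMs <= 0) throw new RangeError("duration must be positive");
  return (1000 * events) / durationMs;
}

// Hypothetical timing wrapper: runs an async workload and reports its eps.
async function measure(events: number, run: () => Promise<void>): Promise<number> {
  const start = Date.now();
  await run();
  // Clamp to 1 ms so a very fast run does not divide by zero.
  const duration = Math.max(1, Date.now() - start);
  return eventsPerSecond(events, duration);
}
```

For example, 4000 events appended in 2000 ms gives `(1000 * 4000) / 2000`, which is 2000 events per second.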

throughput.ts:

burst.ts: