Principal Component Analysis (PCA) applied to images, and a Naive Bayes classifier used to classify them. Validation, cross-validation and grid search performed with multi-class Support-Vector Machines (SVMs).

This project shows what happens when different Principal Components (PCs) are chosen as the basis for image representation and classification. A Naive Bayes classifier is then applied to classify the images.

Additional information and a step-by-step explanation of the code are available in the PCA README.md.

Check out the documentation for a more in-depth explanation. A demo is also available on YouTube.

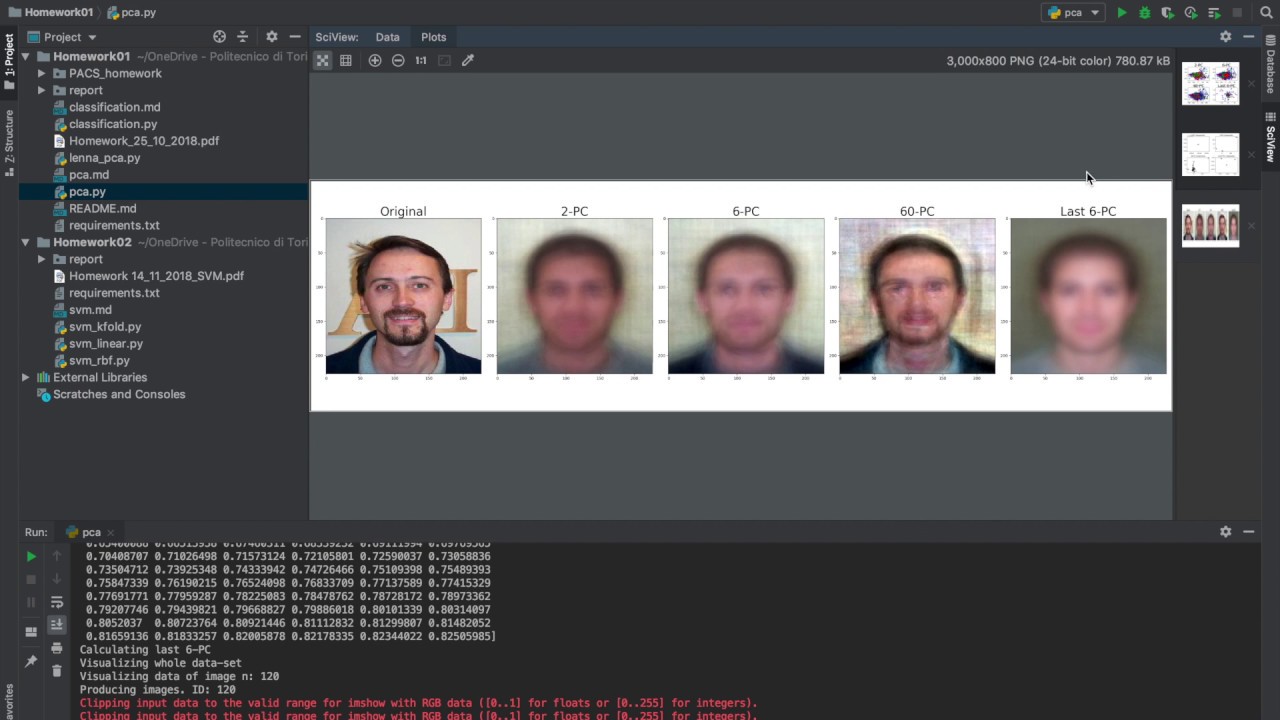

Example result of applying PCA to images: with 2 PCs and 6 PCs it is possible (with a little attention) to see the silhouette (shape) of a dog, while with 60 PCs the silhouette is much more evident. The more PCs are used, the easier it is to see the dog's silhouette. As expected, the last 6 PCs give a very poor result in which nothing can be recognized.
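
A minimal NumPy sketch of the idea behind the figure, assuming the images are flattened into the rows of a matrix `X` and that PCA is computed through an SVD of the centered data. The helper names `pca_fit` and `reconstruct` and the random data are hypothetical, not the project's code. Each image is projected onto a chosen subset of PCs and mapped back to pixel space; the reconstruction error shrinks as more leading PCs are kept and is large when only the last PCs are used.

```python
import numpy as np


def pca_fit(X):
    """Return the per-feature mean and the principal axes (rows) of X."""
    mean = X.mean(axis=0)
    # SVD of the centered data: the rows of Vt are the principal components,
    # ordered by decreasing explained variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt


def reconstruct(X, mean, Vt, components):
    """Project X onto the selected PCs and map it back to pixel space."""
    P = Vt[components]          # (k, n_pixels) basis made of the chosen PCs
    scores = (X - mean) @ P.T   # coordinates of each image in that basis
    return scores @ P + mean    # back to the original pixel space


# Hypothetical data: 100 flattened 32x32 grayscale images with random pixels.
rng = np.random.default_rng(0)
X = rng.random((100, 32 * 32))

mean, Vt = pca_fit(X)
n_pc = Vt.shape[0]
settings = {
    "first 2 PCs": slice(0, 2),
    "first 6 PCs": slice(0, 6),
    "first 60 PCs": slice(0, 60),
    "last 6 PCs": slice(n_pc - 6, n_pc),
}
errors = {}
for name, comps in settings.items():
    X_rec = reconstruct(X, mean, Vt, comps)
    errors[name] = np.mean((X - X_rec) ** 2)
    print(f"{name}: mean squared reconstruction error = {errors[name]:.4f}")
```

To display a result like the figure above, reshape a reconstructed row back to the image size, e.g. `X_rec[0].reshape(32, 32)`.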

Each color is a different class of subject: blue -> dogs, green -> guitars, red -> houses and yellow -> people. The more PCs are kept, the more information (variance of the data) they carry. The figure below shows how, through PC visualization, it is possible to distinguish the different classes.
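
The sketch below, again assuming NumPy and hypothetical synthetic data with four classes, computes how much of the total variance the first PCs retain and the 2-D coordinates of every sample on a chosen pair of PCs, which is what a colored scatter plot like the one described here displays. The function names are illustrative only.

```python
import numpy as np


def explained_variance_ratio(X):
    """Fraction of the total variance carried by each PC, in decreasing order."""
    s = np.linalg.svd(X - X.mean(axis=0), compute_uv=False)
    var = s ** 2
    return var / var.sum()


def project_on_pair(X, i, j):
    """2-D coordinates of every sample on principal components i and j (0-based)."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[[i, j]].T


# Hypothetical data: 4 classes (dog, guitar, house, person) of 50 samples each,
# with a different mean per class so that they separate in PC space.
rng = np.random.default_rng(1)
labels = np.repeat(np.arange(4), 50)
centers = rng.normal(scale=5.0, size=(4, 64))
X = centers[labels] + rng.normal(size=(200, 64))

cumulative = np.cumsum(explained_variance_ratio(X))
print("variance kept by the first 2, 6 and 60 PCs:", cumulative[[1, 5, 59]])

coords = project_on_pair(X, 0, 1)  # one scatter point per sample
colors = np.array(["blue", "green", "red", "yellow"])[labels]
```

The coordinates can then be drawn with Matplotlib, e.g. `plt.scatter(coords[:, 0], coords[:, 1], c=colors)`; choosing a different pair `(i, j)` shows how well later PCs separate the classes.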

Given an input observation, the classifier predicts a probability distribution over the set of classes, rather than only the most likely class the observation belongs to. After splitting the dataset into a training set and a test set, I applied the Naive Bayes classifier in several cases and checked the accuracy of each.
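
A minimal scikit-learn sketch of this workflow, assuming a Gaussian Naive Bayes model (`GaussianNB`) and that the "several cases" are different numbers of retained PCs; the project may use another Naive Bayes variant. The dataset generated with `make_classification` is a hypothetical stand-in for the flattened images. PCA is fitted on the training split only, so no information from the test set leaks into the features.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Hypothetical stand-in for the flattened images: 400 samples, 4 classes.
X, y = make_classification(n_samples=400, n_features=100, n_informative=20,
                           n_classes=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

accuracies = {}
for n_components in (2, 6, 60):
    # Fit PCA on the training set only, then reuse it on the test set.
    pca = PCA(n_components=n_components).fit(X_train)
    Z_train, Z_test = pca.transform(X_train), pca.transform(X_test)

    clf = GaussianNB().fit(Z_train, y_train)
    accuracies[n_components] = accuracy_score(y_test, clf.predict(Z_test))

# predict_proba returns a full distribution over the classes for each sample
# (here for the last model, trained on 60 PCs).
proba = clf.predict_proba(Z_test[:3])
print(accuracies)
print(proba.round(3))
```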

Additional information and a step-by-step explanation of the code are available in the Naive Bayes Classifier README.md.

Given a set of training examples, each marked as belonging to one of two categories, a Support-Vector Machine (SVM) training algorithm builds a model that assigns new examples to one category or the other, making it a non-probabilistic binary linear classifier.
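
Since this project has more than two classes, several binary SVMs have to be combined; scikit-learn's `SVC` does this internally with a one-vs-one scheme. The sketch below shows one way to run validation, 5-fold cross-validation and a grid search with scikit-learn, assuming a scaling + PCA + SVM pipeline; the parameter grid and the `make_classification` dataset are hypothetical, and the values used in the project may differ.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Hypothetical stand-in for the flattened images: 400 samples, 4 classes.
X, y = make_classification(n_samples=400, n_features=100, n_informative=20,
                           n_classes=4, random_state=0)
# Hold out a test set that the grid search never sees.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("pca", PCA(n_components=60)),
    ("svm", SVC()),  # multi-class handled internally with one-vs-one
])
param_grid = {
    "svm__kernel": ["linear", "rbf"],
    "svm__C": [0.1, 1, 10],
    "svm__gamma": ["scale", 0.01],  # ignored by the linear kernel
}
# 5-fold cross-validation on the training set for every grid combination.
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X_train, y_train)

print("best parameters:", search.best_params_)
print("mean cross-validation accuracy:", round(search.best_score_, 3))
print("test accuracy:", round(search.score(X_test, y_test), 3))
```

Keeping scaling and PCA inside the pipeline means they are refitted on each training fold, so the cross-validation scores are not inflated by the validation folds.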

Additional information and a step-by-step explanation of the code are available in the SVM README.md.

Check out the documentation for a more in-depth explanation. A demo is also available on YouTube.

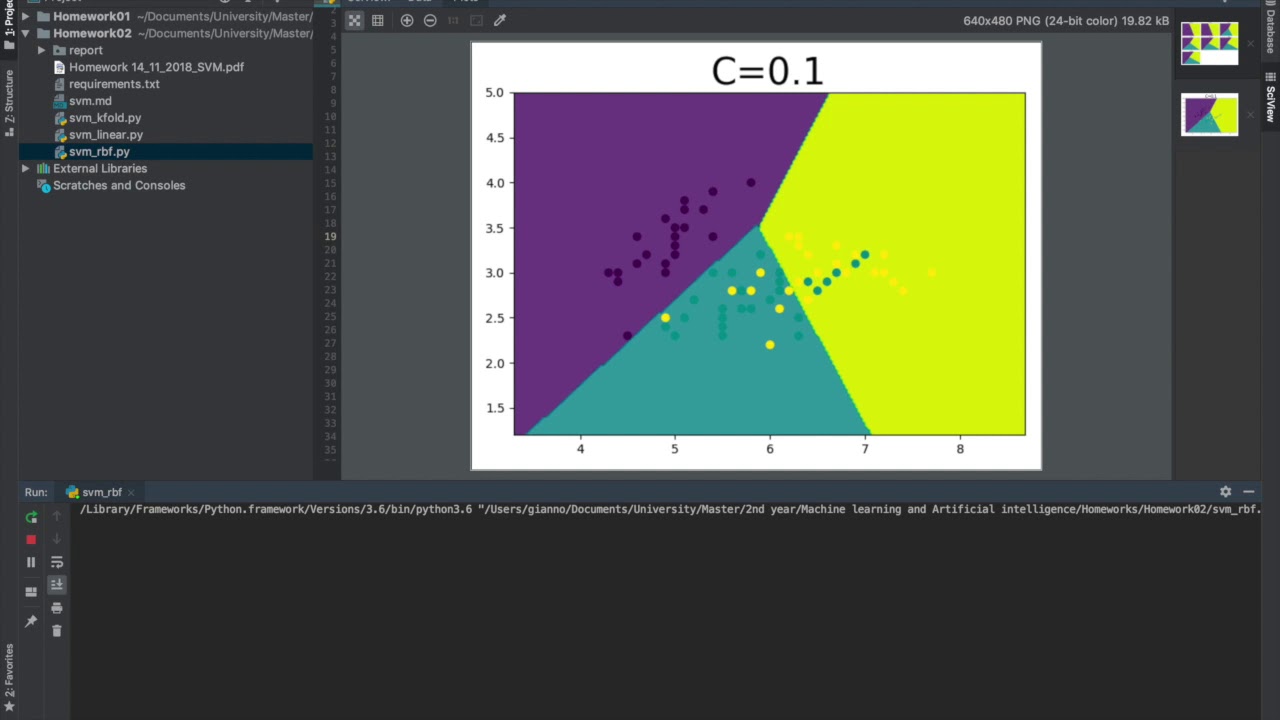

An example of two different graphs of data classification using the RBF (Radial Basis Function) kernel.
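
For reference, the RBF kernel measures the similarity of two points as `K(x, x') = exp(-gamma * ||x - x'||^2)`: it equals 1 for identical points and decays towards 0 with distance, and a larger `gamma` makes it decay faster, which typically produces more flexible decision boundaries. A small NumPy sketch computing the kernel matrix (the function name and the `gamma` default are illustrative; scikit-learn's `SVC` uses the same formula through its `gamma` parameter):

```python
import numpy as np


def rbf_kernel(A, B, gamma=0.5):
    """Kernel matrix with K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    # Squared Euclidean distances via ||a||^2 + ||b||^2 - 2 a.b
    sq_dists = (
        np.sum(A ** 2, axis=1)[:, None]
        + np.sum(B ** 2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


points = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 4.0]])
K = rbf_kernel(points, points, gamma=0.5)
print(K.round(4))
```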

Install the Python dependencies (note that each sub-project contains its own requirements.txt file) by running

`python -m pip install -r requirements.txt`

If scikit-learn shows the following warning

`DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses`

(raised by an `import imp` statement), check the file `cloudpickle.py` and delete the line

`import imp`