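Yuheng Liu<sup>1,2</sup>, Xinke Li<sup>3</sup>, Xueting Li<sup>4</sup>, Lu Qi<sup>5</sup>, Chongshou Li<sup>1</sup>, Ming-Hsuan Yang<sup>5,6</sup>

<sup>1</sup>Southwest Jiaotong University, <sup>2</sup>University of Leeds, <sup>3</sup>National University of Singapore, <sup>4</sup>NVIDIA, <sup>5</sup>The University of California, Merced, <sup>6</sup>Google Research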

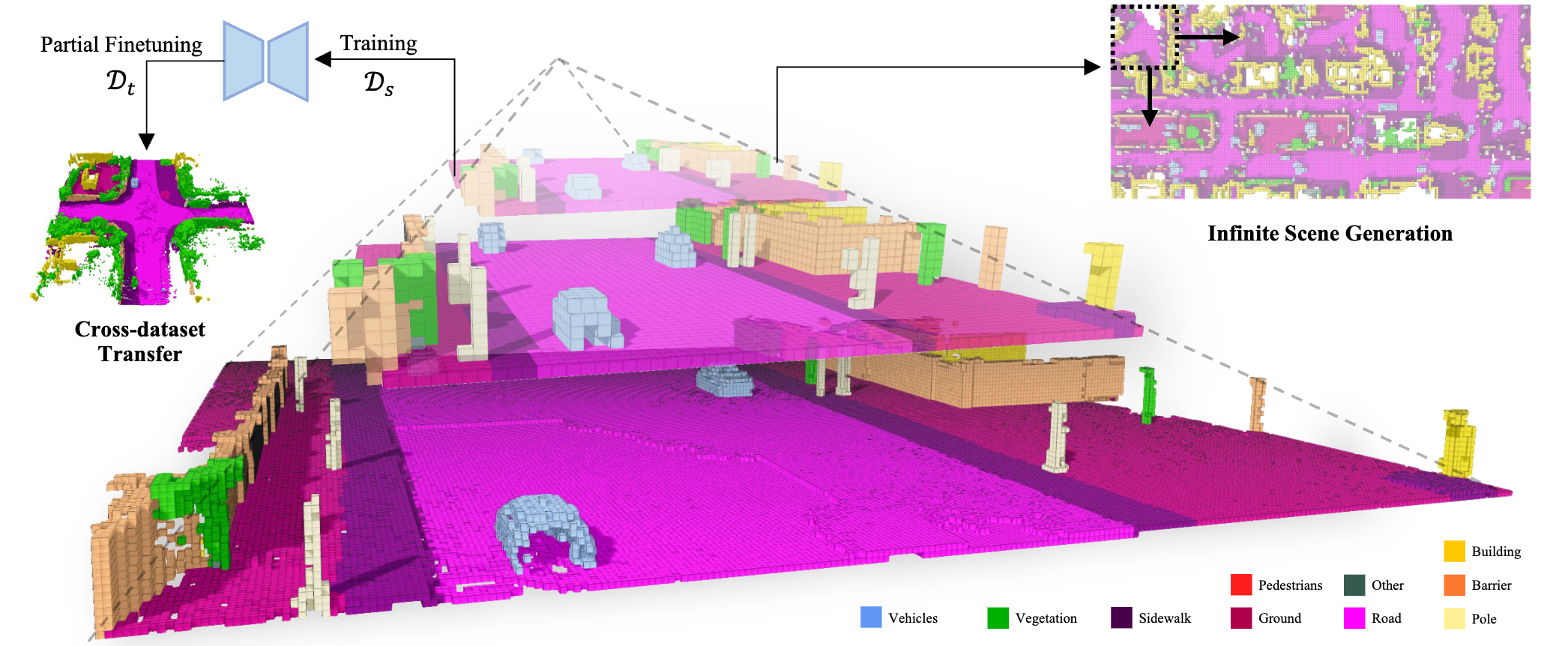

Diffusion models have shown remarkable results in generating 2D images and small-scale 3D objects. However, their application to the synthesis of large-scale 3D scenes remains largely unexplored. This is mainly due to the inherent complexity and large size of 3D scene data, particularly for outdoor scenes, and the limited availability of comprehensive real-world datasets, both of which make training a stable scene diffusion model challenging. In this work, we explore how to effectively generate large-scale 3D scenes using a coarse-to-fine paradigm. We introduce the Pyramid Discrete Diffusion model (PDD), a framework that employs diffusion models at varying scales to progressively generate high-quality outdoor scenes. Experimental results show that PDD successfully generates 3D scenes both unconditionally and conditionally. We further demonstrate the data compatibility of PDD, enabled by its multi-scale architecture: a PDD model trained on one dataset can be easily fine-tuned on another. The source code and trained models will be made publicly available.
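To make the coarse-to-fine idea concrete, here is a minimal, hypothetical sketch of how a pyramid of scale-specific generators could be chained over a 3D grid of semantic labels. It is not the PDD implementation: `upsample_nearest`, `make_toy_stage`, and `pyramid_generate` are illustrative names, the nearest-neighbour upsampling is an assumption, and each "stage" is a toy stand-in (keep-or-resample labels) rather than a trained discrete diffusion model. Only the control flow is meant to mirror the description above: sample the coarsest scene first, then let each finer stage refine the upsampled result of the previous one.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

# A 3D semantic scene: grid[x][y][z] holds an integer class label.
Grid = List[List[List[int]]]
# Hypothetical stage signature: (target shape, optional coarse condition, rng) -> grid.
Stage = Callable[[Tuple[int, int, int], Optional[Grid], random.Random], Grid]


def shape_of(grid: Grid) -> Tuple[int, int, int]:
    return (len(grid), len(grid[0]), len(grid[0][0]))


def upsample_nearest(grid: Grid, factor: int) -> Grid:
    """Nearest-neighbour upsampling: each voxel becomes a factor^3 block."""
    sx, sy, sz = shape_of(grid)
    return [[[grid[x // factor][y // factor][z // factor]
              for z in range(sz * factor)]
             for y in range(sy * factor)]
            for x in range(sx * factor)]


def make_toy_stage(num_classes: int, keep_prob: float) -> Stage:
    """Hypothetical stand-in for one trained discrete diffusion model.

    Without a condition it samples labels uniformly at random. With a coarse
    condition it keeps each upsampled label with probability keep_prob and
    otherwise resamples it uniformly. A real stage would instead run a learned
    reverse (denoising) process over discrete labels.
    """
    def sample_voxel(cond: Optional[Grid], x: int, y: int, z: int,
                     rng: random.Random) -> int:
        if cond is not None and rng.random() < keep_prob:
            return cond[x][y][z]
        return rng.randrange(num_classes)

    def stage(shape: Tuple[int, int, int], cond: Optional[Grid],
              rng: random.Random) -> Grid:
        sx, sy, sz = shape
        return [[[sample_voxel(cond, x, y, z, rng) for z in range(sz)]
                 for y in range(sy)]
                for x in range(sx)]

    return stage


def pyramid_generate(stages: Sequence[Stage], base_shape: Tuple[int, int, int],
                     factor: int, rng: random.Random) -> Grid:
    """Coarse-to-fine sampling: each stage refines the upsampled previous output."""
    grid = stages[0](base_shape, None, rng)  # coarsest scale: unconditional
    for stage in stages[1:]:
        cond = upsample_nearest(grid, factor)  # lift coarse scene to next scale
        grid = stage(shape_of(cond), cond, rng)
    return grid


rng = random.Random(0)
stages = [make_toy_stage(num_classes=4, keep_prob=0.9) for _ in range(3)]
scene = pyramid_generate(stages, base_shape=(2, 2, 2), factor=2, rng=rng)
print(shape_of(scene))  # (8, 8, 8)
```

With three stages and a factor of 2, a 2×2×2 coarse scene grows to 8×8×8. Because every stage only sees its own scale plus the coarser condition, stages are decoupled. That decoupling is one intuition for why a multi-scale design could ease fine-tuning on a new dataset, though this toy code does not demonstrate it.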

- [2023/11/22] Our work is now on arXiv.

- [2023/11/20] The official repository has been created; code will be released soon. See our Project Page for more details.