angular.io app gets stuck in “loading document” state, likely due to client-server version skew #28114

Comments

|

Having the server keep old chunks for previously deployed versions could be part of the solution for angular.io, but this can't be what Angular relies on by default, as this would greatly complicate deployment of apps to hosting services that expect to be given all static content at each deployment. Catching the error and providing a default message to end users that suggests reloading sounds pretty good. I'd add to that a hook, similar to the global handler, so devs can replace this message with their own handler code. |
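A minimal sketch of the "catch the error, show a default message, let devs override it" idea might look like the following. Everything here is hypothetical: `ChunkAwareErrorHandler`, `isChunkLoadError` and the hook type are illustrative names, not existing Angular APIs, and the detection heuristic assumes a webpack-style loader that rejects with an error named `ChunkLoadError` or a message like "Loading chunk N failed". The class deliberately has the same `handleError(error)` shape as Angular's `ErrorHandler`, so an app could adapt it into a provider.

```typescript
// Hypothetical names throughout; this is a sketch, not Angular's built-in behavior.
type ChunkErrorHook = (error: Error) => void;

// Heuristic (assumption): webpack-style loaders reject with an error named
// "ChunkLoadError" or with a message such as "Loading chunk 5 failed."
function isChunkLoadError(error: unknown): error is Error {
  if (!(error instanceof Error)) return false;
  return error.name === 'ChunkLoadError' || /Loading chunk \S+ failed/i.test(error.message);
}

class ChunkAwareErrorHandler {
  private onChunkError: ChunkErrorHook;
  private fallback: (error: unknown) => void;

  constructor(onChunkError?: ChunkErrorHook, fallback?: (error: unknown) => void) {
    // Default message shown when the app does not supply its own hook.
    this.onChunkError = onChunkError ??
      (() => console.warn('A new version of this app may be available. Please reload the page.'));
    // Everything that is not a chunk-load failure goes to the fallback.
    this.fallback = fallback ?? ((e) => console.error(e));
  }

  // Same shape as Angular's `ErrorHandler.handleError(error)`.
  handleError(error: unknown): void {
    // Errors from rejected promises may arrive wrapped; unwrap `rejection` if present.
    const inner = (error as { rejection?: unknown } | null | undefined)?.rejection ?? error;
    if (isChunkLoadError(inner)) {
      this.onChunkError(inner);
    } else {
      this.fallback(error);
    }
  }
}
```

An app that prefers a toast or a reload prompt would pass its own function as the first constructor argument; the default only logs a suggestion to reload.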

|

That is an interesting analysis, @IgorMinar. I agree that we should prompt the user with a better error. Also, once we land this PR (#28037), which retains the current scroll position on reload, it would be much safer to do automatic reloads. |

|

Interesting. Just an FYI, we have noticed a similar behavior with https://xlayers.app. This happens after each deployment. |

|

just my observations: Thanks for sharing this! |

I am not a big fan of auto-reloading, but +100 for doing a better job at notifying the user (right now the app gets stuck on a blank screen, which is 👎).

I suspect that the reason this one fails much more often than others is that basically all docs (i.e. non-marketing pages) require the As I've written elsewhere (see below), I still don't understand how this could be happening (although I may have some far-fetched theories), since the requested file is part of the eagerly cached

I have looked into these errors before and haven't been able to figure out why they are happening (or even how they are possible), because the SW should have the necessary files in its cache 😒 Copying my comments from Slack (not public):

|

|

Also had this issue and made a report here: #28243 (I didn't find this issue at the time) |

|

Still not able to reproduce this reliably, but here is some more info: This does not only happen when you have had a tab open for a while. Yesterday, @benlesh ran into it with no tab open, by navigating to a docs page via a Google search results link 😞 Has anyone seen this in any browser other than Chrome? |

|

Yesterday, I also got this error when following an external link to the "next api" section (I seldom visit that part). I was using Chrome. |

|

I only use Chrome and get this from time to time. |

|

Just happened again to me: |

|

FYI, we had the same issue with 2 Angular apps (https://xlayers.app and https://ngx.tools). Both apps are deployed to Firebase CDN, and the problem came from the default Firebase cache-control config. I had to temporarily disable the cache (not recommended, of course): I have tried other caching strategies but none worked. |

|

@manekinekko, you don't use a web worker, do you? |

|

Nope, SW. |

|

Interesting insight, @manekinekko. |

|

@gkalpak xlayers.app used to have a SW but I had to disable it until I find a better solution for this caching issue. |

|

yes @gkalpak . It's hard this morning ;) |

|

So, did the issue happen with the SW or also without? |

Previously, when a version was found to be broken, any clients assigned to that version were unassigned (and either assigned to the latest version or to none if the latest version was the broken one). A version could be considered broken for several reasons, but most often it is a response for a hashed asset that either does not exist or contains different content than the SW expects. See angular#28114 (comment) for more details. However, assigning a client to a different version (or the network) in the middle of a session turned out to be more risky than keeping it on the same version. For angular.io, for example, it has led to angular#28114. This commit avoids making things worse when identifying a broken version by keeping existing clients on their assigned version (but ensuring that no new clients are assigned to the broken version). NOTE: Reloading the page generates a new client ID, so it is like a new client for the SW, even if the tab and URL are the same.
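The policy described in this commit message can be illustrated with a small model. This is only a sketch under stated assumptions: `VersionAssignments` and its methods are hypothetical names, version and client IDs are plain strings, and the real ServiceWorker implementation is considerably more involved. It shows the one behavior that matters here: marking a version as broken no longer moves clients that are already on it, while new clients (including a reloaded tab, which gets a new client ID) are never assigned to a broken version.

```typescript
// Simplified, hypothetical model of client-to-version assignment.
// This is not the actual Angular ServiceWorker source.
class VersionAssignments {
  private clientToVersion = new Map<string, string>();
  private broken = new Set<string>();
  private latest: string | null = null;

  // Record the most recently installed version.
  setLatest(version: string): void {
    this.latest = version;
  }

  // Mark a version as broken WITHOUT unassigning clients already on it.
  markBroken(version: string): void {
    this.broken.add(version);
  }

  // Returns the version serving this client, or null to fall back to the network.
  versionFor(clientId: string): string | null {
    const existing = this.clientToVersion.get(clientId);
    // Existing clients stay on their version for the rest of the session.
    if (existing !== undefined) return existing;
    // New clients only ever get the latest version, and only if it is not broken.
    if (this.latest === null || this.broken.has(this.latest)) return null;
    this.clientToVersion.set(clientId, this.latest);
    return this.latest;
  }
}
```

In this model, a tab that was assigned `v1` keeps getting `v1` after `markBroken('v1')`, whereas the same tab after a reload shows up under a new client ID and is served from the network until a working version becomes the latest.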

|

@gkalpak when you have a solution, can you please assist us in resolving this issue for the RxJS docs? Since they are a fork of the Angular docs, they have the same issue. cc @niklas-wortmann |

|

@benlesh, @niklas-wortmann: Sure, happy to help. The fix would be just updating to a version of |

…43518) Previously, when a version was found to be broken, any clients assigned to that version were unassigned (and either assigned to the latest version or to none if the latest version was the broken one). A version could be considered broken for several reasons, but most often it is a response for a hashed asset that either does not exist or contains different content than the SW expects. See #28114 (comment) for more details. However, assigning a client to a different version (or the network) in the middle of a session turned out to be more risky than keeping it on the same version. For angular.io, for example, it has led to #28114. This commit avoids making things worse when identifying a broken version by keeping existing clients on their assigned version (but ensuring that no new clients are assigned to the broken version). NOTE: Reloading the page generates a new client ID, so it is like a new client for the SW, even if the tab and URL are the same. PR Close #43518

|

@benlesh, @niklas-wortmann: FYI, the fix has been released in v12.2.8. So, you can update the docs app to that version to fix the issue. |

This commit updates angular.io to the latest prerelease version of the Angular framework (v13.0.0-next.10). Among other benefits, this version also includes the ServiceWorker fix from angular#43518, which fixes angular#28114. NOTE: This commit also makes the necessary changes to more closely align angular.io with new apps created with the latest Angular CLI and remove redundant files/config now that CLI has dropped support for differential loading. Fixes angular#28114

This commit updates angular.io to a recent prerelease version of the Angular framework (v13.0.0-next.9). Among other benefits, this version also includes the ServiceWorker fix from angular#43518, which fixes angular#28114. NOTE 1: This commit also makes the necessary changes to more closely align angular.io with new apps created with the latest Angular CLI and remove redundant files/config now that CLI has dropped support for differential loading. NOTE 2: We do not update to the latest prerelease version (v13.0.0-next.10) due to an incompatibility of `@angular-eslint` with the new ESM format of `@angular/compiler` ([example failure][1]). Fixes angular#28114 [1]: https://circleci.com/gh/angular/angular/1062087

This commit updates angular.io to the latest stable version of the Angular framework (v12.2.8). Among other benefits, this version also includes the ServiceWorker fix from angular#43518, which fixes angular#28114. NOTE: This commit also makes the necessary changes to more closely align angular.io with new apps created with the latest stable Angular CLI. Fixes angular#28114

This commit updates angular.io to the latest stable version of the Angular framework (v12.2.8). Among other benefits, this version also includes the ServiceWorker fix from #43518, which fixes #28114. NOTE: This commit also makes the necessary changes to more closely align angular.io with new apps created with the latest stable Angular CLI. Fixes #28114 PR Close #43687

|

Since both #43518 (which fixed the issue for Note that people might still see the issue one more time (i.e. the first time they visit angular.io since the fix was deployed), but the error rate should be dropping (and the data from Google analytics does confirm that). We'll keep an eye on this to make sure that the error rate continues to drop. |

|

🎉 thank you @gkalpak for your determination and brilliance in finding a resolution to this really really annoying issue. |

|

This issue has been automatically locked due to inactivity. Read more about our automatic conversation locking policy. This action has been performed automatically by a bot. |

…r#43687) This commit updates angular.io to the latest stable version of the Angular framework (v12.2.8). Among other benefits, this version also includes the ServiceWorker fix from angular#43518, which fixes angular#28114. NOTE: This commit also makes the necessary changes to more closely align angular.io with new apps created with the latest stable Angular CLI. Fixes angular#28114 PR Close angular#43687

On several occasions in the past I’ve observed that if I have a long-running angular.io app open in a tab, and after some time I come back to this tab and try to navigate within the app, the app shows the doc viewer progress bar indicating that the document is being loaded, but it never actually loads the doc and remains in this state forever. Reloading the page resolves the issue.

When I was looking through the Google Analytics (GA) data today, I noticed that there was a spike in errors just after the holidays:

Google Analytics report

Notice how the spike starts just around Jan 7 when most people got back from holidays, and relatively quickly things come back to normal within the next few days.

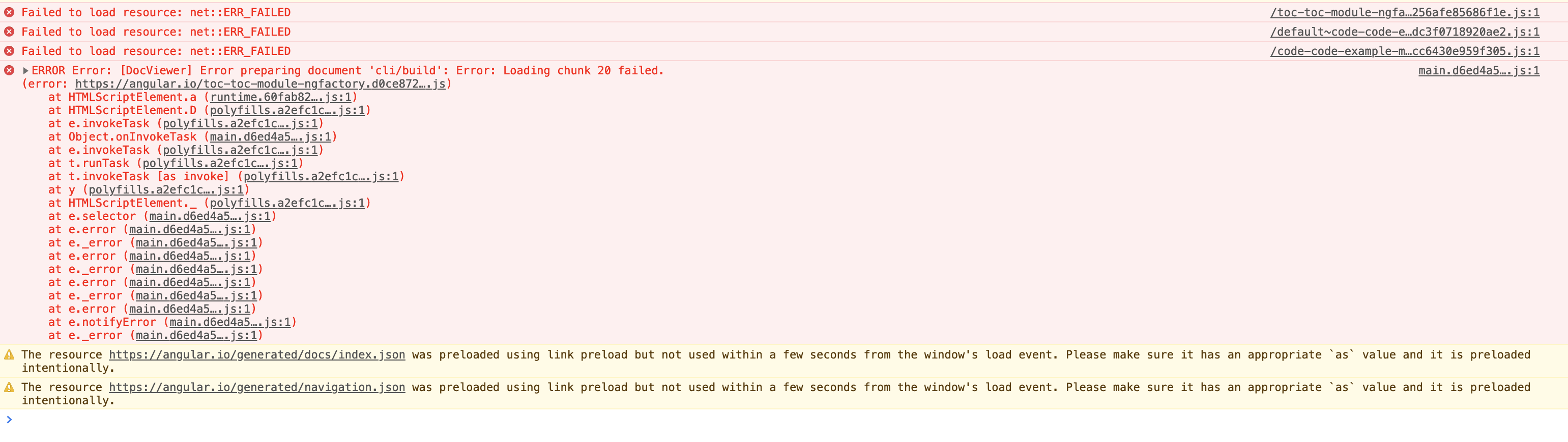

If we look into the error causes, we see that the main root cause is a failure to download certain JS chunks, mainly the “toc-module” chunk (there are some others if you go into the report and look at the long tail of errors, but they are less common).

This makes me believe that the problem I observed in the past and this error spike after holidays are related.

Here is my interpretation of the events:

There are at least three problems we need to fix:

UPDATE(2021-09-20):

See #28114 (comment) for an explanation of the problem.
