-

Notifications

You must be signed in to change notification settings - Fork 400

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Improve organization of metrics module #455

Comments

|

Thanks for the feedback. I'm definitely not averse to rethinking the file structure. And |

|

For documentation in both API and User Guide, I think we should start with the Metrics API proposal Re. structuring of the modules, I totally agree that we should combine all the little files that you mention! I was thinking using the name Re. content of the package: also please take a look at the metrics that exist but are not currently surfaced in the API documentation: https://github.com/fairlearn/fairlearn/blob/master/test/unit/metrics/test_metrics_engine_dicts.py |

I think that's more of a sphinx generated thing and how they're generated. I like merging those files, @romanlutz may need to get used to it :P

You could probably do that through extending or customizing sphinx, but I'd recommend having the table in a user guide nicely put in context rather than an automated table. |

Yes, definitely! This was more of a quick question so I did not have to open yet another issue :P

Some metrics, e.g. selection_rate, are not typical evaluation metrics anyways, so _base_metrics.py works even better I think! |

|

I agree that a handwritten table is likely to look much nicer, but.... if we went that route, we should give some thought to how to make sure that the table evolves with the auto-generated function list. |

|

@adrinjalali I just want to make sure I understand: You mean having single files in the documentation is better than more nesting? I'm totally on board with that :-) Perhaps you've noticed already, but I've moved away from very fine granular documents since that means more clicking. How about I take some time to get the table into the user guide as a PR and then we see what changes people want? I'm open to pretty much anything. The metrics were a major point of confusion for me at some point, so I'd rather avoid new users having the same experience, and that will only be possible with better documentation. Keep the feedback coming! :-) |

Adding a new method/function is not something which happens too frequently in a library, and when it does, we should make sure we put the effort to properly document it, and updating the relevant user guide is a part of that documentation process, so I guess it should come naturally.

I was talking about the |

|

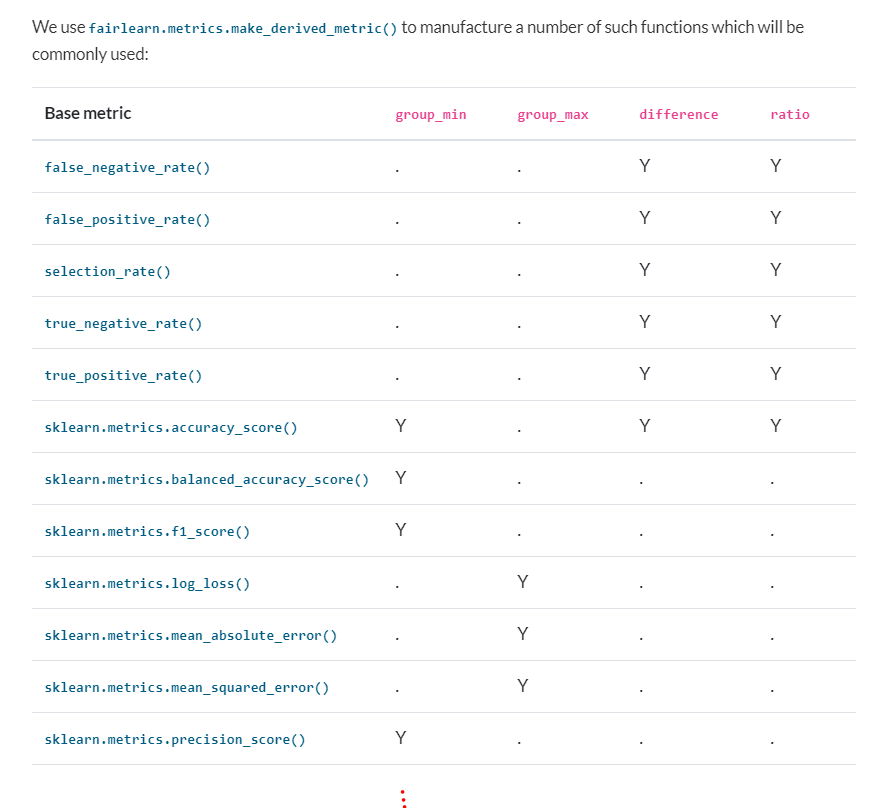

Looking at the user guide we actually do have a table with the performance metrics now: https://fairlearn.org/v0.6.0/user_guide/assessment.html#metrics For this particular issue it's probably best if we focus on the organization of the metrics module (code) rather than conflating it with the documentation. #458 touches on documentation a little bit as well, but we may want to start a completely fresh issue with very specific things that should be addressed (and small in scope). Other than that, it seems like there's agreement on merging files, so this strikes me as an issue that can move forward if there's anyone willing to take up the task. |

|

From what I can tell the only things left here beyond what @alliesaizan did in #1174 are:

@hildeweerts do you mind confirming? This is one of our older issues, so it's a particular highlight to close it out 🤣 |

Describe the issue

I think the organization of files in the fairlearn.metrics module could be improved,

both in API reference and the repository itself. My main issue regarding the documentation is that the distinction between performance metrics (e.g. recall) and fairness metrics (e.g. demographic parity) is not clear in the API reference. Additionally,navigating the repository was not very intuitive for me. For example, some of the performance metrics not directly available in scikit-learn have their own file (e.g. _balanced_root_mean_squared_error.py), whereas others are grouped in (e.g. _extra_metrics.py).

Suggest a potential alternative/fix

I think merging and/or renaming some of the seperate files could help to improve. E.g. something like this:

_performance.py (or perhaps _classification.py and _regression.py to mimic scikit-learn)PS. I suppose giving each function their own page as in the scikit-learn API reference is already implicit in the documentation proposal, right?EDIT: documentation issues moved to issue #720

The text was updated successfully, but these errors were encountered: