Solutions to all cryptopals problems: Sets 1-7, Set 8.

The only dependency on top of standard JRE 8 runtime is that on Lombok. The project runs on all subsequent versions of the Java platform such as Java 11 and Java 17.

The majority of the challenges of a set can be run by executing the com.cryptopals.Setx.main method of the set or

by running the JUnit5 tests found under src/test/java/com/cryptopals/SetXTests.

Required dependencies are defined in the project's pom.xml.

Some challenges (31,

32, 34,

35, 36,

37, 49,

57, 58,

59, 60,

66) require a server-side application.

This can be produced with mvn install and executed with

java -jar cryptopals_server-0.2.0.jar

as a typical SpringBoot application. This application provides either a RESTful API or an RMI component depending on a challenge.

For the more advanced problems I created a proper explanation about the implementation of each of these attacks, which you can find in the Table of Contents below.

-

- Challenge 57. Diffie-Hellman Revisited: Small Subgroup Confinement

- Challenge 58. Pollard's Method for Catching Kangaroos

- Challenge 59. Elliptic Curve Diffie-Hellman and Invalid-Curve Attacks

- Challenge 60. Single-Coordinate Ladders and Insecure Twists

- Challenge 61. Duplicate-Signature Key Selection in ECDSA (and RSA)

- Challenge 62. Key-Recovery Attacks on ECDSA with Biased Nonces

- Challenge 63. Key-Recovery Attacks on GCM with Repeated Nonces

- Challenge 64. Key-Recovery Attacks on GCM with a Truncated MAC

- Challenge 65. Truncated-MAC GCM Revisited: Improving the Key-Recovery Attack via Ciphertext Length Extension

- Challenge 66. Exploiting Implementation Errors in Diffie-Hellman

Challenge 48 is fairly straightforward to implement by following the steps in Section 3.1 Description of the Attack of Bleichenbacher's Chosen Ciphertext Attacks Against Protocols Based on the RSA Encryption Standard PKCS #1 paper. An important observation is that thanks to Step 2c the attack runs in O(log(n)), where n is the size of the RSA modulus.

I created a helper class to aid this process. When following the paper, one needs to pay particular attention to rounding in all the equalities. For example I ended up waisting a lot of time with Inequality (2) in Step 2c:

2B + ri·n 3B + ri·n

--------- <= si < --------- (2)

b a

Initially I implemented it by letting si iterate from the lower bound until (not including) the upper bound. However

that resulted in an incorrect implementation. The term on the right of Inequality (2) will most likely not be an integer

value. Therefore, when computed using infinite precision integers, it will be less than its counterpart computed over reals.

As a result the correct way to implement Step 2c is to let si go to (including) the upper bound when the upper bound

is rounded down to an integer:

while (true) {

BigInteger lower = divideAndRoundUp(_2B.add(rn), interval.upper),

upper = _3B.add(rn).divide(interval.lower);

for (BigInteger nextS=lower; nextS.compareTo(upper) <= 0; nextS = nextS.add(ONE)) {

if (paddingOracle.test(pubKey.encrypt(nextS).multiply(cipherText))) return s = nextS;

}

rn = rn.add(pubKey.getModulus());

}The challenge suggests to go all the way up to 768-bits moduli. With my first implementation using Java's BigInteger it takes about 30 seconds. Yet, in the real world such small RSA moduli are long a relic of the past. Trying to go for 1024-bits moduli and longer let the implementation spin for longer than I wanted to wait. To address that I switched to an optimized implementation of infinite precision integers based on The GNU Multiple Precision Arithmentic Library (GMP). Thanks to the JNA-GMP wrapper this was very easy to do. If you are on macOS, you probably already installed gmp when you installed python with Homebrew.

With tiny changes to the RSAHelper and RSAHelperExt classes the speedup was remarkable. With GMP 6.2.0 I was able to go all the way to 2048-bits moduli within just a couple of minutes:

| RSA modulus size | Average duration of attack (20 tries) |

|---|---|

| 256 bits | 2 s 262 ms |

| 768 bits | 7 s 207 ms |

| 1024 bits | 19 s 271 ms |

| 1536 bits | 39 s 213 ms |

| 2048 bits | 1 m 54s 607 ms |

This difference between the performance of JRE's implementation of BigIntegers and that of GMP is quite remarkable and goes somewhat against Joshua Bloch's advice given in "Item 66: Use native methods judiciously" of his excellent "Effective Java, 3rd edition" book. His reply to my tweet confirmed that.

/**

* @param numBits number of bits in each prime factor of an RSA modulus, i.e. the modulus is thus {@code 2*numBits} long

*/

@DisplayName("https://cryptopals.com/sets/6/challenges/47 and https://cryptopals.com/sets/6/challenges/48")

@ParameterizedTest @ValueSource(ints = { 128, 384, 512, 768, 1024 })

void challenges47and48(int numBits) {

RSAHelperExt rsa = new RSAHelperExt(BigInteger.valueOf(17), numBits);

BigInteger plainText = RSAHelperExt.pkcs15Pad(CHALLANGE_47_PLAINTEXT.getBytes(),

rsa.getPublicKey().getModulus().bitLength());

BigInteger cipherTxt = rsa.encrypt(plainText);

BigInteger crackedPlainText = PaddingOracleHelper.solve(cipherTxt, rsa.getPublicKey(), rsa::paddingOracle);

assertArrayEquals(CHALLANGE_47_PLAINTEXT.getBytes(), rsa.pkcs15Unpad(crackedPlainText));

}Bleichenbacher’s attack clearly demonstrates that RSA-PKCS1 v1.5 encryption is not CCA-secure. A truly CCA-secure public key encryption system cannot be broken even given a full decryption oracle (this is by definition of CCA security for public key encryption), while Bleichenbacher’s attack merely uses a partial oracle. Is the fix that was implemented in TLS 1.0 sufficient to make RSA-PKCS1 v1.5 CCA secure? Likely, but there's no security proof. This is the main reason v2.0 of RSA-PKCS1 adopted Optimal Asymmetric Encryption Padding (OAEP) for RSA encryption, for which there's a security proof that the resulting scheme is CCA secure under certain assumptions.

The second part of this challenge, which deals with a message length extension attack for a multiple transactions request:

Your mission: capture a valid message from your target user. Use length extension to add a transaction paying the attacker's account 1M spacebucks.

assumes that the attacker and the victim share the same authentication key, which is quite a stretch.

Challenge 52 is one of the best demonstrations of the birthday paradox I've seen. NB: the way this challenge defines the compression function containts a mistake. The correct definition should be

function MD(M, H, C):

for M[i] in pad(M):

H := C(M[i], H) ^ H

return H

For the purposes of this task it makes sense to choose a cipher whose key size is 8 bytes. It will also be easier

if the cipher's key and block sizes are the same. I opted for Blowfish, which is present in all JREs through

com.sun.crypto.provider.SunJCE provider. I used a 16 bit hash for the easier hash function f, and a 32 bit hash for g.

This way I needed to find 216 messages colliding in f to ensure there's a pair among them colliding in g.

Challenge 54 shows an ingenious way of finding a target collision between a Merkle–Damgård

hash of two messages m0 and m1, where m0 is chosen arbitrarily by the attacker while

m1 is not and needs to be augmented with a suffix that would make its hash match that of m0.

The only requirement is that |m0| > |m1| by a few blocks. The number of blocks

by which the length of m0 exceeds the length of m1 is referred to as k. The way this challenge

is presented is in the form of using hashes to produce commitments.

The attack is explained at length by John Kelsey and Tadayoshi Kohno in

their Herding Hash Functions and the Nostradamus Attack paper. The most

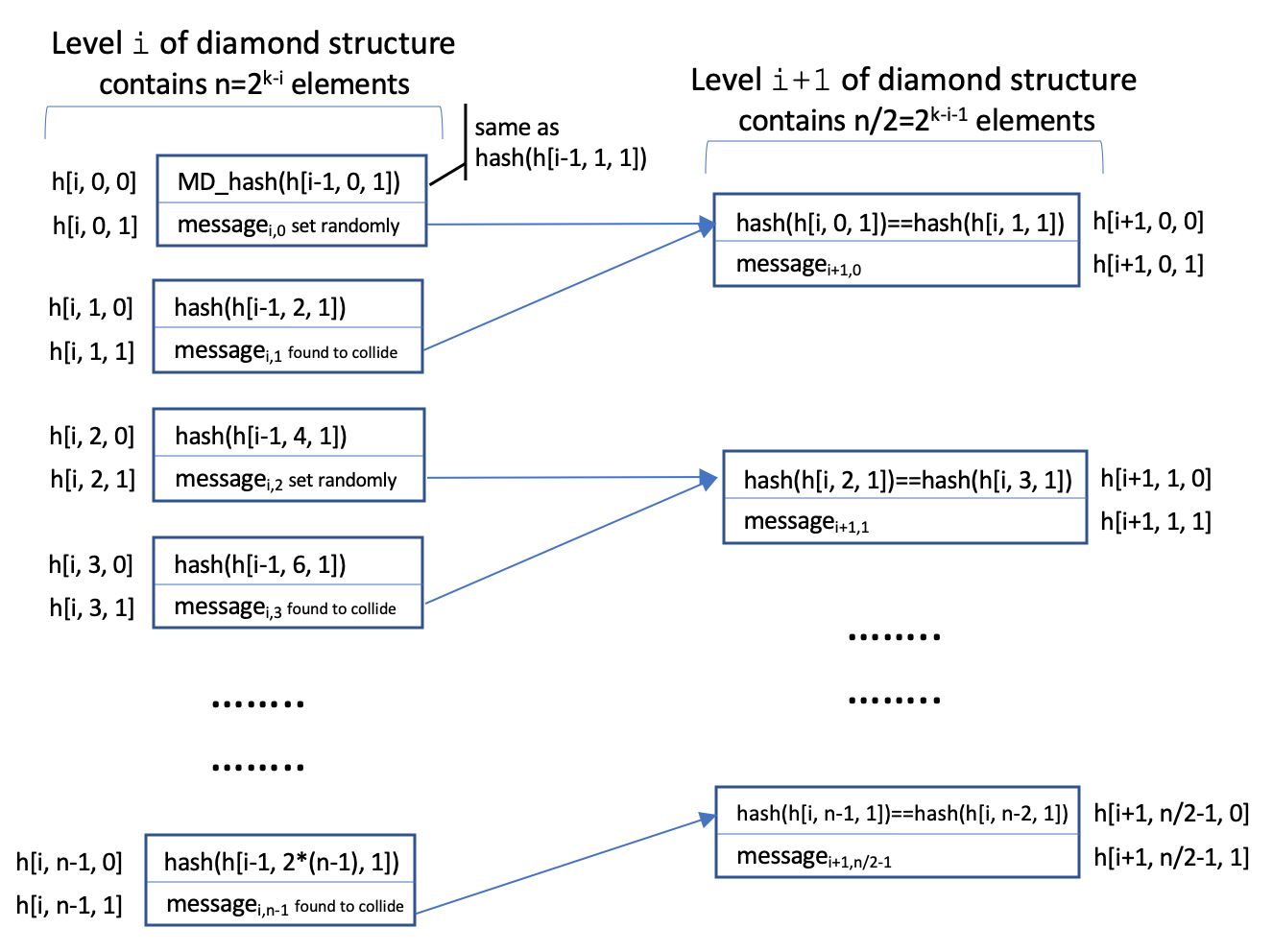

involved part of the attack is building the diamond structure. I decided to represent it as a multi dimensional array.

The first dimension (i) is the tree level, the second (j) contains 2k-i two-element arrays in which the first element

is the starting hash h[i, j] (i.e. the chaining variable) and the second element is a message block whose hash collides

with that of the message starting at either h[i, j+1] (when j is even) or h[i, j-1] (when j is odd). I demonstrate this

in the following picture:

To make working with the diamond structure easier I created the DiamondStrcuture class,

which encapsulates it.

To make working with the diamond structure easier I created the DiamondStrcuture class,

which encapsulates it.

Level 0 of the array is special in that all the hashes stored in h[0, j, 0] are the initial chaining variables

and can be set at will. I decided to populate the elements h[0, j, 0] in such as way as to ensure that they are sorted.

This allows me to make use of a binary search when I need to construct a k-blocks long suffix for m1. The

construction of level i of the diamond structure calls for finding 2k-i message blocks whose hash matches

a given target. I created MDHelper::findCollisionsWith

helper method to make it easier. To speed up the construction of a given level, I observed that this task

lends itself to parallelization.

This sped up the process a lot.

Some other notes worth mentioning. I use m0 of 14 blocks long and m1 of 4 blocks. This gives me

a diamond structure with 10 levels and 210 different initial hashes at level 0. I make use of the easy 16-bit hash f

from Challenge 52. With this setup I am able to construct the desired Nostradamus message in about 11 minutes on

my MacBook Pro (with 8 virtual cores).

@Test

void challenge54() throws NoSuchAlgorithmException, NoSuchPaddingException, BadPaddingException, InvalidKeyException, IllegalBlockSizeException, ExecutionException, InterruptedException {

byte[] H = {0, 1}, H2 = {0, 1, 2};

MDHelper mdHelper = new MDHelper(H, H2, "Blowfish", 8);

String originalCommittedToMsg = /* 14 blocks, 2^10 */

"3-5, 0-0, 1-6, 4-2, 2-2, 4-3, 1-1 dummy prediction that will be replaced"

+ "1234567887654321012345677654321012345678",

nostradamusMsg = "3-1, 0-1, 2-6, 2-2, 3-1, 1-1,0-3"; /* 4 blocks */

byte[] hash = mdHelper.mdEasy(originalCommittedToMsg.getBytes()),

trgtHash = mdHelper.mdInnerLast(originalCommittedToMsg.getBytes(), H,

0, originalCommittedToMsg.length() / 8), sfx;

DiamondStructure ds = new DiamondStructure(

originalCommittedToMsg.length() - nostradamusMsg.length() >> 3,

trgtHash, "Blowfish", 8);

sfx = ds.constructSuffix(mdHelper.mdInnerLast(nostradamusMsg.getBytes(), H, 0, 4));

if (sfx != null) {

assertEquals(originalCommittedToMsg.length(), nostradamusMsg.length() + sfx.length);

byte longMsg[] = Arrays.copyOf(nostradamusMsg.getBytes(), nostradamusMsg.length() + sfx.length);

System.arraycopy(sfx, 0, longMsg, nostradamusMsg.length(), sfx.length);

assertArrayEquals(hash, mdHelper.mdEasy(longMsg));

} else {

fail("Too few leaves in the diamond structure :-(");

}

}It is not safe to produce a commitment just by hashing a secret message m with a collision-resistant hash function. There's no

security proof that such a construction is safe. The correct way to produce a commitment for a secret message m

is to generate a uniformly distributed random number r of, say 512 bits if SHA256 is used as a collision-resistant hash function.

Then compute h = SHA256(r || m). The commitment is a pair (r, h), of which h is revealed while r is kept secret until

it comes time to prove knowledge of m. However the attack presented in this challenge will still work with this correct setup

since the person making the prediction is in control of r.

This attack shows that producing a commitment by hashing a secret message m with a hash function that is built using

the Merkle–Damgård construction may not guarantee the binding property of the commitment, which a cryptographically secure

commitment scheme must possess (in addition to that of hiding m). If a hash function is target collision resistant,

using it to produce commitments would be safe.

Why does this attack work? The main reason is that hash functions employing the Merkle–Damgård construction are vulnerable to message-length extension attacks. That's the main reason the recently standardized by NIST SHA3 hash standard uses the sponge construction instead of Merkle–Damgård. Using SHA3 for making commitments is immune from this attack. So would be using HMAC0 with SHA256 as the underlying hash: HMAC0(m) := HMAC(0l, m) = H(opad || H(ipad || m))

How feasible would mounting this attack be against SHA256? In their original paper the authors indicate that it reduces

the effort required for finding a collision with the target hash from O(2256) to O(2172) when

k=84, i.e. |m0| is greater than |m1| by 84 512-bit blocks (or by 5.25 KiB). The space complexity

of such a diamond structure would be huge. Level 0 alone would take up 284 * (64 + 32) bytes, which is

1536 YiB yobibyte (1 yobibyte == 280 bytes) — a mind-boggling number. This makes this attack infeasible

against SHA-256 in my opinion. Using shorter hashes from the MD family for making commitments is indeed risky.

Challenge 55 is probably one of the most interesting to work on in the first 7 sets. I succeeded in implementing it in a uniform Object-Oriented way, which aids readability and maintainability. The implementation is also blazingly fast — it finds a collison within a few seconds. Here is one found with it:

Collision found between

683E10B651E9185B4D9886D90B7634AE7C4D753533F75041C388E6ACF20CF8B12BA9C27368F09B22EDCE3445BBFED7E8636EDB70070DF0EB7449FA54E421D246

683E10B651E918DB4D9886490B7634AE7C4D753533F75041C388E6ACF20CF8B12BA9C27368F09B22EDCE3445BBFED7E8636EDA70070DF0EB7449FA54E421D246

MD4: B9B0031B30D53E826B80CBDDBE7354D9

I succeeded in fully enforcing all constraints from the first round of MD4 as well as all constraints from the first two steps of the second round. I didn't figure out how to apply the constraints from the 3rd step of the second round of MD4. X. Wang et al. give some hints in their paper, yet they are not easy to follow

Utilize more precise modification to correct some other conditions. For example, we can use the internal collision in Table 2 in which there are three message words are changed to correct c5,i, i = 26, 27, 29, 32. The precise modification should add some extra conditions in the first rounds (see Table 2) in advance. There are many other precise modifications. c5,30 can be corrected by other modification. By various modifications, besides two conditions in the third round, almost all the conditions in rounds 1-2 will be corrected. The probability can be among 2^6 ∼ 2^2.

It is interesting to note that X. Wang et al. used differential cryptanalysis to discover the conditions that lead to collisions in MD4. MD4 was developed in 1990 by Ron Rivest, which is also the year in which Eli Biham and Adi Shamir introduced differential cryptanalysis. Obviously the designer of MD4 didn't take it into account while designing MD4. Interestingly, the NSA discovered differential cryptanalysis as early as in the 1970s, which is one of the reasons why DES is immune to it (see this paper or Section 12.4 in Bruce Schneier's Applied Cryptography 2nd edition for details).

Challenge 56 is an excellent demonstration of how even a tiny bias that makes the distribution of a secure PRF slightly different from uniform might be enough to break it. In the case of RC4 bytes 2 to 255 of RC4 keystream have biases on the order of 1/216 or higher.

This challenge is based on the attack outlined in Section 4.1 of this paper. In my solution I used the biases in the 16th (Z16) and 32nd (Z32) bytes of RC4's keystream, which are elucidated in Section 3.1 of the paper.

The essence of this attack is fairly simple — the biases in the distributions of Z16 and Z32 make

the frequency of a few values much higher than 1/256 (0x00, 0xF0, 0x10 for Z16;

and 0x00, 0xE0, 0x20 for Z32). If we ensure that we encrypt the same plaintext bytes in these positions

repeatedly, certain ciphertext values for C16 and C32 will also occur more frequently than others.

By encrypting on the order of 224 values, we construct the distribution of C16 and C32,

which (like the distribution of Z16 and Z32) will not be uniform. This is enough to recover the

original plaintext bytes P16 and P32 using the maximum-likelihood estimation.

Since the biases in Z16 and Z32, while non-negligible, are still fairly small, I used 227 RC4 keystreams (with independent 128-bit keys) to construct their frequency distributions. With smaller values such as 225 or less, the recovered plaintext cookie will contain errors, particularly for P32. BTW: In the paper Nadhem J. AlFardan et al. used 244 RC4 keystreams to determine the disributions of Z16 and Z32. For this challenge this would be an overkill.

For the maximum-likelihood estimation of the plaintext bytes I used 224 ciphertexts. This is enough to recover P16 and P32 and fully corraborates the results in Figure 4 in the paper.

This set of problems is amazingly interesting, however it took me approximately twice as long as the previous sets combined. It also calls for creating some handy software that might be of use beyond solving these challenges: such as code for elliptic curve cryptography, various ways for calculating dlog, code for GCM along with its GHASH one-time-hash, code for finding roots of polynomials over different fields, matrix operations over GF(2), matrix operations over R, etc.

Challenge 57 introduces the Pohlig-Hellman

algorithm. The best general-purpose algorithm for taking discrete logs in Zp* is

the General Number Field Sieve (GNFS). The running time

of the GNFS is O(∛p), where p is the group's prime.

The Pohlig-Hellman algorithm lets take discrete logs potentially faster than that for groups where n = p-1 (the order of Zp*)

has many small factors. Moreover it works for any cyclic group — the fact that will come in handy in Challenge 59.

If n = p1e1 · p2e2 · ... · prer, then

the computational complexity of taking dlog with Pohlig-Hellman is O{Σ[ei · (lgn + √pi)]}.

Well, the way @spdevlin proposes to go about the solution, which is the path I took:

Friendly tip: maybe avoid any repeated factors. They only complicate things.

the complexity will be O[Σ(lgn + √pi)], where n <= p1 · p2 · ... · pr.

Probably the most involved part of Pohlig-Hellman is a need to implement Garner's algorithm to reconstruct Bob's private key from its residues per subset of the moduli of p-1.

All in all the challenge presents an attack that can bypass DH implementations where Bob makes some rudimentary checks on the offered subgroup description (p, q, g):

- Are both p and q prime?

- Does q divide p-1?

- Is g different from 1?

- Is gq equal 1?

The challenge does make two big assumptions though, namely that

- Bob will naively hang on to the same private key across all new sessions with Alice.

- That group Zp* contains a large number of subgroups with small order. The attack will for example not work if p is a safe prime.

Challenge 58 makes the attack from the previous challenge yet more realistic.

It can be mounted against a group where p-1 has at least one large factor in addition to q (the order of a generator used).

The attack makes use of J.M. Pollard's Lambda Method for Catching Kangaroos, as outlined in

Section 3 of Pollard's paper.

While not as efficient as the GNFS, Pollard's kangaroo algorithm can be applied to any cyclic group (e.g. not only to Zp*

but also to elliptic curve groups) — a fact that will be of use in a later elliptic curve challenge.

Its running time is O(√q), where q is the order of the generator used.

Pollard's method employs a pseudo-random mapping function f that maps from set {1, 2, ..., p-1} to set {0, 1, ... k-1}. The challenge suggested the following simplistic definition for f (which is similar to what Pollard gives in one of his examples):

f(y) = 2^(y mod k)

I used ceil(log2√b + log2log2√b - 2) for calculating k, which is based on

the suggestion in Section 3.1 of this paper by Ravi Montenegro and Prasad Tetali.

When deciding on the amount of jumps N that the tame kangaroo is to make, I used the suggestion from the challenge description and set N to the mean of range of f multiplied by 4. With this choice of the constant the probability of Pollard's method finding the dlog is 98%.

I generate group Zp* as follows:

pis a 1024-bit prime meeting the following requirement:p = Nq + 1, whereqis a 42-bit prime. This is based on the advice from Section 11.6 of "Cryptography Engineering, 2nd edition" by Niels Ferguson, Bruce Schneier, and Tadayoshi Kohno.- The generator

gis a random member of Zp* that has an order ofq.

The only deviation from the book is that I use fewer than 256 bits for q, which obviously weakens the group. Unfortunately

Pollard's kangaroo algorithm doesn't lend itself to parallelisation so choosing q to be much larger than 42 bits makes the

attack impractical. E.g. with a 42-bit q the attack takes on the order of 20 minutes on my MacBook Pro.

To make the attack more realistic I establish only one session to Bob to find b mod r, where r is one factor of N.

This no longer assumes that Bob uses the same private key across all new sessions with Alice. The attack thus works

in a realistic setting where Bob generates a new private key for each new session.

NB: The attack will still be infeasible if p is chosen to be a safe prime. However such choices of Zp*

are rare as they lead to more computationally intensive exponentiation in the group.

Challenge 59 is based on the Weierstrass form of representing elliptic curves: y2 = x3 + ax + b

When implementing the group operation in E(Fp), division should be carried out as multiplication by the multiplicative inverse mod p, e.g.:

function combine(P1, P2):

if P1 = O:

return P2

if P2 = O:

return P1

if P1 = invert(P2):

return O

x1, y1 := P1

x2, y2 := P2

if P1 = P2:

m := ( (3*x1^2 + a) * modInv(2*y1, p) ) mod p

else:

m := ( (y2 - y1) * modInv(x2 - x1, p) ) mod p

x3 := ( m^2 - x1 - x2 ) mod p

y3 := ( m*(x1 - x3) - y1 ) mod p

return (x3, y3)

For convenience's sake I implemented the class that represents elements of the curve so that each coordinate

of a point (x, y) is positive, i.e. x and y are stored mod p. This makes the implementation simpler.

For the rest the attack is pretty similar to Challenge 57 except that the group given in the challenge

ECGroup(modulus=233970423115425145524320034830162017933, a=-95051, b=11279326, order=233970423115425145498902418297807005944)

doesn't have an order with many small factors. Therefore instead of finding generators of the small subgroups of this elliptic curve group, the attack hinges on Alice foisting on Bob bogus public keys that are not on the original elliptic curve but are rather on specially crafted curves

ECGroup(modulus=233970423115425145524320034830162017933, a=-95051, b=210, order=233970423115425145550826547352470124412)

ECGroup(modulus=233970423115425145524320034830162017933, a=-95051, b=504, order=233970423115425145544350131142039591210)

ECGroup(modulus=233970423115425145524320034830162017933, a=-95051, b=727, order=233970423115425145545378039958152057148)

The orders of these elliptic curves do have many small factors. Interestingly all the three crafted curves are required

to recover Bob's private key. This is because the product of the small factors of each of these curves is less than

the order of the generator given for the challenge (182, 85518893674295321206118380980485522083). You need the distinct

small factors collected from all the crafted curves.

NB the algorithm suggested in Challenge 57 and this one for finding subgroups of required order

Suppose the group has order q. Pick some random point and multiply by q/r. If you land on the identity, start over.

only works for cyclic groups. For Challenge 57 it didn't matter much because Zp* is always

cyclic. This doesn't always hold for elliptic curve groups though, i.e. not every elliptic curve group is cyclic. In fact you

will not be able to find a generator of order 2 for y^2 = x^3 - 95051*x + 210 if you use the order of the group

233970423115425145550826547352470124412. The correct way to find generators of required order is to use the order

of the largest cyclic subgroup of an elliptic curve. For this curve it is 116985211557712572775413273676235062206.

See my discussion with @spdevlin. Worth noting here

that any group of a prime order is cyclic. That's one of the reasons why some popular elliptic curves such as

secp256r1 or secp256k1 have prime orders. The converse is not always true, i.e. there can be groups of non-prime order

that are cyclic.

The attack in this challenge does make two assumptions though, namely that

- Bob will hang on to the same private key across all new sessions with Alice. This is the same as in Challenge 57.

- Bob will not check whether Alice's public key lies on the expected elliptic curve. How big of an assumption is that? Unfortunately not too big because in many implementations of ECDH Bob is only sent the x coordinate of Alice's public key for the sake of efficiency, and the implementation doesn't check if x3 + ax + b is a quadratic residue. In fact such an attack can be pulled off on the ubiquitous NIST P256 curve. It takes a twist-secure elliptic curve such as 25519 to foil this attack. Or one can just check if Alice's public key is on the expected curve, e.g. the following check by Bob will render this attack harmless:

public Set8.Challenge59ECDHBobResponse initiate(ECGroup.ECGroupElement g, BigInteger q, ECGroup.ECGroupElement A) throws RemoteException {

// A bit contrived for Bob to hang on to the same private key across new sessions, however this is what

// Challenge 59 calls for.

if (ecg == null || !ecg.equals(g.group()) || !this.g.equals(g)) {

ecg = g.group();

this.g = g;

privateKey = new DiffieHellmanHelper(ecg.getModulus(), q).generateExp().mod(q);

}

// Is Alice's public key on the curve?

if (!ecg.containsPoint(A)) {

throw new RemoteException("Public key presented not on the expected curve");

}Challenge 60 is based on the Montgomery form of representing elliptic curves: Bv2 = u3 + Au2 + u

A Montgomery form curve equation can always be changed into the Weierstrass form, the converse is not always true.

Given isomorphism between EC groups of the same order regardless of their form, I abstracted the concept of

an EC point into an interface and refactored the rest of the classes accordingly. This ensured a shared implementation

of the scale and dlog methods:

public interface ECGroupElement {

BigInteger getX();

BigInteger getY();

ECGroupElement getIdentity();

ECGroupElement inverse();

ECGroupElement combine(ECGroupElement that);

ECGroup group();

/** Returns the x coordinate of kP where P is this point */

BigInteger ladder(BigInteger k);

default ECGroupElement scale(BigInteger k) {

ECGroupElement res = getIdentity(), x = this;

while (k.compareTo(BigInteger.ZERO) > 0) {

if (Set5.isOdd(k)) res = res.combine(x);

x = x.combine(x);

k = k.shiftRight(1);

}

return res;

}

}Analogously for the concept of an EC group:

public interface ECGroup {

/** Returns the order of field F<sub>p</sub> */

BigInteger getModulus();

/** Returns the order of this curve, i.e. the number of points on it. */

BigInteger getOrder();

/** If this group is cyclic, returns its order. Otherwise returns the order of the largest cyclic subgroup. */

BigInteger getCyclicOrder();

/** Returns the identity element of this group */

ECGroupElement getIdentity();

/**

* Returns the order of the quadratic twist of this curve

*/

default BigInteger getTwistOrder() {

return getModulus().multiply(TWO).add(TWO).subtract(getOrder());

}

/**

* Calculates the y coordinate of a point on this curve using its x coordinate

*/

BigInteger mapToY(BigInteger x);

/** Checks if the point {@code elem} is on this curve */

boolean containsPoint(ECGroupElement elem);

/** Creates a point on this curve with designated coordinates */

ECGroupElement createPoint(BigInteger x, BigInteger y);

BigInteger ladder(BigInteger x, BigInteger k);

}NB For a Montgomery curve the point at infinity O is always (0, 1). Each Montgomery curve has at least one point of order 2, it is always (0, 0).

This challenge turned out to be one of the toughest so far. Here Alice sends Bob only the x-coordinate of her public key.

Bob then derives the DH symmetric key using the Montgomery ladder: group.ladder(xA, b), where xA is the

x-coordinate of Alice's public key and b is Bob's private key. Bob also sends back to Alice only the x-coordinate of

his public key: g.ladder(privateKey), where g is the generator of the EC group.

What makes this challenge much more computationally intensive is that when the protocol uses only the x-coordinates of Alice's public key, Alice never learns the exact residues of Bob's private key when she foists public keys that are in fact generators of small subgroups. @spdevlin, the author of the challenge, gives a small hint:

HINT: You may come to notice that ku = -ku, resulting in a combinatorial explosion of potential CRT outputs. Try sending extra queries to narrow the range of possibilities.

By way of illustration. In this challenge we work with a Montgomery curve

MontgomeryECGroup(modulus=233970423115425145524320034830162017933, A=534, B=1, order=233970423115425145498902418297807005944, cyclicOrder=233970423115425145498902418297807005944)

which is isomorphic to

WeierstrassECGroup(modulus=233970423115425145524320034830162017933, a=-95051, b=11279326, order=233970423115425145498902418297807005944, cyclicOrder=233970423115425145498902418297807005944)

from the previous challenge.

The twist of our Montgomery curve has order 2·modulus + 2 - order-of-curve = 233970423115425145549737651362517029924. The first gotcha is that the twist is not a cyclic group and just taking small factors of its order will not do (it does have subgroups that are cyclic though). @spdevlin says:

Calculate the order of the twist and find its small factors. This one should have a bunch under 2^24.

Well, the small factors are [2, 11, 107, 197, 1621, 105143, 405373, 2323367]. However you will not be able to find a generator of order 2 if you use assume the twist has a cyclic order of 233970423115425145549737651362517029924. You will be able to find generators for the other small factors: [11, 107, 197, 1621, 105143, 405373, 2323367]. For a randomly generated Bob's private key, sending the generators of these subgroups disguised as Alice's public keys reveals the following facts about Bob's private key b:

Generator of order 11 found: 76600469441198017145391791613091732004

Found b mod 11: 4 or 11-4=7

Generator of order 107 found: 215154098129284057249603159073175023533

Found b mod 107: 24 or 107-24=83

Generator of order 197 found: 94955123407611383099634454718224635806

Found b mod 197: 44 or 197-44=153

Generator of order 1621 found: 90340124320150600231802526508276130439

Found b mod 1621: 390 or 1621-390=1231

Generator of order 105143 found: 226695433509445480278297098756629724558

Found b mod 105143: 6979 or 105143-6979=98164

...

You thus have 27=128 combinations of Bob's private key modulo the product of the [11, 107, 197, 1621, 105143, 405373, 2323367] moduli. And then you'll need to take a DLog for each of these combinations to end up with 128 guesses of Bob's private key. This will probably take a few days to compute on a typical laptop. Can we do better? Yes, it is possible to whittle down the number of combinations to just two with one additional call to Bob. What you need to do is find a generator on the twist curve of order which is the composite of these small moduli. Staying with the above example, it would mean finding a generator of order

r = 11 · 107 · 197 · 1621 · 105143 · 405373 · 2323367 = 37220200115549684379403037

and then initiating a DH exchange with Bob giving him this generator as Alice's public key. Poor Bob will then calculate a symmetric key (i.e. the mac key in the context of this challenge) by raising this generator to his private key exponent and send his Mac response.

We will then try to calculate 27 different symmetric keys ourselves each based on one of the 27 combinations

of Bob's private key modulo r = 37220200115549684379403037. Those combinations that

result in the identical Mac to that returned by Bob are the ones that are worth taking a DLog on to recover Bob's full private key. There'll

be only two unique candidates of Bob's private key modulo r = 37220200115549684379403037 to try:

k and 37220200115549684379403037 - k. Initially I had a less elegant way of going about this wrinkle. The current

implementation is thanks to the idea shared with me by Gregory Morse.

I ended up creating a class dedicated to generating different possible values of Bob's private key

modulo r (i.e. modulo 11 · 107 · 197 · 1621 · 105143 · 405373 · 2323367 = 37220200115549684379403037).

The class implements Iterable and thus allows iterating

through all possible combinations of the private key modulo r. Each candidate is constructed using

Garner's formula.

There are more intricacies to tackle along the way. Some small, others bigger:

-

A fairly small complication is that finding

b mod small-primerequires ploughing through large ranges for the bigger subgroups. For example to find b mod 2323367 requires wading through the [0, 2323367/2] range, and for each element of the range you need to calculate a DH key and derive a MAC. Without parallelizing this easily take a few minutes. I implemented logic to carry such scans in parallel to save time. -

Once you know Bob's private key

bmodulo the product of small primesr= 37220200115549684379403037 (b mod r = n), taking a DLog in E(GF(p)) to recover the full private ket will take a few hours of time. The largerr, the less effort DLog will take. Are there any other small factors to use? I searched up to 232 and didn't find any. However there's a small improvement possible. Remember that the order of the twist has a divisor of 2 but that you cannot find a subgroup of order 2 if you assume the twist is a cyclic group of order 233970423115425145549737651362517029924? The smallest subgroup you'll find has order 11. However you can find a subgroup of order 22. So instead of finding residues of Bob's private key modulo these primes [11, 107, 197, 1621, 105143, 405373, 2323367] I switched to searching for residues of moduli [22, 107, 197, 1621, 105143, 405373, 2323367] instead. Garner's algorithm still works fine as its only requirement is that moduli be pairwise co-prime. This let me learn Bob's key modulor= 74440400231099368758806074 instead of modulo 37220200115549684379403037, roughly halving the time needed to take DLog later on.A still cleaner way to address this would be to spend more time analyzing the twist and figuring out the order of its largest cyclic group, which is obviously less than the order of the twist 233970423115425145549737651362517029924. And then to search for generators of small subgroups relative to this cyclic subgroup. This challenge reveals an interesting fact — the twist of a cyclic Elliptic Curve group need not be cyclic.

-

Applying the the kangaroo attack from Challenge 58 correctly also warrants a couple of explanations. If Bob's private key is the same number of bits as the legit generator of the curve, you might easily trip up. In this problem the generator is

MontgomeryECGroup.ECGroupElement(u=4, v=85518893674295321206118380980485522083, order=29246302889428143187362802287225875743)I implemented Bob's part so that it ensures that its private key has the same number of bits as the generator. By now we know Bob's private key

bmodris equaln. That means that b = n + m·r and the only thing we miss to reconstruct Bob's pkbis findingm. Applying the maths of the kangaroo attack from Challenge 58:y = g^b = g^(n + m·r) y = g^n · g^(m·r) y' = y · g^-n = g^(m·r) g' = g^r y' = (g')^mshows that we have everything needed to calculate

mincludingy, which is Bob's public key (typically designated as B). How do we findy? It is returned by Bob in every DH response it sends back including the last one we received when we searched for the generator of the subgroup of order 74440400231099368758806074. Here's a relevant piece of server-side code representing Bob, with an appropriate comment added.public Set8.Challenge60ECDHBobResponse initiate(ECGroupElement g, BigInteger q, BigInteger xA) { init(g, q); macKey = Set8.generateSymmetricKey(g.group(), xA, privateKey, 32, Set8.MAC_ALGORITHM_NAME); mac.init(macKey); return new Set8.Challenge60ECDHBobResponse(g.ladder(privateKey), // this is Bob's public key Set8.CHALLENGE56_MSG, mac.doFinal(Set8.CHALLENGE56_MSG.getBytes()) ); }

Now we can do the rest:

ECGroupElement gPrime = base.scale(r), y = base.group().createPoint(resp.xB, base.group().mapToY(resp.xB)); List<BigInteger> ret = new ArrayList<>(); for (BigInteger n : cands) { System.out.printf("Trying b mod %d = %d as Bob's private key%n", r, n); ECGroupElement yPrime = y.combine(base.scale(order.subtract(n))); BigInteger m = gPrime.dlog(yPrime, order.subtract(ONE).divide(r), ECGroupElement::f); n = n.add(m.multiply(r)); ret.add(n); System.out.println("Possible private key: " + n); }

Time for the final run of the test:

@ParameterizedTest @ValueSource(strings = { "rmi://localhost/ECDiffieHellmanBobService" })

void challenge60(String bobUrl) throws RemoteException, ... {

MontgomeryECGroup mgroup = new MontgomeryECGroup(new BigInteger("233970423115425145524320034830162017933"),

valueOf(534), ONE, new BigInteger("233970423115425145498902418297807005944"));

MontgomeryECGroup.ECGroupElement mbase = mgroup.createPoint( // The base point, aka the generator

valueOf(4), new BigInteger("85518893674295321206118380980485522083"));

BigInteger q = new BigInteger("29246302889428143187362802287225875743"); // Order of the base point.

ECDiffieHellman ecBob = (ECDiffieHellman) Naming.lookup(bobUrl);

boolean recovered = false;

for (BigInteger b : breakChallenge60(mbase, q, bobUrl)) {

boolean isValid = ecBob.isValidPrivateKey(b);

System.out.printf("Recovered Bob's secret key: %d? %b%n", b, isValid);

recovered |= isValid;

}

assertTrue(recovered, "Didn't succeed in recovering Bob's secret key :-(");

}Recall that we have two candidates of Bob's private key modulo 74440400231099368758806074:

Trying b mod 74440400231099368758806074 = 23977054913240415887527048 as Bob's private key

k=26, N=11184810

xt=28871456718421, upperBound=29264338846924

yt=MontgomeryECGroup.ECGroupElement(u=66018503796393609535400154879727009901, v=75220517950417414937371017845721779515)

Possible private key: 28218217810951813013557371685215994592

Trying b mod 74440400231099368758806074 = 50463345317858952871279026 as Bob's private key

k=26, N=11184810

xt=28871456718421, upperBound=29264338846924

yt=MontgomeryECGroup.ECGroupElement(u=66018503796393609535400154879727009901, v=75220517950417414937371017845721779515)

Possible private key: 50463345317858952871279026

Recovered Bob's secret key: 28218217810951813013557371685215994592? true

Recovered Bob's secret key: 50463345317858952871279026? false

This challenge is an excellent demonstration of the extra safety that one obtains by using only the x-coordinates of Alice's and Bob's public keys when implementing DH on an elliptic curve group. If Alice and Bob go a step further and also ensure that they use a twist secure elliptic curve group E(GF(p)) such as the curve 25519, their implementation will be almost bullet-proof. E.g. a twist secure elliptic curve group is one whose quadratic twist Ē(GF(p)) has a prime order or an order without any small subgroups. The challenge also highlights the importance of choosing large private keys, ideally the same number of bits as the order of the generator.

The first part of Challenge 61 that concerns itself with Duplicate Signature Key Selection (DSKS) for ECDSA is almost trivial compared to anything else in Sets 7 and 8. The implementation is quite compact and simpler than DSA atop of Zp* since there's only one cyclic group of points on E(Fp) to deal with rather than two groups Zp* and Zq* as is the case in the classical DSA. The effort to produce a DSKS for ECDSA is negligible, even for an industry standard curve such as the curve 25519:

@Test

void challenge61ECDSA() {

MontgomeryECGroup curve25519 = new MontgomeryECGroup(CURVE_25519_PRIME,

valueOf(486662), ONE, CURVE_25519_ORDER.shiftRight(3), CURVE_25519_ORDER);

MontgomeryECGroup.ECGroupElement curve25519Base = curve25519.createPoint(

valueOf(9), curve25519.mapToY(valueOf(9)));

BigInteger q = curve25519.getCyclicOrder();

ECDSA ecdsa = new ECDSA(curve25519Base, q);

DSAHelper.Signature signature = ecdsa.sign(CHALLENGE56_MSG.getBytes());

ECDSA.PublicKey legitPk = ecdsa.getPublicKey(),

forgedPk = Set8.breakChallenge61ECDSA(CHALLENGE56_MSG.getBytes(), signature, ecdsa.getPublicKey());

assertTrue(legitPk.verifySignature(CHALLENGE56_MSG.getBytes(), signature));

assertTrue(forgedPk.verifySignature(CHALLENGE56_MSG.getBytes(), signature));

assertNotEquals(legitPk, forgedPk);

// ECDSA is not strongly secure, i.e. if (r, s) is a valid ECDSA signature on m, then so is (r, -s).

DSAHelper.Signature altSignature = new DSAHelper.Signature(signature.getR(), q.subtract(signature.getS()));

assertTrue(legitPk.verifySignature(CHALLENGE56_MSG.getBytes(), altSignature));

assertTrue(forgedPk.verifySignature(CHALLENGE56_MSG.getBytes(), altSignature));

}An important point about ECDSA worth mentioning is that ECDSA signatures are not strongly secure in the sense that

if (r, s) is a valid signature on message m then it is easy to come up with another valid signature on the same message.

For ECDSA that is (r, -s). The last three statements in the above test demonstrate this in action.

Why does signature (r, -s) work too? This is easy to see from how r is constructed:

function sign(m, d):

k := random_scalar(1, n)

r := (k * G).x

s := (H(m) + d*r) * k^-1

return (r, s)

There's another point on the curve whose x coordinate matches that of k · G, it is point -k · G. Plugging -k · G in the verification formulas shows the desired outcome.

Mounting a DSKS attack on RSA is much more laborious. I implemented it for relatively small RSA moduli of 320 bits.

The biggest effort went into finding primes p and q that meet the requirements for 1) p-1 and q-1 being smooth, 2)

both s and pad(m) (s^e = pad(m) mod N) being generators of the entire Zp* and Zq* groups, and 3) gcd(p-1, q-1)=2.

I used PKCS#1 v1.5 mode 1 padding with SHA-1, just like in Challenge 42.

To remind, PKCS#1 v1.5 mode 1 paddding looks as follows:

0x00 || 0x01 || PS || 0x00 || ASN.1 || HASH

where the padding string PS must consist of at least eight 0xff bytes. Given SHA-1 hashes take up 20 bytes, and ASN.1

designation of SHA-1 another 15, this all leads to a minimum RSA modulus length of 20+15+3+8=46 bytes or 368 bits.

This length of RSA moduli is rather difficult to work with, so I implemented this challenge by allowing the padding string

PS to consist of two rather than 8 0xff bytes. This reduces the overhead of PKCS#1 padding with SHA-1 to

20+15+3+2=40 bytes, implying a minimum RSA modulus of 320 bits.

I ended up writing quite a bit of concurrent code to tackle this, and pre-calculated all small primes less than 220 so as to be able to find primes meeting the criterion 1) above in linear time. Even with such relatively small moduli (both p and q are around 160 bits), finding them takes on the order of 20 minutes on my MacBook Pro with all cores searching. NB it is vital that p*q is larger than the modulus of the original public key, so I search for primes that are 161 bits long to play it safe.

Suitable primes found:

DiffieHellmanUtils.PrimeAndFactors(p=2252226720431925817465020447075111488063403846689, factors=[2, 7, 277, 647, 2039, 2953, 14633, 139123, 479387, 904847]),

DiffieHellmanUtils.PrimeAndFactors(p=2713856776699319359494147955700110393372009838087, factors=[2, 13, 17, 23, 26141, 56633, 80429, 241567, 652429, 1049941])]

After that I calculate ep=logs(pad(m)) mod p and eq=logs(pad(m)) mod q using a combination of

Pohlig-Hellman and J.M. Pollard's Lambda Method using a technique from Challenge 59.

To make Pollard's Lambda Method tractable I ensured that the product

of all prime factors for each of p-1 and q-1 is at least 3700000000000000000000000000000000. I arrived at this

number heuristically, for DLogs in a group whose prime is around 160 bits long Pollard's Lambda Method works reasonably fast.

For smaller moduli I divide it by 20.7·(320 - RSA_Modulus_bit_length).

The following part of the problem description deserves a word of caution

4. Use the Chinese Remainder Theorem to put ep and eq together:

e' = crt([ep, eq], [p-1, q-1])

The reasoning behind this formula is pretty straightforward: we know that sep≡pad(m) mod p and that seq≡pad(m) mod q. Since the computations are in GF(p) and GF(q) by Fermat's theorem this is equivalent to sep mod (p-1)≡pad(m) mod p and seq mod (q-1)≡pad(m) mod q. Thus we need to find e such that e ≡ ep mod (p-1) and e ≡ eq mod (q-1). However plugging it into the CRT formula

e = ( ((ep−eq) ((q-1)−1 mod (p-1) )) mod (p-1) )·(q-1) + eq

will fail because (q-1) is not invertible mod (p-1) as they are both even. I used the approach delineated in Section 4.1 of this paper to correctly tackle it.

One interesting nuance that @spdevlin doesn't explain is why the forged e is a valid RSA exponent. We made sure that

we found two suitable smooth primes p and q. You will recall that one of the conditions for candidate primes was

that both s and pad(m) be generators of the entire Zp* and Zq* groups

(i.e. that they are both primitive roots of Zp* and Zq* respectively):

s shouldn't be in any subgroup that pad(m) is not in. If it is, the discrete logarithm won't exist. The simplest thing to do is make sure they're both primitive roots.

This implies that ep (in s^ep = pad(m) mod p) is relatively prime with p-1 — the order of Zp*.

Analogously eq is relatively prime with q-1. This, in turn, implies that e is relatively prime with (p-1)·(q-1) the order

of group ZN* and hence a valid RSA exponent.

Thwarting DSKS attacks is trivial, the signer needs to attach their public key to the message before signing it. While

the verifier should do an extra check to ensure the public key they use to verify corresponds to the one added

to the message. This way, the signing public key is authenticated along with the message. On top of it, it makes sense

to pay attention to the public keys of RSA and be suspicious of public exponents e that are not among the commonly

used ones: { 3, 5, 17, 65537 }.

The challenge ends with the following invitation:

Since RSA signing and decryption are equivalent operations, you can use this same technique for other surprising results. Try generating a random (or chosen) ciphertext and creating a key to decrypt it to a plaintext of your choice!

I tackled it by:

- Generating an RSA key-pair with a 304-bit modulus. Let's call the resulting key-pair

legitRsa. - PKCS#1 v1.5 padding the plaintext message

id135: credentials invalidusing mode 2 encryption, as explained in Challenge 47. Let's call the result of this operationpadm. This will play the role of a legit plaintext. To remind, PKCS#1 v1.5 padding for encryption adds randomness so that padding the same plaintext message twice results in two different pads. - PKCS#1 v1.5 padding the plaintext message

id135: credentials valid!using mode 2 encryption. Let's call the result of this operationforgedPadm. This will play the role of a forged plaintext. - Encrypting

padmusinglegitRsapublic key. Let's call the result of this operationcTxt - Searching for an RSA key-pair of approximately the same size as the modulus of

legitRsaand for which the following equation holds: cTxt^forgedD≡forgedPadm (mod forgedN).

WhereforgedDis the private key of the forged RSA key-pair andforgedNis its RSA modulus. This search is done in exactly the same way as for the DSKS attack on RSA earlier in this challenge.

And it all works:

@Test

void challenge61RSAEncryption() {

RSAHelperExt rsa = new RSAHelperExt(RSAHelper.PUBLIC_EXPONENT, 152);

String plainTxt = "id135: credentials invalid", forgedPlainTxt = "id135: credentials valid!";

BigInteger padm = RSAHelperExt.pkcs15Pad(plainTxt.getBytes(), rsa.getPublicKey().getModulus().bitLength()),

cTxt = rsa.encrypt(padm),

forgedPadm = RSAHelperExt.pkcs15Pad(forgedPlainTxt.getBytes(),

rsa.getPublicKey().getModulus().bitLength());

assertArrayEquals(rsa.pkcs15Unpad(rsa.decrypt(cTxt)), plainTxt.getBytes());

RSAHelperExt forgedRsa = Set8.breakChallenge61RSA(forgedPadm, cTxt,

rsa.getPublicKey().getModulus().bitLength(), false);

byte[] pTxt = rsa.pkcs15Unpad(forgedRsa.decrypt(cTxt));

assertArrayEquals(pTxt, forgedPlainTxt.getBytes());

System.out.println("Decrypted ciphertext: " + new String(pTxt) );

}The unit test passes and outputs:

Decrypted ciphertext: id135: credentials valid!

Challenge 62 is an excellent example of what could happen if a cryptographic

primitive is used incorrectly. It is an egregious misnomer to call the random integer k used in DSA signing a nonce.

A nonce is a number used once. But there's an important caveat to it — a proper cryptographic algorithm expecting

a nonce should be secure even if an adversary gets to choose its nonces (provided they are all unique, of course).

DSA's k must be a cryptographically strong (i.e. unpredictable) uniformly distributed random number for the resulting

signing scheme to be secure. I will henceforth put DSA's nonce in quotation marks to accentuate that it cannot be treated

as a real nonce.

In this attack we get to see what can happen when "nonce" k is biased: its l least significant bits are zero. In this

case all signatures end up sharing the same "nonce" suffix 00000000. To make the attack closer to a real-world setting

I implemented the challenge using curve secp256k1.

This curve is used by Bitcoin, Etherium, and Ripple. The authors of this paper

found multiple cases of signatures with the same key whose "nonces" shared the same suffix. To quote:

256-bit nonces with shared 128-bit suffixes. 121 signatures were compromised by nonces that shared a 128-bit suffix with at least one other signature. 55 of these signatures were used with multisignature addresses and 66 were generated by non-multisignature addresses. 13 keys were compromised this way, which had generated a total of 224 signatures. There were 20 distinct suffixes that had been used by these keys. The earliest signature of this type that we found was from March 2015, and the most recent was from August 2018. Some of the keys were used with nonces that all shared the same suffix, and some were used with nonces of varying and occasionally unique suffixes.

Even though this is much more biased than the 8-bit shared suffixes we get to exploit in this challenge, it still highlights how practical this attack is.

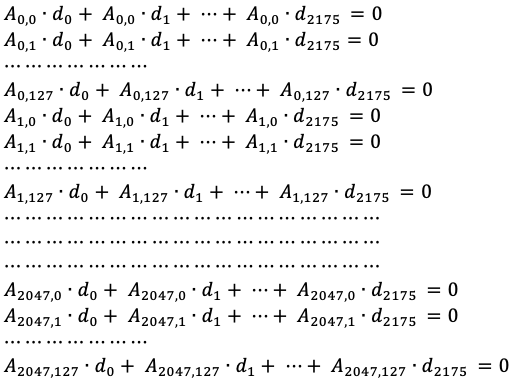

The explanation of the math behind the attack provided by @spdevlin is simply superb. There's one petty inaccuracy in the problem description: the lattice that needs to be constructed should look like

b1 = [ q 0 0 0 0 0 ... 0 0 0 ]

b2 = [ 0 q 0 0 0 0 ... 0 0 0 ]

b3 = [ 0 0 q 0 0 0 ... 0 0 0 ]

b4 = [ 0 0 0 q 0 0 ... 0 0 0 ]

b5 = [ 0 0 0 0 q 0 ... 0 0 0 ] (1)

b6 = [ 0 0 0 0 0 q ... 0 0 0 ]

... ...

bn = [ 0 0 0 0 0 0 ... q 0 0 ]

bt = [ t1 t2 t3 t4 t5 t6 ... tn ct 0 ]

bu = [ u1 u2 u3 u4 u5 u6 ... un 0 cu ]

and have dimension [n+2 x n+2] (in the problem description it is mistakenly shown to have dimension [n+2 x n+3]).

The main point to fathom is that the vector

bu - d·bt + m1·b1 + m2·b2 + ... + mn·bn (2)

is reasonably short and hence is likely to be present in the reduced basis we obtain for our lattice (1). Why is it short? Because early in the problem description we learnt that u - d·t + m·q ~ 0 or less than q/2l to be precise. This means that each element of (2) is less than q/2l and therefore the length of (2) is much shorter than the length of each of the vectors in our original lattice (1).

The implementation of the Gram-Schmidt orthogonalization process and the Lenstra-Lenstra-Lovasz basis reduction algorithm was fairly straightforward. I opted for infinite precision floating point arithmetic provided by Java's BigDecimal. I created a class with static methods for matrix operations over a field of reals and a simple unit test to verify that the main lattice operations work correctly:

@Test

void matrixOperationsOverFieldOfRealsForChallenge62() {

BigDecimal[][] basis = { { BigDecimal.valueOf(-2), BigDecimal.ZERO, BigDecimal.valueOf(2), BigDecimal.ZERO },

{ BigDecimal.valueOf(.5), BigDecimal.valueOf(-1), BigDecimal.ZERO, BigDecimal.ZERO },

{ BigDecimal.valueOf(-1), BigDecimal.ZERO, BigDecimal.valueOf(-2), BigDecimal.valueOf(.5) },

{ BigDecimal.valueOf(-1), BigDecimal.ONE, BigDecimal.ONE, BigDecimal.valueOf(2) }},

expectedReducedBasis = { { BigDecimal.valueOf(.5), BigDecimal.valueOf(-1), BigDecimal.ZERO, BigDecimal.ZERO },

{ BigDecimal.valueOf(-1), BigDecimal.ZERO, BigDecimal.valueOf(-2), BigDecimal.valueOf(.5) },

{ BigDecimal.valueOf(-.5), BigDecimal.ZERO, BigDecimal.ONE, BigDecimal.valueOf(2) },

{ BigDecimal.valueOf(-1.5), BigDecimal.valueOf(-1), BigDecimal.valueOf(2), BigDecimal.ZERO }},

orthogonalBasis = RealMatrixOperations.gramSchmidt(basis),

reducedBasis = RealMatrixOperations.lLL(basis, BigDecimal.valueOf(.99));

// Is the Gram-Schmidt orthogonalization process implemented correctly?

for (int i=0; i < orthogonalBasis.length; i++) {

for (int j=i+1; j < orthogonalBasis.length; j++) {

assertEquals(0, BigDecimal.ZERO.compareTo( /* The dot product of each pair of distinct vectors must be 0 */

RealMatrixOperations.innerProduct(orthogonalBasis[i], orthogonalBasis[j]).setScale(10, BigDecimal.ROUND_HALF_EVEN)));

}

}

// Is L^3-lattice basis reduction algorithm implemented correctly?

assertTrue(RealMatrixOperations.equals(expectedReducedBasis, reducedBasis));

}Creating a biased ECDSA signer was trivial too.

One nuance worth pointing out is the number of signatures required to recover the private key. @spdevlin writes:

I get good results with as few as 20 signatures. YMMV.

Well, the actual number of signatures required to assuredly recover the private key will depend on the curve chosen. Since I chose secp256k1 — a pretty advanced secure curve, my mileage turned out to be quite different indeed. I ended up needing 26 messages signed with the same key and different biased nonces. Moreover I had to increase the length of the shared suffix from 8 bits to 12. And, voilà, within half an hour I am able to recover the key:

Extracted private key: 0x59dc17a4bc3b63a7df0b0cde5d58119caa1b2c711ef46fa59735d8f7fe09e9d1

Actual private key: 0x59dc17a4bc3b63a7df0b0cde5d58119caa1b2c711ef46fa59735d8f7fe09e9d1

The code of the main test is pretty compact:

@Test

void challenge62() {

// Using Bitcoin's secp256k1

WeierstrassECGroup secp256k1 = new WeierstrassECGroup(CURVE_SECP256K1_PRIME, ZERO, valueOf(7), CURVE_SECP256K1_ORDER);

BigInteger baseX = new BigInteger("79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798", 16);

WeierstrassECGroup.ECGroupElement secp256k1Base = secp256k1.createPoint(baseX, secp256k1.mapToY(baseX));

BigInteger q = secp256k1.getCyclicOrder();

// Check whether the curve behaves as expected

assertEquals(secp256k1Base.inverse(), secp256k1Base.scale(q.subtract(ONE)));

assertEquals(secp256k1.getIdentity(), secp256k1Base.scale(q));

assertEquals(secp256k1.getIdentity(), secp256k1Base.combine(secp256k1Base.inverse()));

int l = 12; /* The number of least significant bits in k that will be 0 */

BiasedECDSA ecdsa = new BiasedECDSA(secp256k1Base, q, l);

int numMsgs = 26; // Each call to getPlainText(6) returns random plaintext 2^6 bytes long

BigInteger[][] tuPairs = IntStream.range(0, numMsgs).mapToObj(x -> Set8.getPlainText(6)).map(m -> {

BigInteger[] tuPair = new BigInteger[2];

DSAHelper.Signature sign = ecdsa.sign(m);

// t = r / (s*2^l)

tuPair[0] = sign.getR().multiply(sign.getS().multiply(ONE.shiftLeft(l)).modInverse(q)).mod(q);

// u = H(m) / (-s*2^l)

tuPair[1] = hashAsBigInteger(m).multiply(sign.getS().negate().multiply(ONE.shiftLeft(l)).modInverse(q)).mod(q);

return tuPair;

}).toArray(BigInteger[][]::new);

LatticeAttackHelper helper = new LatticeAttackHelper(tuPairs, q, l);

BigInteger pk = helper.extractKey();

System.out.printf("Extracted private key:\t0x%x%nActual private key:\t\t0x%x%n", pk, ecdsa.getPrivateKey());

assertEquals(ecdsa.getPrivateKey(), pk);

}Challenge 63 consists of six parts:

- Implementing GF(2128) — Polynomial Galois field over GF(2)

- Implementing Galois Counter Mode (GCM) where the earlier devised GF(2128) is used to calculate the one-time-MAC — GMAC

- Implementing a polynomial ring over GF(2128)

- Solving the problem of factoring polynomials

- Realising the actual attack of recovering the authentication key of GMAC provided a nonce was repeated

- Asking yourself a question of what you can do with the recovered authentication key

All in all it is a fairly laborious challenge that took me quite some time to complete. The effort is commensurate to a university coursework. On the other hand it helped me consolidate my understanding of finite fields and polynomial rings like no text book would ever permit.

I came up with a fairly straightforward implementation of GF(2128) using Java's BigInteger. See com.cryptopals.set_8.PolynomialGaloisFieldOverGF2 for details.

A correct implementation of GCM turned out a bit more tricky to get right. Here are a couple of important nuances to bear in mind:

- When preparing a buffer over which to calculate the GMAC

a0 || a1 || c0 || c1 || c2 || len(AD) || len(C)everything must be encoded using a big-endian ordering. Padding is done with zero bits appended. I found this document from NIST to to be a good reference. - When converting blocks of plain text into elements of GF(2128) and vice versa, the following enjoinder from @spdevlin is crucial

We can convert a block into a field element trivially; the leftmost bit is the coefficient of x^0, and so on.

At the end all fell into place and I was able to confirm my implementation of the GCM to produce the same results as that from the JRE:

@Test

void GCM() {

KeyGenerator aesKeyGen = KeyGenerator.getInstance("AES");

SecretKey key = aesKeyGen.generateKey();

GCM gcm = new GCM(key);

byte[] nonce = new byte[12], plnText = CHALLENGE56_MSG.getBytes(), cTxt1, cTxt2, assocData = new byte[0];

new SecureRandom().nextBytes(nonce);

cTxt1 = gcm.cipher(plnText, assocData, nonce);

// Confirm that we get the same ciphertext as that obtained from a reference implementation.

Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");

// Create GCMParameterSpec

GCMParameterSpec gcmParameterSpec = new GCMParameterSpec(16 * 8, nonce);

cipher.init(Cipher.ENCRYPT_MODE, key, gcmParameterSpec);

cTxt2 = cipher.doFinal(plnText);

assertArrayEquals(cTxt2, cTxt1);

// Confirm that decrypting will produce the original plain text

assertArrayEquals(plnText, gcm.decipher(cTxt1, assocData, nonce));

// Confirm that garbling a single byte of cipher text will result in the bottom symbol

cTxt1[0] ^= 0x03;

assertArrayEquals(null, gcm.decipher(cTxt1, assocData, nonce));

}Instead of implementing a polynomial ring over GF(2128) I decided to implement it as a generic class over any finite field:

public interface FiniteFieldElement {

FiniteFieldElement add(FiniteFieldElement e);

FiniteFieldElement subtract(FiniteFieldElement e);

/**

* Computes this + this + ... + this {@code k} times

* @return an object of the implementing class.

*/

FiniteFieldElement times(BigInteger k);

FiniteFieldElement multiply(FiniteFieldElement e);

FiniteFieldElement modInverse();

/**

* Computes this * this * ... * this {@code k} times, i.e. computes this<sup>k</sup>

* @return an object of the implementing class.

*/

FiniteFieldElement scale(BigInteger k);

FiniteFieldElement getAdditiveIdentity();

FiniteFieldElement getMultiplicativeIdentity();

BigInteger getOrder();

BigInteger getCharacteristic();

}So as to test my implementation of polynomial rings, I wrote a class representing GF(Zp) fields. It is much easier to reason about Zp arithmetic than arithmetic in GF(2128).

This entailed working out:

- Division of polynomials: https://en.wikipedia.org/wiki/Polynomial_long_division#Pseudocode

- Differentiation of polynomials

- GCD for polynomials

- Square-free factorization of polynomials: https://en.wikipedia.org/wiki/Factorization_of_polynomials_over_finite_fields#Square-free_factorization

- Distinct-degree factorization of polynomials: https://en.wikipedia.org/wiki/Factorization_of_polynomials_over_finite_fields#Distinct-degree_factorization

- Equal-degree factorization of polynomials: https://en.wikipedia.org/wiki/Factorization_of_polynomials_over_finite_fields#Equal-degree_factorization

Of these problems I spent the most time getting distinct-degree factorization to work. The first obstacle I faced was my earlier decision to represent polynomials as arrays of coefficients. This algorithm requires dealing with polynomials whose degree is the order of the field and higher, which turns out to be 2128 for this field. E.g. a polynomial like this one: x2128 - x = x340282366920938463463374607431768211456 + x in GF(2128). To tackle it I switched to representing polynomials in a way that stores only their non-zero coefficients.

The second obstacle was the awful running time of the Distinct-degree factorization algorithm from Wikipedia. It has a running time of O(q) where q is the order of GF(2128), which takes forever. I tackled it by adopting a Distinct-degree factorization algorithm that uses repeated squaring.

Equal-degree factorization, while fairly well delineated by @spdevlin, presented a couple of difficulties too. To start

with, calculating

g := h^((q^d - 1)/3) - 1 mod f

as specified in the problem description will take forever for the very same reason as I indicated above —

the order (qd-1)/3 will be too large. You need to raise to this high a power by constantly taking modulus

of f in your exponentiation routine. I solved it by implementing a scaleMod method on my Polynomial Ring class.

The other difficulty is that the square-free polynomial without distinct-degree factors that you pass to your edf implementation might not have factors of the degree you specify. This would lead to the algorithm running ad infinitum... For example in my approach I always call edf with a desired degree of factors being 1. While the setting guarantees the presence of at least one factor of degree 1 for the original polynomial, there's no guarantee that each polynomial spewed out by distinct-degree factorization can be factoed in one-degee polynomials. I dealt with this predicament by setting a heuristic limit on the maximum number of passes through the loop in my edf implementation. When the polynomial passed to edf has factors of requested degree, this heuristic limit will not halt the loop without finding the factors with a probability close to 1.

// maxPasses ensures the method doesn't hang if this polynomial can't be factored into d-degree polynomials

int maxPasses = 5 * (int) Math.ceil((32 - Integer.numberOfLeadingZeros(r)) * 2.5);All the hard work on implementing square-free factorization, distinct-degree factorization, end equal-degree factorization can finally be brought to bear:

KeyGenerator aesKeyGen = KeyGenerator.getInstance("AES");

SecretKey key = aesKeyGen.generateKey();

GCM gcm = new GCM(key);

byte[] nonce = new byte[12], plnText = "crazy flamboyant for the rap enjoyment".getBytes(),

plnText2 = "dummy text to try".getBytes(),

cTxt1, cTxt2, assocData = "valid assoc.Data".getBytes();

new SecureRandom().nextBytes(nonce);

// a0 || a1 || c0 || c1 || c2 || (len(AD) || len(C)) || t

cTxt1 = gcm.cipher(plnText, assocData, nonce);

// Reusing the same nonce, thereby making ourselves vulnerable to the attack.

cTxt2 = gcm.cipher(plnText2, assocData, nonce);

PolynomialRing2<PolynomialGaloisFieldOverGF2.FieldElement> poly1 = GCM.toPolynomialRing2(cTxt1, assocData),

poly2 = GCM.toPolynomialRing2(cTxt2, assocData),

equation = poly1.add(poly2).toMonicPolynomial();

System.out.println("cTxt1 polynomial: " + poly1);

System.out.println("cTxt2 polynomial: " + poly2);

System.out.println("Equation: " + equation);

List<PolynomialRing2<PolynomialGaloisFieldOverGF2.FieldElement>>

allFactors = equation.squareFreeFactorization().stream().map(PolynomialRing2.PolynomialAndPower::getFactor)

.flatMap(x -> x.distinctDegreeFactorization().stream()).collect(Collectors.toList()),

oneDegreeFactors = allFactors.stream().filter(x -> x.intDegree() == 1).collect(Collectors.toList()),

oneDegreeFactorsThroughEdf = allFactors.stream().filter(x -> x.intDegree() > 1)

.flatMap(x -> x.equalDegreeFactorization(1).stream()).collect(Collectors.toList());

System.out.println("Actual authentication key: " + gcm.getAuthenticationKey());

System.out.println("Candidates found after distinct-degree factorization: " + oneDegreeFactors);

System.out.println("Additional candidates found after equal-degree factorization: " + oneDegreeFactorsThroughEdf);

oneDegreeFactors.addAll(oneDegreeFactorsThroughEdf);

List<PolynomialGaloisFieldOverGF2.FieldElement> candidateAuthenticationKeys =

oneDegreeFactors.stream().map(x -> x.getCoef(0)).collect(Collectors.toList());

assertTrue(candidateAuthenticationKeys.contains(gcm.getAuthenticationKey()));Running it produces the following output:

cTxt1 polynomial: 862e862274c6f6cece8604269636866ex^5 + a2c92e99dba07ce117b3bb3665fedff9x^4 + f4551f6d035a339b2e5b061ca2830ce4x^3 + 320f10f267edx^2 + c800000000000000100000000000000x + 5aafa98bf7b25cfe22f5e630f97d59e9

cTxt2 polynomial: 862e862274c6f6cece8604269636866ex^4 + c291acf103e2e47987fbbb368dce3f19x^3 + 7ex^2 + 11000000000000000100000000000000x + b3180499d9b2e60566ac9c204aad7ff

Equation: x^5 + 597419e85ea532a59c4d0eed034a9044x^4 + 812f7801991ead15d455a70fcb83086fx^3 + 87c24da8d5f25ea3e9f92b8c9b319712x^2 + 10ec19974d245b5f16890e6a1effeec8x + e4cb0203ef19430f3c13947f6f6d17a7

Actual authentication key: 67cf01239432c85151d9f7c021bfd121

Candidates found after square-free and distinct-degree factorization: []

Additional candidates found after equal-degree factorization: [x + 67cf01239432c85151d9f7c021bfd121, x + e8cde43205a09d05379422572e11dfb5]

Having gone to the lengths of completing this Herculean labour of recovering the GMAC authentication key from a victim who naively encrypted two different plain texts with the same nonce, you might wonder what you can do with it. Well, you can forge a piece of distinct cipher text that the cryptosystem you attack will authenticate. In other words you can mount an existential forgery attack.

Imagine that t0 is the last block of the first cipher text you have cTxt1. Looking at the way it was calculated

t0 = a0*h^5 + c0*h^4 + c1*h^3 + c2*h^2 + l0*h + s

and noting that you have both a0 and h, you can go far. Say a'0 is a block of your bogus associated data you want to swap for

the legitimate block a0. What you do is first subtract a0*h^5 from t0 and then replace it with a block of bogus

associated data by adding a'0*h^5 to t0. Here's how it looks in my code:

/**

* Forges valid cipher text from legit cipher text and associated data coupled with a recovered authentication key.

* @param additionalBogusAssocData blocksize-long buffer, must be the same size as padded {@code legitAssocData}

*/

public static byte[] forgeCipherText(byte[] legitCipherText, byte[] legitAssocData, byte[] additionalBogusAssocData,

PolynomialGaloisFieldOverGF2.FieldElement authenticationKey) {

int plainTextLen = legitCipherText.length - BLOCK_SIZE,

assocDataPaddedLen = (legitAssocData.length / BLOCK_SIZE + (legitAssocData.length % BLOCK_SIZE != 0 ? 1 : 0)) * BLOCK_SIZE,

plainTextPaddedLen = (plainTextLen / BLOCK_SIZE + (plainTextLen % BLOCK_SIZE != 0 ? 1 : 0)) * BLOCK_SIZE,

lastPower = plainTextPaddedLen / BLOCK_SIZE + 1,

last = additionalBogusAssocData.length / BLOCK_SIZE;

if (additionalBogusAssocData.length != assocDataPaddedLen) {

throw new IllegalArgumentException("additionalBogusAssocData must be of same length as padded legit associated data and not "

+ additionalBogusAssocData.length);

}

// We start with the original legit tag...

PolynomialGaloisFieldOverGF2.FieldElement forgedTag = toFE(Arrays.copyOfRange(

legitCipherText, legitCipherText.length - BLOCK_SIZE, legitCipherText.length));

byte[] buf = new byte[assocDataPaddedLen];

System.arraycopy(legitAssocData, 0, buf, 0, legitAssocData.length);

// ... and then subtract from it the legit associated data and

// add to it bogus associated data.

for (int i=last; i > 0; i-=1) {

lastPower++;

// Remove the summand of the legit associated data

forgedTag = forgedTag.subtract(

toFE( Arrays.copyOfRange(legitAssocData, (last - 1) * BLOCK_SIZE, last * BLOCK_SIZE))

.multiply(authenticationKey.scale(valueOf(lastPower))) );

// And then add the summand of the bogus associate data

forgedTag = forgedTag.add(

toFE( Arrays.copyOfRange(additionalBogusAssocData, (last - 1) * BLOCK_SIZE, last * BLOCK_SIZE))

.multiply(authenticationKey.scale(valueOf(lastPower))) );

}

byte[] res = legitCipherText.clone();

System.arraycopy(forgedTag.asArray(), 0, res, legitCipherText.length - BLOCK_SIZE, BLOCK_SIZE);

return res;

}Giving it a spin:

cTxt1 polynomial: 862e862274c6f6cece8604269636866ex^5 + a2c92e99dba07ce117b3bb3665fedff9x^4 + f4551f6d035a339b2e5b061ca2830ce4x^3 + 320f10f267edx^2 + c800000000000000100000000000000x + 5aafa98bf7b25cfe22f5e630f97d59e9

cTxt2 polynomial: 862e862274c6f6cece8604269636866ex^4 + c291acf103e2e47987fbbb368dce3f19x^3 + 7ex^2 + 11000000000000000100000000000000x + b3180499d9b2e60566ac9c204aad7ff

Equation: x^5 + 597419e85ea532a59c4d0eed034a9044x^4 + 812f7801991ead15d455a70fcb83086fx^3 + 87c24da8d5f25ea3e9f92b8c9b319712x^2 + 10ec19974d245b5f16890e6a1effeec8x + e4cb0203ef19430f3c13947f6f6d17a7

Actual authentication key: 67cf01239432c85151d9f7c021bfd121

Candidates found after square-free and distinct-degree factorization: []

Additional candidates found after equal-degree factorization: [x + 67cf01239432c85151d9f7c021bfd121, x + e8cde43205a09d05379422572e11dfb5]

Recovered authentication key: 67cf01239432c85151d9f7c021bfd121

Legit associated data: valid assoc.Data

Bogus associated data: bogus assoc.Data

Legit cipher text: 9FFB7FA66CDDCDE8873E05DB997493452730C1453860DA74D9CC5AC0B6F8AA2FB7E64F08F04C979ABE9F0C67AF447F3A4DEFD195F55A

Forged cipher text: 9FFB7FA66CDDCDE8873E05DB997493452730C1453860DA74D9CC5AC0B6F8AA2FB7E64F08F04CDE9E643787D35A6765241FEC4324627A

Decrypted by the crypto system under attack into: crazy flamboyant for the rap enjoyment

Recovered authentication key: e8cde43205a09d05379422572e11dfb5

Legit associated data: valid assoc.Data

Bogus associated data: bogus assoc.Data

Legit cipher text: 9FFB7FA66CDDCDE8873E05DB997493452730C1453860DA74D9CC5AC0B6F8AA2FB7E64F08F04C979ABE9F0C67AF447F3A4DEFD195F55A

Forged cipher text: 9FFB7FA66CDDCDE8873E05DB997493452730C1453860DA74D9CC5AC0B6F8AA2FB7E64F08F04CD3355D1A58A0B6E730F4356A7027481F

Decrypted by the crypto system under attack into: ⊥

I am able to commit an existential forgery attack!

Challenge 64 implements an attack first outlined by Niels Ferguson in his Authentication weaknesses in GCM paper. GCM is the most popular standard for authenticated encryption and is used in TLS 1.2 and higher. To aid efficient fast implementations of GCM Intel even added a special new instruction PCLMULQDQ, which makes it easy to implement GCM's GHASH hash function. Of the different modes of authenticated encryption not encumbered by patents and certified by NIST, GCM is the fastest. It is faster than CCM and EAX. Moreover, with the help of Intel's PCLMULQDQ instruction for GHASH, it can be implemented with less code than would otherwise be required. All of these are the main reasons for GCM's popularity.

Niels's paper shows that the actual authentication security of GCM will be less than the number of bits in its authentication tag.

Given the maximum tag size of 128 bits, the best possible authentication security of GCM can be 128 - k bits where k

is ⌊log2(number-of-blocks-encrypted)⌋. Niels's paper shows that for smaller authentication tag

sizes, it will be worse than n - k bits because of some peculiarities of the GHASH one-time hash function that GCM uses.

Like Challenge 63, this challenge shows how to succeed at an existential forgery attack on GCM. This time without your adversary having made any mistakes in using GCM apart from choosing a small authentication tag size. Namely the minimum size allowed for GCM's authentication tag by NIST of 32 bits.

To tackle this challenge you will need to implement the following parts.

- Linear algebra routines for GF(2) and GF(2128):

- Implementing a vector representation for elements of GF(2128);

- Implementing a matrix representation for multiplication by a constant in GF(2128) and for squaring in GF(2128);

- Implementing basic operations for matrices in GF(2): addition, multiplication, scaling, transposition, Gaussian elimination, finding a kernel.

- Extraction and replacement of 2i-th blocks of ciphertext counting from the end, i.e. all blocks of the ciphertext that are the coefficients of x2^i (where i = 1, 2, ..., n) in the GHASH polynomial in the indeterminate x over GF(2128).

- Calculation of matrix Ad = ∑MDi(MS)i, where MDi are matrix representations of the differences between the 2i-th element of ciphertext and its forged counterpart. Along with the calculation of the dependency matrix T, as explained in the challenge.

- Finding the kernel of the matrix T, whose elements represent all the possible manipulations to the 2i-th blocks of ciphertext that don't change the most significant 16 bits of GHASH.

- Attempting an existential forgery attack on the smallest allowed GHASH tag size of 32 bits.

- Recovering the authentication key.

I added two new methods to my class for representing GF(2128) elements: PolynomialGaloisFieldOverGF2::FieldElement::asVector and PolynomialGaloisFieldOverGF2::createElement

Analogously to vector representation, I wrote these as methods of my class for GF(2128): PolynomialGaloisFieldOverGF2::FieldElement::asMatrix and PolynomialGaloisFieldOverGF2::getSquaringMatrix

I felt it would be an overkill to create a whole new class to represent matrices over GF(2), instead I went for

a simple representation as boolean[][] and the BooleanMatrixOperations class

with static methods that accept matrices and vectors in GF(2).

Time for some tests to validate that everything works correctly:

@DisplayName("Linear algebra over GF(2)") @Test

void linearAlgebraForChallenge64() {

BigInteger modulus = ONE.shiftLeft(128).or(valueOf(135));

PolynomialGaloisFieldOverGF2 gf = new PolynomialGaloisFieldOverGF2(modulus);

PolynomialGaloisFieldOverGF2.FieldElement c = gf.createElement(valueOf(3)), y = gf.createElement(valueOf(15));

assertEquals(c.multiply(y), gf.createElement(multiply(c.asMatrix(), y.asVector())) );

assertEquals(y.multiply(y), gf.createElement(multiply(gf.getSquaringMatrix(), y.asVector())) );

assertEquals(c, gf.createElement(multiply(c.asMatrix(), gf.getMultiplicativeIdentity().asVector())) );

assertEquals(y, gf.createElement(y.asVector()));

boolean[][][] mss = new boolean[18][][];

mss[0] = gf.getSquaringMatrix();

for (int i=1; i < 18; i++) {

mss[i] = multiply(mss[i-1], mss[0]);

}

assertEquals(y.scale(valueOf(2)), gf.createElement(multiply(mss[0], y.asVector()) ));

assertEquals(y.scale(valueOf(4)), gf.createElement(multiply(mss[1], y.asVector()) ));

assertEquals(y.scale(valueOf(8)), gf.createElement(multiply(mss[2], y.asVector()) ));