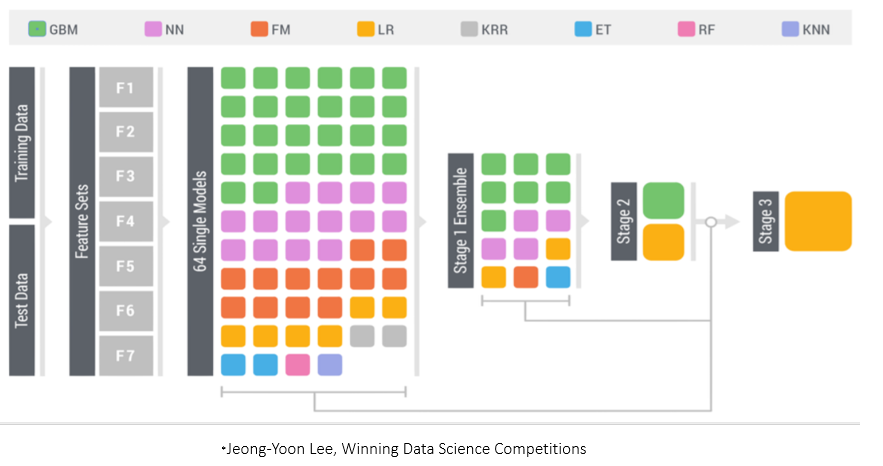

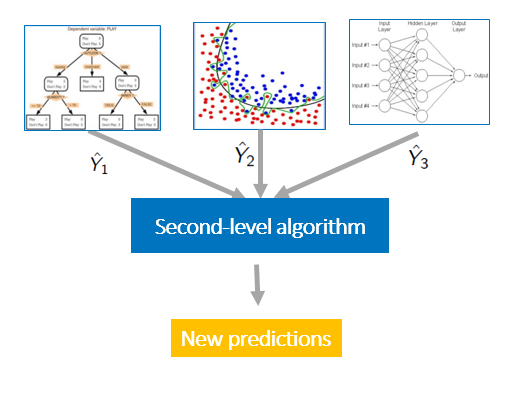

A single machine learning model is rarely enough. State-of-the-art systems typically combine several neural networks and machine learning algorithms. Different models return different results and accuracies depending on the dataset and on how much they overfit the training data. To counter overfitting, it is sometimes best to train a variety of machine learning models and then use the weighted average of their results as the final prediction.
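To make the weighted-average idea concrete, here is a minimal sketch in plain Python. The function name `weighted_average`, the sample predictions, and the weights are all hypothetical and chosen only for illustration; they are not taken from the dataset or from any trained model.

```python
def weighted_average(predictions, weights):
    """Blend per-model predictions into one prediction per sample.

    predictions: list of lists, one inner list of predictions per model.
    weights: one non-negative weight per model (normalized internally).
    """
    if len(predictions) != len(weights):
        raise ValueError("one weight per model is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n_samples = len(predictions[0])
    return [
        sum(w * preds[i] for w, preds in zip(weights, predictions)) / total
        for i in range(n_samples)
    ]


# Hypothetical sale-price predictions for three homes from three models.
xgb_preds = [200000.0, 150000.0, 320000.0]
svm_preds = [210000.0, 140000.0, 300000.0]
nn_preds = [190000.0, 160000.0, 310000.0]

# Assumed weights: trust the first model twice as much as the other two.
blended = weighted_average([xgb_preds, svm_preds, nn_preds], [0.5, 0.25, 0.25])
print(blended)
```

Because each model tends to err in a different direction, the blended value often sits closer to the true price than any single model's output, which is the motivation for averaging in the first place.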

In this example, we do some advanced data visualization to check for outliers, visualize null values, and extract feature importance from home sales data. We gain insight into which features matter most to the final sale price of a home, and we use three different types of machine learning models (XGBoost, SVM, and a neural network) to create an ensemble model, with a grid search, that returns the weighted average prediction.
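One way to read "an ensemble with a grid search" is as a search over the blend weights themselves: try every weight combination on a coarse grid and keep the one with the lowest validation error. The sketch below assumes that reading. The helper names (`rmse`, `blend`, `search_weights`), the `steps` parameter, and the validation numbers are hypothetical, and the tutorial's own grid search may instead tune each model's hyperparameters.

```python
import itertools
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def blend(predictions, weights):
    """Weighted sum of per-model predictions (weights assumed to sum to 1)."""
    return [
        sum(w * preds[i] for w, preds in zip(weights, predictions))
        for i in range(len(predictions[0]))
    ]


def search_weights(predictions, y_true, steps=10):
    """Try every weight tuple on a grid with spacing 1/steps that sums to 1."""
    best_weights, best_score = None, float("inf")
    for combo in itertools.product(range(steps + 1), repeat=len(predictions)):
        if sum(combo) != steps:
            continue  # keep only combinations whose weights sum to 1
        weights = tuple(c / steps for c in combo)
        score = rmse(y_true, blend(predictions, weights))
        if score < best_score:
            best_weights, best_score = weights, score
    return best_weights, best_score


# Hypothetical validation targets and per-model predictions.
y_val = [100.0, 200.0, 300.0]
model_a = [100.0, 200.0, 300.0]
model_b = [110.0, 190.0, 310.0]
model_c = [90.0, 220.0, 280.0]

best_weights, best_score = search_weights([model_a, model_b, model_c], y_val)
print(best_weights, best_score)
```

The weights should be chosen on held-out validation data rather than on the training set; otherwise the search simply rewards whichever model overfits the most.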

The dataset in its entirety can be found here: https://www.kaggle.com/c/5407/download-all

- KaizenTek - IT Consulting & Cloud Professional Services