This is an induction motor fault detection project implemented with TensorFlow. It uses a stacking ensemble (Random Forest, Support Vector Machine, Deep Neural Network and Logistic Regression) trained on the Machinery Fault Dataset available on Kaggle.

This database is composed of 1951 multivariate time series acquired by sensors on a SpectraQuest's Machinery Fault Simulator (MFS) Alignment-Balance-Vibration (ABVT). The 1951 sequences comprise six different simulated states: normal function, imbalance fault, horizontal and vertical misalignment faults, and inner and outer bearing faults. This section describes the database. For more information, see http://www02.smt.ufrj.br/~offshore/mfs/page_01.html

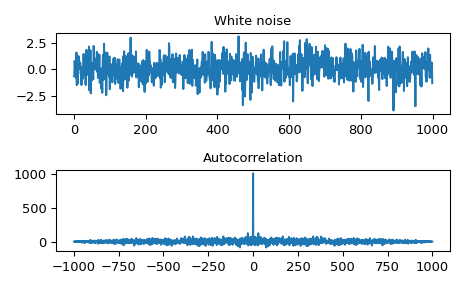

FFT convolution uses the principle that multiplication in the frequency domain corresponds to convolution in the time domain. The input signal is transformed into the frequency domain using the DFT, multiplied by the frequency response of the filter, and then transformed back into the time domain using the Inverse DFT.
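
The principle above can be checked numerically. The following is a minimal NumPy sketch, not the project's own preprocessing code: the function name `fft_convolve`, the random test signal and the 8-tap moving-average filter are all hypothetical choices made for illustration. Both inputs are zero-padded to the full output length so that the product of spectra gives a linear rather than circular convolution.

```python
import numpy as np


def fft_convolve(signal, kernel):
    """Linear convolution of two 1-D real sequences via the FFT."""
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    # A full linear convolution has len(signal) + len(kernel) - 1 samples.
    # Zero-padding both inputs to that length avoids circular wrap-around.
    n = signal.size + kernel.size - 1
    # Multiply the two spectra, then transform back to the time domain.
    spectrum = np.fft.rfft(signal, n) * np.fft.rfft(kernel, n)
    return np.fft.irfft(spectrum, n)


rng = np.random.default_rng(0)
x = rng.standard_normal(1000)   # hypothetical stand-in for a sensor signal
h = np.ones(8) / 8.0            # hypothetical 8-tap moving-average filter

y_fft = fft_convolve(x, h)
y_direct = np.convolve(x, h)    # time-domain reference

# The two results should agree up to floating-point error.
print(np.allclose(y_fft, y_direct))
```

For long signals and filters the FFT route is usually faster than direct convolution, which is the practical reason to prefer it.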

Stacking, or Stacked Generalization, is an ensemble machine learning algorithm. It uses a meta-learning algorithm to learn how to best combine the predictions from two or more base machine learning algorithms. The benefit of stacking is that it can harness the capabilities of a range of well-performing models on a classification or regression task and make predictions that can outperform any single model in the ensemble. The architecture of a stacking model involves two or more base models, often referred to as level-0 models, and a meta-model that combines the predictions of the base models, referred to as a level-1 model.

- Level-0 Models (Base-Models): Models fit on the training data and whose predictions are compiled.

- Level-1 Model (Meta-Model): Model that learns how to best combine the predictions of the base models.
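
As a rough sketch of this two-level layout, the example below uses scikit-learn's `StackingClassifier`. It is not the project's training code and rests on several assumptions: `MLPClassifier` stands in for the TensorFlow deep neural network, Logistic Regression is assumed to be the level-1 model (the description does not say which model plays that role), and the data is synthetic rather than taken from the Machinery Fault Dataset.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in data (assumption): 3 classes, 20 features.
X, y = make_classification(n_samples=600, n_features=20, n_informative=8,
                           n_classes=3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Level-0 (base) models. The SVM and the neural network are scaled first.
level0 = [
    ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
    ("svm", make_pipeline(StandardScaler(),
                          SVC(probability=True, random_state=0))),
    ("mlp", make_pipeline(StandardScaler(),
                          MLPClassifier(hidden_layer_sizes=(32, 16),
                                        max_iter=500, random_state=0))),
]

# Level-1 (meta) model: learns how to combine the base predictions.
stack = StackingClassifier(estimators=level0,
                           final_estimator=LogisticRegression(max_iter=1000),
                           cv=5)
stack.fit(X_train, y_train)

test_accuracy = stack.score(X_test, y_test)
print(f"stacked test accuracy: {test_accuracy:.3f}")
```

Passing `cv=5` makes scikit-learn train the meta-model on cross-validated predictions of the base models instead of predictions on data they were fitted on.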

The meta-model is trained on the predictions made by base models on out-of-sample data. That is, data not used to train the base models is fed to the base models, predictions are made, and these predictions, along with the expected outputs, provide the input and output pairs of the training dataset used to fit the meta-model.
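
The out-of-sample step can also be written out by hand. This is a minimal sketch under stated assumptions: it uses scikit-learn's `cross_val_predict` on synthetic data, with two base models only, and the variable names (`base_models`, `meta_X`, `meta_model`) are illustrative rather than taken from the project.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict
from sklearn.svm import SVC

# Synthetic stand-in data (assumption): 300 samples, 3 classes.
X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           n_classes=3, random_state=0)

base_models = [
    RandomForestClassifier(n_estimators=30, random_state=0),
    SVC(probability=True, random_state=0),
]

# Out-of-fold predictions: each row is predicted by a model copy that was
# fitted on the other folds, so it never saw that row during training.
oof_blocks = [
    cross_val_predict(model, X, y, cv=5, method="predict_proba")
    for model in base_models
]

# Inputs of the meta-model: base predictions side by side.
# Outputs of the meta-model: the expected labels y.
meta_X = np.hstack(oof_blocks)
meta_model = LogisticRegression(max_iter=1000).fit(meta_X, y)

# For inference, the base models are refitted on all the training data.
for model in base_models:
    model.fit(X, y)

new_meta_X = np.hstack([model.predict_proba(X[:5]) for model in base_models])
print(meta_model.predict(new_meta_X))
```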

The outputs from the base models used as input to the meta-model may be real values in the case of regression, and probability values, probability-like values, or class labels in the case of classification.
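
To make the difference between probabilities and class labels concrete, the sketch below compares the shape of the meta-model's input for both choices, using the `stack_method` parameter of scikit-learn's `StackingClassifier`. The helper `meta_feature_shape`, the choice of base models and the synthetic data are assumptions for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (assumption): 300 samples, 3 classes.
X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           n_classes=3, random_state=0)


def meta_feature_shape(stack_method):
    """Fit a small stack and return the shape of the meta-model's input."""
    base = [
        ("rf", RandomForestClassifier(n_estimators=30, random_state=0)),
        ("tree", DecisionTreeClassifier(max_depth=4, random_state=0)),
    ]
    stack = StackingClassifier(
        estimators=base,
        final_estimator=LogisticRegression(max_iter=1000),
        stack_method=stack_method,
        cv=3,
    )
    stack.fit(X, y)
    # transform() returns the base-model outputs fed to the meta-model.
    return stack.transform(X).shape


# Probabilities: one column per class and per base model.
proba_shape = meta_feature_shape("predict_proba")
# Class labels: a single column per base model.
label_shape = meta_feature_shape("predict")
print(proba_shape, label_shape)
```

Probabilities carry more information per base model (how confident it is in each class), which is often why they are preferred over hard labels as meta-features.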

| | precision | recall | f1-score | support |
|---|---:|---:|---:|---:|
| normal | 0.99 | 0.99 | 0.99 | 24692 |
| imbalance 6g | 1.00 | 1.00 | 1.00 | 24369 |
| imbalance 10g | 1.00 | 1.00 | 1.00 | 24107 |
| imbalance 15g | 0.95 | 0.95 | 0.95 | 23728 |
| imbalance 20g | 0.96 | 0.95 | 0.95 | 24588 |
| imbalance 25g | 0.95 | 0.95 | 0.95 | 23588 |
| imbalance 30g | 0.98 | 0.97 | 0.97 | 23381 |
| imbalance 35g | 0.98 | 0.98 | 0.98 | 22544 |
| accuracy | | | 0.97 | 190997 |
| macro avg | 0.97 | 0.97 | 0.97 | 190997 |
| weighted avg | 0.97 | 0.97 | 0.97 | 190997 |

| | precision | recall | f1-score | support |
|---|---:|---:|---:|---:|
| normal | 0.99 | 1.00 | 0.99 | 24725 |
| imbalance 6g | 1.00 | 1.00 | 1.00 | 24553 |
| imbalance 10g | 1.00 | 1.00 | 1.00 | 23819 |
| imbalance 15g | 0.96 | 0.96 | 0.96 | 24007 |
| imbalance 20g | 0.96 | 0.96 | 0.96 | 24472 |
| imbalance 25g | 0.96 | 0.96 | 0.96 | 23308 |
| imbalance 30g | 0.98 | 0.98 | 0.98 | 23576 |
| imbalance 35g | 0.98 | 0.98 | 0.98 | 22537 |
| accuracy | | | 0.98 | 190997 |
| macro avg | 0.98 | 0.98 | 0.98 | 190997 |
| weighted avg | 0.98 | 0.98 | 0.98 | 190997 |
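
The summary rows can be re-derived from the per-class rows. The sketch below does this for the f1-score column of the first report, assuming the usual convention (as in scikit-learn's `classification_report`) that "macro avg" is the unweighted mean over classes and "weighted avg" is the mean weighted by support. Since the inputs are the rounded values shown above, the results only match to two decimals.

```python
# (class name, f1-score, support) copied from the first report.
rows = [
    ("normal",        0.99, 24692),
    ("imbalance 6g",  1.00, 24369),
    ("imbalance 10g", 1.00, 24107),
    ("imbalance 15g", 0.95, 23728),
    ("imbalance 20g", 0.95, 24588),
    ("imbalance 25g", 0.95, 23588),
    ("imbalance 30g", 0.97, 23381),
    ("imbalance 35g", 0.98, 22544),
]

total_support = sum(support for _, _, support in rows)

# Macro average: every class counts equally.
macro_f1 = sum(f1 for _, f1, _ in rows) / len(rows)

# Weighted average: every class counts in proportion to its support.
weighted_f1 = sum(f1 * support for _, f1, support in rows) / total_support

print(total_support, round(macro_f1, 2), round(weighted_f1, 2))
```

Because the class supports are close to each other here, the macro and weighted averages are nearly identical.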