This Web Scraping Template provides you with a great starting point when creating web scraping bots.

This template can be utilized in various scenarios, including:

-

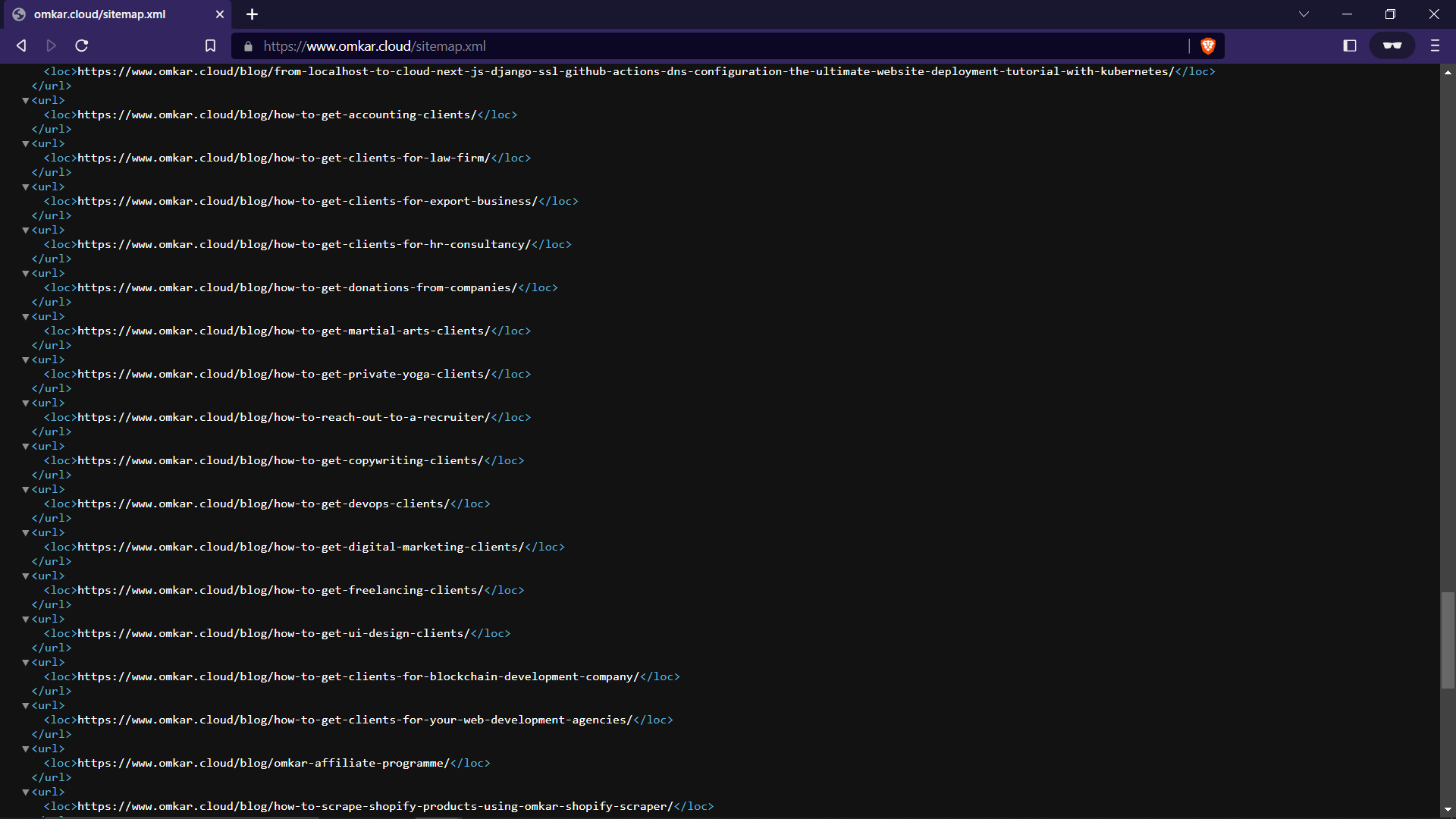

Scraping articles from a blog, like the Omkar Cloud Blog.

-

Extracting product information from e-commerce stores, for example, by scraping products from Amazon.

-

Gathering items from paginated lists, such as extracting product details from g2.

1️⃣ Clone the Magic 🧙‍♀️:

    git clone https://github.com/omkarcloud/web-scraping-template
    cd web-scraping-template

2️⃣ Install Dependencies 📦:

    python -m pip install -r requirements.txt

3️⃣ Write Code to scrape your target website. 🤖

4️⃣ Run Scraper 😎:

    python main.py

Here are some best practices for web scraping:

- Instead of individually visiting each page to gather links, it is advisable to search for pagination links within sitemaps or RSS feeds. In most cases, these sources provide all links in an organized manner.

- Make the bot look human by adding random waits using methods like `driver.short_random_sleep` and `driver.long_random_sleep`.
- If you need to scrape a large amount of data in a short time, consider using proxies to prevent IP-based blocking.
- If you are responsible for maintaining the scraper in the long run, avoid hash-based selectors (selectors that rely on auto-generated class names). These selectors are likely to break with the next build of the website, resulting in increased maintenance work.
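As a rough illustration of the sitemap tip above, here is a minimal sketch that pulls every link out of a sitemap using only the Python standard library. The function name `extract_sitemap_links` and the sample sitemap are hypothetical and not part of this template; the sketch assumes the sitemap follows the standard sitemaps.org format and that you have already downloaded its XML text.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def extract_sitemap_links(xml_text: str) -> List[str]:
    """Return every <loc> URL found in a sitemap (or sitemap index) document.

    Hypothetical helper for illustration; not part of the template.
    """
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.iterfind(".//sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Made-up sample data standing in for a downloaded sitemap.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/blog/post-1</loc></url>
  <url><loc>https://example.com/blog/post-2</loc></url>
</urlset>"""

if __name__ == "__main__":
    for link in extract_sitemap_links(SAMPLE_SITEMAP):
        print(link)
```

The resulting list can be fed straight into the scraper, so the bot never has to click through pagination just to discover the links.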

Note that most websites do not implement bot protection, as many frontend developers are not taught it in their courses.

So it is recommended to add IP rotation or random waits only if you are actually getting blocked.
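One way to follow this advice is to wait only after a request looks blocked. The sketch below is an assumption-laden illustration, not the template's API: `fetch_with_waits`, the `fetch` callable, and the choice of HTTP 403 and 429 as "blocked" signals are all hypothetical, and a real site may signal blocking differently (for example with a CAPTCHA page).

```python
import random
import time
from typing import Callable

# Assumption: these HTTP status codes indicate that the site is blocking us.
BLOCKED_STATUSES = {403, 429}


def fetch_with_waits(
    fetch: Callable[[str], int],
    url: str,
    max_retries: int = 3,
    min_wait: float = 2.0,
    max_wait: float = 6.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call fetch(url) and return its status code.

    A random wait is added only after a blocked response, never up front.
    Hypothetical helper for illustration; not part of the template.
    """
    status = fetch(url)
    for _ in range(max_retries):
        if status not in BLOCKED_STATUSES:
            break  # not blocked, so no wait is needed
        sleep(random.uniform(min_wait, max_wait))
        status = fetch(url)
    return status


if __name__ == "__main__":
    # Fake fetcher that is blocked once, then succeeds.
    responses = iter([429, 200])
    status = fetch_with_waits(lambda _url: next(responses), "https://example.com",
                              min_wait=0.1, max_wait=0.2)
    print(status)
```

Passing `sleep` as a parameter keeps the helper easy to test, and an unblocked site pays no delay at all.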