pggb builds pangenome variation graphs from a set of input sequences.

A pangenome variation graph is a kind of generic multiple sequence alignment. It lets us understand any kind of sequence variation between a collection of genomes. It shows us similarity where genomes walk through the same parts of the graph, and differences where they do not.

pggb generates this kind of graph using an all-to-all alignment of input sequences (wfmash), graph induction (seqwish), and progressive normalization (smoothxg, gfaffix).

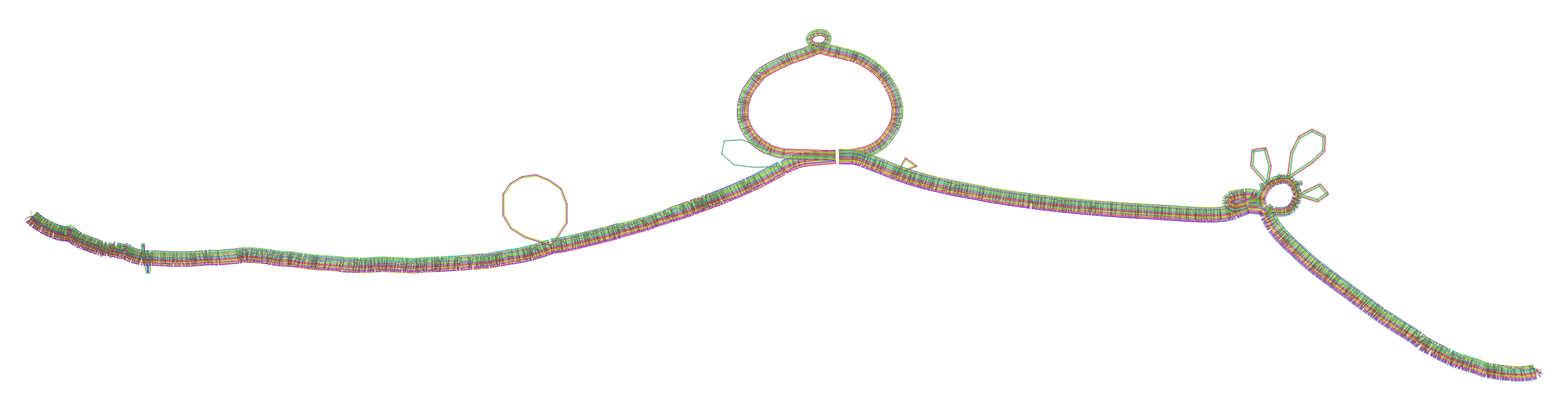

After construction, pggb generates diagnostic visualizations of the graph (odgi).

A variant call report (in VCF) representing both small and large variants can be generated based on any reference genome included in the graph (vg).

pggb writes its output in GFAv1 format, which can be used as input by numerous "genome graph" and pangenome tools, such as the vg and odgi toolkits.

pggb has been tested at scale in the Human Pangenome Reference Consortium (HPRC) as a method to build a graph from the draft human pangenome.

Documentation at https://pggb.readthedocs.io/ and pggb manuscript (WIP).

-

Install

pggbwith Docker, Singularity, bioconda, guix, or by manually building its dependencies. -

Put your sequences in one FASTA file (

in.fa), optionally compress it withbgzip, and index it withsamtools faidx. If you have many samples and/or haplotypes, we recommend using the PanSN prefix naming pattern. -

[OPTIONAL] If you have whole-genome assemblies, you might consider partitioning your sequences into communities, which usually correspond to the different chromosomes of the genomes. Then, you can run

pggbon each community (set of sequences) independently (see partition before pggb). -

To build a graph from

in.fa, which contains, for example, 9 haplotypes, in the directoryoutput, scaffolding the graph using 5kb matches at >= 90% identity, and using 16 parallel threads for processing, execute:

pggb -i in.fa \ # input file in FASTA format

-o output \ # output directory

-n 9 \ # number of haplotypes (optional with PanSN-spec)

-t 16 \ # number of threads

-p 90 \ # minimum average nucleotide identity for segments

-s 5k \ # segment length for scaffolding the graph

-V 'ref:1000' # make a VCF against "ref" decomposing variants <= 1000bpThe final output will match outdir/in.fa.*final.gfa.

By default, we render 1D and 2D visualizations of the graph with odgi, which are very useful to understand the result of the build.

See also this step-by-step example for more information.

In the above example, to partition your sequences into communities, execute:

partition-before-pggb -i in.fa \ # input file in FASTA format

-o output \ # output directory

-n 9 \ # number of haplotypes (optional with PanSN-spec)

-t 16 \ # number of threads

-p 90 \ # minimum average nucleotide identity for segments

-s 5k \ # segment length for scaffolding the graph

-V 'ref:1000' # make a VCF against "ref" decomposing variants <= 1000bpThis generates the command lines to run pggb on each community (2 in this example) independently:

pggb -i output/in.fa.dd9e519.community.0.fa \

-o output/in.fa.dd9e519.community.0.fa.out \

-p 5k -l 25000 -p 90 -n 9 -K 19 -F 0.001 \

-k 19 -f 0 -B 10000000 \

-H 9 -j 0 -e 0 -G 700,900,1100 -P 1,19,39,3,81,1 -O 0.001 -d 100 -Q Consensus_ \

-V ref:1000 --threads 16 --poa-threads 16

pggb -i output/in.fa.dd9e519.community.1.fa \

-o output/in.fa.dd9e519.community.1.fa.out \

-p 5k -l 25000 -p 90 -n 9 -K 19 -F 0.001 \

-k 19 -f 0 -B 10000000 \

-H 9 -j 0 -e 0 -G 700,900,1100 -P 1,19,39,3,81,1 -O 0.001 -d 100 -Q Consensus_ \

-V ref:1000 --threads 16 --poa-threads 16Se also the sequence partitioning tutorial for more information

Erik Garrison*, Andrea Guarracino*, Simon Heumos, Flavia Villani, Zhigui Bao, Lorenzo Tattini, Jörg Hagmann, Sebastian Vorbrugg, Santiago Marco-Sola, Christian Kubica, David G. Ashbrook, Kaisa Thorell, Rachel L. Rusholme-Pilcher, Gianni Liti, Emilio Rudbeck, Sven Nahnsen, Zuyu Yang, Mwaniki N. Moses, Franklin L. Nobrega, Yi Wu, Hao Chen, Joep de Ligt, Peter H. Sudmant, Nicole Soranzo, Vincenza Colonna, Robert W. Williams, Pjotr Prins, Building pangenome graphs, bioRxiv 2023.04.05.535718; doi: https://doi.org/10.1101/2023.04.05.535718

Pangenome graphs let us understand multi-way relationships between many genomes.

They are thus models of many-way sequence alignments.

A goal of pggb is to reduce the complexity of making these alignments, which are notoriously difficult to design.

The overall structure of pggb's output graph is defined by two parameters: segment length (-s) and pairwise identity (-p).

Segment length defines the seed length used by the "MashMap3" homology mapper in wfmash.

The pairwise identity is the minimum allowed pairwise identity between seeds, which is estimated using a mash-type approximation based on k-mer Jaccard.

Mappings are initiated from collinear chains of around 5 seeds (-l, --block-length), and extended greedily as far as possible, allowing up to -n minus 1 mappings at each query position. Genome number (-n) is automatically computed if sequence names follow PanSN-spec.

An additional parameter, -k, can also greatly affect graph structure by pruning matches shorter than a given threshold from the initial graph model.

In effect, -k N removes any match shorter than Nbp from the initial alignment.

This filter removes potentially ambiguous pairwise alignments from consideration in establishing the initial scaffold of the graph.

The initial graph is defined by parameters to wfmash and seqwish.

But due to the ambiguities generated across the many pairwise alignments we use as input, this graph can be locally very complex.

To regularize it we orchestrate a series of graph transformations.

First, with smoothxg, we "smooth" it by locally realigning sequences to each other with a traditional multiple sequence alignment (we specifically apply POA).

This process repeats multiple times to smooth over any boundary effects that may occur due to binning errors near MSA boundaries.

Finally, we apply gfaffix to remove forks where both alternatives have the same sequence.

We suggest using default parameters for initial tests.

For instance pggb -i in.fa.gz -o out would be a minimal build command for a pangenome from in.fa.gz.

The default parameters provide a good balance between runtime and graph quality for small-to-medium (1kbp-100Mbp) problems.

However, we find that parameters may still need to be adjusted to fine-tune pggb to a given problem.

Furthermore, it is a good idea to explore a range of settings of the pipeline's key graph-shaping parameters (-s, -p, -k) to appreciate the degree to which changes in parameters may or may-not affect results.

These parameters must be tuned so that the graph resolves structures of interest (variants and homologies) that are implied by the sequence space of the pangenome.

In preparation of a manuscript on pggb, we have developed a set of example pangenome builds for a collection of diverse species.

(These also use cross-validation against nucmer to evaluate graph quality.)

Examples (-n is optional if sequence names follow PanSN-spec):

- Human, whole genome, 90 haplotypes:

pggb -p 98 -s 10k -k 47 [-n 90]... - 15 helicobacter genomes, 5% divergence:

pggb -k 47 [-n 15], and 15 at higher (10%) divergencepggb -k 23 [-n 15] ... - Yeast genomes, 5% divergence:

pggb's defaults should work well. - Aligning 9 MHC class II assemblies from vertebrate genomes (5-10% divergence):

pggb -k 29 [-n 9] ... - A few thousand bacterial genomes

pggb -x auto [-n 2000] .... In general mapping sparsification (-x auto) is a good idea when you have many hundreds to thousands of genomes.

pggb defaults to using the number of threads as logical processors on the system (the thread count given by getconf _NPROCESSORS_ONLN).

Use -t to set an appropriate level of parallelism if you can't use all the processors on your system.

To guide graph building, pggb provides several visual diagnostic outputs.

Here, we explain them using a test from the data/HLA directory in this repo:

git clone --recursive https://github.com/pangenome/pggb

cd pggb

./pggb -i data/HLA/DRB1-3123.fa.gz -p 70 -s 500 -n 10 -t 16 -V 'gi|568815561' -o out -MThis yields a variation graph in GFA format, a multiple sequence alignment in MAF format (-M), and several diagnostic images (all in the directory out/). We specify -n because the sequences do not follow PanSN-spec, so the number of haplotypes can not be automatically computed.

We also call variants with -V with respect to the reference gi|568815561.

- The graph nodes’ are arranged from left to right forming the pangenome’s sequence.

- The colored bars represent the binned, linearized renderings of the embedded paths versus this pangenome sequence in a binary matrix.

- The black lines under the paths, so called links, represent the topology of the graph.

- Each colored rectangle represents a node of a path. The node’s x-coordinates are on the x-axis and the y-coordinates are on the y-axis, respectively.

- A bubble indicates that here some paths have a diverging sequence or it can represent a repeat region.

The pipeline is provided as a single script with configurable command-line options.

Users should consider taking this script as a starting point for their own pangenome project.

For instance, you might consider swapping out wfmash with minimap2 or another PAF-producing long-read aligner.

If the graph is small, it might also be possible to use abPOA or spoa to generate it directly.

On the other hand, maybe you're starting with an assembly overlap graph which can be converted to blunt-ended GFA using gimbricate.

You might have a validation process based on alignment of sequences to the graph, which should be added at the end of the process.

The resulting graph can then be manipulated with odgi for transformation, analysis, simplification, validation, interrogation, and visualization.

It can also be loaded into any of the GFA-based mapping tools, including vg map, mpmap, giraffe, and GraphAligner.

Alignments to the graph can be used to make variant calls (vg call) and coverage vectors over the pangenome, which can be useful for phylogeny and association analyses.

Using odgi matrix, we can render the graph in a sparse matrix format suitable for direct use in a variety of statistical frameworks, including phylogenetic tree construction, PCA, or association studies.

pggb's initial use is as a mechanism to generate variation graphs from the contig sets produced by the human pangenome project.

Although its design represents efforts to scale these approaches to collections of many human genomes, it is not intended to be human-specific.

It's straightforward to generate a pangenome graph by the all-pairs alignment of a set of input sequences. And it's good: this provides a completely unbiased view of the pangenome. However, all-to-all alignment scales very poorly, with the square of the number of sequences, making it untenable for even small collections of genomes (~10s). To make matters worse, existing standards for alignment are based on k-mer matching, which can require processing enormous numbers of seeds when aligning repetitive sequences.

We answer this new alignment issue with the mashmap and WFA-based algorithms in wfmash.

wfmash provides a practical way to generate alignments between the sequences.

Its design focuses on the long, collinear homologies that are often "orthologous" in a phylogenetic context.

Crucially, wfmash is robust to repetitive sequences, and it can be adjusted using probabilistic thresholds for segment alignment identity.

Finally, seqwish converts the alignments directly into a graph model.

This allows us to define the base graph structure using a few free parameters, as discussed above.

The manifold nature of typical variation graphs means that they are very likely to look linear locally.

By running a stochastic 1D layout algorithm that attempts to match graph distances (as given by paths) between nodes and

their distances in the layout, we execute a kind of multi-dimensional scaling (MDS). In the aggregate, we see that

regions that are linear (the chains of nodes and bubbles) in the graph tend to co-localize in the 1D sort.

Applying an MSA algorithm (in this case, abPOA or spoa) to each of these chunks enforces a local linearity and

homogenizes the alignment representation. This smoothing step thus yields a graph that is locally as we expect: partially

ordered, and linear as the base DNA molecules are, but globally can represent large structural variation. The homogenization

also rectifies issues with the initial wfa-based alignment.

You'll need wfmash, seqwish, smoothxg, odgi, and gfaffix in your shell's PATH.

These can be individually built and installed.

Then, put the pggb bash script in your path to complete installation.

Optionally, install bcftools, vcfbub, vcfwave, and vg for calling and normalizing variants, MultiQC for generating summarized statistics in a MultiQC report, or pigz to compress the output files of the pipeline.

To simplify installation and versioning, we have an automated GitHub action that pushes the current docker build to the GitHub registry. To use it, first pull the actual image (IMPORTANT: see also how to build docker locally):

docker pull ghcr.io/pangenome/pggb:latestOr if you want to pull a specific snapshot from https://github.com/orgs/pangenome/packages/container/package/pggb:

docker pull ghcr.io/pangenome/pggb:TAGYou can pull the docker image also from dockerhub:

docker pull pangenome/pggbAs an example, going in the pggb directory

git clone --recursive https://github.com/pangenome/pggb.git

cd pggbyou can run the container using the human leukocyte antigen (HLA) data provided in this repo:

docker run -it -v ${PWD}/data/:/data ghcr.io/pangenome/pggb:latest /bin/bash -c "pggb -i /data/HLA/DRB1-3123.fa.gz -p 70 -s 3000 -n 10 -t 16 -V 'gi|568815561' -o /data/out"The -v argument of docker run always expects a full path.

If you intended to pass a host directory, use absolute path.

This is taken care of by using ${PWD}.

Multiple pggb's tools use SIMD instructions that require AVX (like abPOA) or need it to improve performance.

The currently built docker image has -Ofast -march=sandybridge set.

This means that the docker image can run on processors that support AVX or later, improving portability, but preventing your system hardware from being fully exploited. In practice, this could mean that specific tools are up to 9 times slower. And that a pipeline runs ~30% slower compared to when using a native build docker image.

To achieve better performance, it is STRONGLY RECOMMENDED to build the docker image locally after replacing -march=sandybridge with -march=native and the Generic build type with Release in the Dockerfile:

sed -i 's/-march=sandybridge/-march=native/g' Dockerfile

sed -i 's/Generic/Release/g' Dockerfile To build a docker image locally using the Dockerfile, execute:

docker build --target binary -t ${USER}/pggb:latest .Staying in the pggb directory, we can run pggb with the locally built image:

docker run -it -v ${PWD}/data/:/data ${USER}/pggb /bin/bash -c "pggb -i /data/HLA/DRB1-3123.fa.gz -p 70 -s 3000 -n 10 -t 16 -V 'gi|568815561' -o /data/out"A script that handles the whole building process automatically can be found at https://github.com/nf-core/pangenome#building-a-native-container.

Many managed HPCs utilize Singularity as a secure alternative to docker. Fortunately, docker images can be run through Singularity seamlessly.

First pull the docker file and create a Singularity SIF image from the dockerfile. This might take a few minutes.

singularity pull docker://ghcr.io/pangenome/pggb:latestNext clone the pggb repo and cd into it

git clone --recursive https://github.com/pangenome/pggb.git

cd pggbFinally, run pggb from the Singularity image.

For Singularity, to be able to read and write files to a directory on the host operating system, we need to 'bind' that directory using the -B option and pass the pggb command as an argument.

singularity run -B ${PWD}/data:/data ../pggb_latest.sif pggb -i /data/HLA/DRB1-3123.fa.gz -p 70 -s 3000 -n 10 -t 16 -V 'gi|568815561' -o /data/outA script that handles the whole building process automatically can be found at https://github.com/nf-core/pangenome#building-a-native-container.

Bioconda (VCF NORMALIZATON IS CURRENTLY MISSING, SEE THIS ISSUE)

pggb recipes for Bioconda are available at https://anaconda.org/bioconda/pggb.

To install the latest version using Conda execute:

conda install -c bioconda pggbIf you get dependencies problems, try:

conda install -c bioconda -c conda-forge pggbgit clone https://github.com/ekg/guix-genomics

cd guix-genomics

GUIX_PACKAGE_PATH=. guix package -i pggbA nextflow DSL2 port of pggb is developed by the nf-core community. See nf-core/pangenome for more details.

When running a Singularity container on an Alma Linux node in HPC, you may encounter an 'Illegal option --' error. This issue arises due to an incompatibility between the Singularity container and the Alma Linux environment.

The which function from the Alma Linux 9 host machine is inherited by the Singularity container during execution. However, the container's Debian operating system may not be compatible with this function, leading to the error. Modifying the container's definition file (.def) alone is insufficient to resolve this issue persistently.

To address this problem, you have two options:

-

Unset the

whichfunction before running the container:Before executing your Singularity container, run the following command on the host machine:

unset -f whichThis command unsets the

whichfunction, preventing it from being inherited by the container. -

Use the

-eflag when running the container:Execute your Singularity container using the

-eflag:singularity exec -e ...The

-eflag prevents any host environment variables and functions from being inherited by the container.

Many thanks go to @Zethson and @Imipenem who started to implemented a MultiQC module for odgi stats. Using -m, --multiqc statistics are generated automatically, and summarized in a MultiQC report. If created, visualizations and layouts are integrated into the report, too. In the following an example excerpt:

pip install multiqc --userThe docker image already contains v1.11 of MultiQC.

Erik Garrison*, Andrea Guarracino*, Simon Heumos, Flavia Villani, Zhigui Bao, Lorenzo Tattini, Jörg Hagmann, Sebastian Vorbrugg, Santiago Marco-Sola, Christian Kubica, David G. Ashbrook, Kaisa Thorell, Rachel L. Rusholme-Pilcher, Gianni Liti, Emilio Rudbeck, Sven Nahnsen, Zuyu Yang, Mwaniki N. Moses, Franklin L. Nobrega, Yi Wu, Hao Chen, Joep de Ligt, Peter H. Sudmant, Nicole Soranzo, Vincenza Colonna, Robert W. Williams, Pjotr Prins, Building pangenome graphs, bioRxiv 2023.04.05.535718; doi: https://doi.org/10.1101/2023.04.05.535718

MIT