'bigdoc' lets you handle gigabyte-order files easily and with high performance. You can search for bytes or words in huge files, and read data or text from them.

It is licensed under the MIT license.

Search a file of megabyte or gigabyte order.

```java
package org.example;

import java.io.File;
import java.util.List;

import org.riversun.bigdoc.bin.BigFileSearcher;

public class Example {

    public static void main(String[] args) throws Exception {

        // Byte sequence to search for
        byte[] searchBytes = "hello world.".getBytes("UTF-8");

        File file = new File("/var/tmp/yourBigfile.bin");

        BigFileSearcher searcher = new BigFileSearcher();

        // Positions in the file at which the byte sequence was found
        List<Long> findList = searcher.searchBigFile(file, searchBytes);

        System.out.println("positions = " + findList);
    }
}
```

When used asynchronously, #cancel can be used to stop a search that is still in progress.

```java
package org.riversun.bigdoc.bin;

import java.io.File;
import java.io.UnsupportedEncodingException;
import java.util.List;

import org.riversun.bigdoc.bin.BigFileSearcher.OnRealtimeResultListener;

public class Example {

    public static void main(String[] args) throws UnsupportedEncodingException, InterruptedException {

        byte[] searchBytes = "sometext".getBytes("UTF-8");

        File file = new File("path/to/file");

        final BigFileSearcher searcher = new BigFileSearcher();

        searcher.setUseOptimization(true);
        searcher.setSubBufferSize(256);
        searcher.setSubThreadSize(Runtime.getRuntime().availableProcessors());

        // Describe what to search for and where
        final SearchCondition sc = new SearchCondition();

        sc.srcFile = file;
        sc.startPosition = 0;
        sc.searchBytes = searchBytes;

        // Receives the progress and the positions while the search is running
        sc.onRealtimeResultListener = new OnRealtimeResultListener() {

            @Override
            public void onRealtimeResultListener(float progress, List<Long> pointerList) {
                System.out.println("progress:" + progress + " pointerList:" + pointerList);
            }
        };

        // Run the search on a separate thread so it can be cancelled from this one
        final Thread th = new Thread(new Runnable() {

            @Override
            public void run() {
                List<Long> searchBigFileRealtime = searcher.searchBigFile(sc);
            }
        });

        th.start();

        // Let the search run for 1.5 seconds, then stop it
        Thread.sleep(1500);

        searcher.cancel();

        th.join();
    }
}
```

Search for a sequence of bytes in a big file

Tested on AWS t2.*

| CPU Instance | EC2 t2.2xlarge (vCPU x 8, 32 GiB) | EC2 t2.xlarge (vCPU x 4, 16 GiB) | EC2 t2.large (vCPU x 2, 8 GiB) | EC2 t2.medium (vCPU x 2, 4 GiB) |
|---|---|---|---|---|
| File Size | Time (sec) | Time (sec) | Time (sec) | Time (sec) |
| 10MB | 0.5s | 0.6s | 0.8s | 0.8s |
| 50MB | 2.8s | 5.9s | 13.4s | 12.8s |
| 100MB | 5.4s | 10.7s | 25.9s | 25.1s |
| 250MB | 15.7s | 32.6s | 77.1s | 74.8s |
| 1GB | 55.9s | 120.5s | 286.1s | - |
| 5GB | 259.6s | 566.1s | - | - |
| 10GB | 507.0s | 1081.7s | - | - |

Please Note

- Processing speed depends on the number of CPU cores (including hyper-threading), not on memory capacity.

- Results vary with the Java environment: the Java version, the compiler, and runtime optimization.
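
To make the first note concrete, the 10GB row of the table can be turned into an average throughput. The sketch below is only arithmetic on the published timings; the class and method names are hypothetical, and it assumes 1 GB = 1024 MB, which the table does not state.

```java
public class ThroughputSketch {

    /**
     * Average throughput in MB/s.
     * Assumption: 1 GB = 1024 MB (the table does not say which unit it uses).
     */
    static double throughputMBps(double sizeGB, double seconds) {
        return sizeGB * 1024.0 / seconds;
    }

    public static void main(String[] args) {
        // Timings taken from the 10GB row of the table
        double t2_2xlarge = throughputMBps(10, 507.0);  // vCPU x 8
        double t2_xlarge = throughputMBps(10, 1081.7);  // vCPU x 4

        System.out.println("t2.2xlarge MB/s = " + t2_2xlarge);
        System.out.println("t2.xlarge  MB/s = " + t2_xlarge);
        System.out.println("ratio           = " + (t2_2xlarge / t2_xlarge));
    }
}
```

Under that assumption the 8-vCPU instance averages roughly 20 MB/s and the 4-vCPU instance roughly 9.5 MB/s, a ratio of about 2.1, which is in line with the note that speed follows the number of cores.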

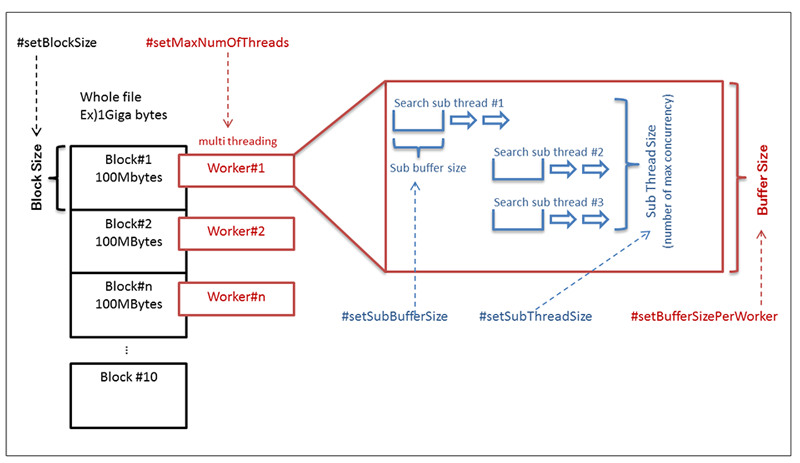

You can tune the performance using the following methods. They can be adjusted according to the number of CPU cores and the memory capacity.

- BigFileSearcher#setBlockSize

- BigFileSearcher#setMaxNumOfThreads

- BigFileSearcher#setBufferSizePerWorker

- BigFileSearcher#setBufferSize

- BigFileSearcher#setSubBufferSize

- BigFileSearcher#setSubThreadSize

BigFileSearcher searches for a sequence of bytes by dividing a big file into multiple blocks and using multiple workers to search those blocks concurrently. Each worker thread searches one block sequentially. The number of workers is specified by #setMaxNumOfThreads. Within a single worker thread, data is read into memory and searched in chunks of the size specified by #setBufferSize. The small area used to compare sequences of bytes during a search is called a window, and its size is specified by #setSubBufferSize. Multiple windows can be processed concurrently, and the number of concurrent operations within a worker is specified by #setSubThreadSize.
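
The block-and-worker idea described above can be sketched in plain Java. This is not bigdoc's implementation, only a minimal illustration under stated assumptions: it works on an in-memory byte array instead of a file, uses a naive byte-by-byte comparison, and every name in it (`BlockSearchSketch`, `search`, `scan`, `blockSize`, `numWorkers`) is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class BlockSearchSketch {

    /** Returns the start offset of every occurrence of pattern in data, in ascending order. */
    static List<Long> search(final byte[] data, final byte[] pattern, int blockSize, int numWorkers) {
        if (pattern.length == 0 || blockSize <= 0 || numWorkers <= 0) {
            throw new IllegalArgumentException("pattern must be non-empty; sizes must be positive");
        }
        ExecutorService pool = Executors.newFixedThreadPool(numWorkers);
        try {
            // One task per block; the pool runs at most numWorkers of them at a time
            List<Future<List<Long>>> futures = new ArrayList<>();
            for (int start = 0; start < data.length; start += blockSize) {
                final int from = start;
                final int to = Math.min(start + blockSize, data.length);
                Callable<List<Long>> task = () -> scan(data, pattern, from, to);
                futures.add(pool.submit(task));
            }
            // Blocks were submitted in file order, so concatenating keeps offsets sorted
            List<Long> result = new ArrayList<>();
            for (Future<List<Long>> f : futures) {
                result.addAll(f.get());
            }
            return result;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    /**
     * Finds matches that START in [from, to). A match may extend past 'to',
     * so a match straddling a block boundary is reported exactly once.
     */
    static List<Long> scan(byte[] data, byte[] pattern, int from, int to) {
        List<Long> hits = new ArrayList<>();
        int lastStart = Math.min(to - 1, data.length - pattern.length);
        for (int i = from; i <= lastStart; i++) {
            int j = 0;
            while (j < pattern.length && data[i + j] == pattern[j]) {
                j++;
            }
            if (j == pattern.length) {
                hits.add((long) i);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        byte[] data = "hello world. hello world.".getBytes(StandardCharsets.UTF_8);
        byte[] pattern = "hello world.".getBytes(StandardCharsets.UTF_8);
        // Blocks of 5 bytes force both matches to cross block boundaries
        System.out.println("positions = " + search(data, pattern, 5, 4)); // prints: positions = [0, 13]
    }
}
```

The point the sketch makes is the boundary handling: each worker owns the matches that begin in its block but is allowed to read past the block end, so no match is lost or counted twice when the data is split.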

See the Javadoc for details:

https://riversun.github.io/javadoc/bigdoc/

- Add the dependency to your Maven pom.xml file.

```xml
<dependency>
    <groupId>org.riversun</groupId>
    <artifactId>bigdoc</artifactId>
    <version>0.4.0</version>
</dependency>
```