a tensorflow implementation for scene understanding and object detection using Semantic segmentation. This project uses the DeepLab model to perform semantic segmentation on a sample input image or video. Expected outputs are semantic labels overlayed on the sample image or each frame on the video frame set.The models used in this project perform semantic segmentation. Semantic segmentation models focus on assigning semantic labels, such as sky, person, or car, to multiple objects and stuff in a single image.

DeepLab is a state-of-art deep learning model for semantic image segmentation, where the goal is to assign semantic labels (e.g., person, dog, cat and so on) to every pixel in the input image. In semantic segmentation, By putting an image into a neural network, it generates an output a category for every pixel in the image. Now the output is a discrete set of categories, much like in a classification task. But instead of assigning a single class to an image, we want to assign a class to every pixel in that image.

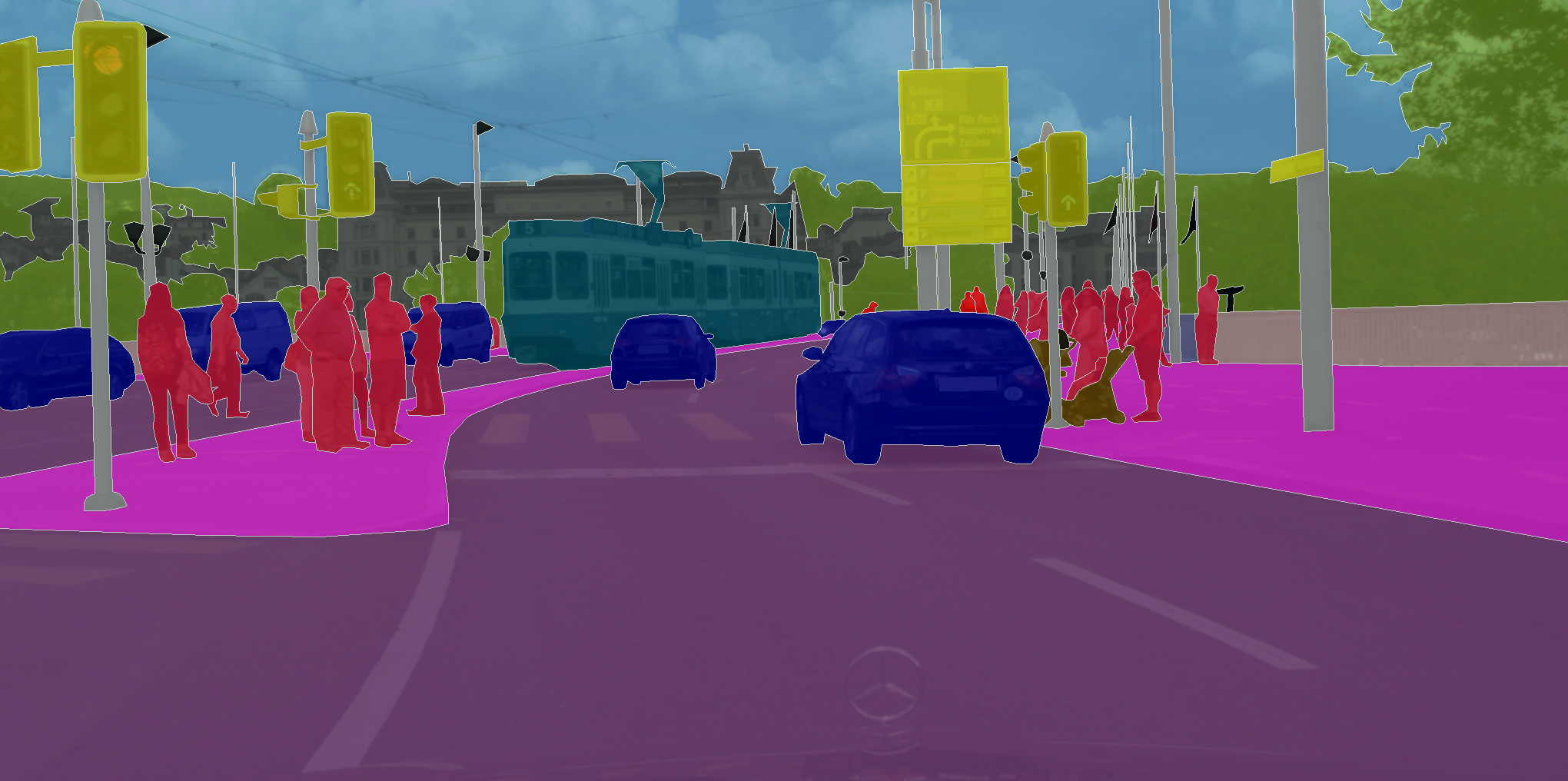

In the driving context, we aim to obtain a semantic understanding of the front driving scene throught the camera input. This is important for driving safety and an essential requirement for all levels of autonomous driving. The first step is to build the model and load the pre-trained weights. In this demo, we use the model checkpoint trained on Cityscapes dataset.

Following segemented images are from Cityscapes dataset result.

| Road, Pedestrian, Bicycle, Traffic sign | Road, Car,Traffic Sign, Traffic Light |

|---|---|

|

|

- Load the latest version of the pretrained DeepLab model

- Load the colormap from the PASCAL VOC dataset

- Adds colors to various labels, such as "gray" for car, "pink" for people, "green" for bicycle and more as shown in the below result image

- Visualize an image, and add an overlay of colors on various regions

Selected Segmented lables are as follows:

LABEL_NAMES = np.asarray([

'background', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',

'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',

'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tv'

])

- very expensive to label this data (labeling every pixel)

- computationally expensive to maintain spatial information in each convolutional layer

Cars are detected and overlayed with "gray" colored segmented region.

| 10s of Driving | 10sec Segmented Result |

|---|---|

|

|

| Original Driving HQ Video | Segmented HQ Video |

|

|

Cars are detected and overlayed with "gray" colored segmented region. Pedestrians are detected and overlayed with "pink" colored segemented region.